Background

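If you have ever run commands such as top, free, df, cat /proc/cpuinfo or cat /proc/meminfo inside a container to check system resources, you will have noticed that they report the resources of the physical machine (the host) rather than those of the container. This causes problems for some applications.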

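For example, the JVM sizes its garbage collection (such as the number of GC threads) based on the number of CPUs it detects. If the container is limited to, say, 2 cores but the JVM sees the host's core count, the Java process may fail to start or behave abnormally.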
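The Python sketch below makes the mismatch concrete. It is not how the JVM itself detects CPUs. It compares the host-wide CPU count from `os.cpu_count()` with the CPU count implied by a CFS quota. It assumes the cgroup v1 files `/sys/fs/cgroup/cpu/cpu.cfs_quota_us` and `cpu.cfs_period_us` are visible in the container (on cgroup v2 the limit lives in `/sys/fs/cgroup/cpu.max` instead). The helper names are hypothetical.

```python
import math
import os
from typing import Optional

# Assumed cgroup v1 locations inside the container (cgroup v2 uses /sys/fs/cgroup/cpu.max).
QUOTA_FILE = "/sys/fs/cgroup/cpu/cpu.cfs_quota_us"
PERIOD_FILE = "/sys/fs/cgroup/cpu/cpu.cfs_period_us"


def effective_cpus(quota_us: int, period_us: int, host_cpus: int) -> int:
    """CPUs usable under a CFS quota; a quota of -1 means 'no limit'."""
    if quota_us <= 0 or period_us <= 0:
        return host_cpus
    # e.g. quota 200000 / period 100000 -> 2 CPUs; partial CPUs are rounded up.
    return min(host_cpus, math.ceil(quota_us / period_us))


def read_int(path: str) -> Optional[int]:
    """Read a single integer from a cgroup file, or None if it is unavailable."""
    try:
        with open(path) as f:
            return int(f.read().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    host_cpus = os.cpu_count() or 1  # host-wide count, not cgroup-aware
    quota, period = read_int(QUOTA_FILE), read_int(PERIOD_FILE)
    if quota is None or period is None:
        print(f"no cgroup v1 CPU quota found; os.cpu_count() = {host_cpus}")
    else:
        limit = effective_cpus(quota, period, host_cpus)
        print(f"os.cpu_count() = {host_cpus}, CPUs allowed by the quota = {limit}")
```

In a container started with a 2-core limit on a 64-core host, the first number would be 64 and the second 2. That gap is exactly what a non-container-aware runtime stumbles over.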

Cause

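A container's resources are limited through cgroups, but the /proc inside the container is served by the same host kernel. Files such as /proc/meminfo, /proc/cpuinfo, /proc/stat and /proc/uptime report system-wide values and know nothing about the cgroup limits the user applied to the container. Tools like free and top read these files, so inside the container they return the host's resources.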
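`free` essentially reports fields from `/proc/meminfo`, so whatever that file says is what the tool shows. The minimal Python parser below makes this explicit. It is a sketch, not procps' code, and the function name is hypothetical. Run inside a container without lxcfs, its `MemTotal` is the host's total memory.

```python
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; most values are in kB."""
    fields: Dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            fields[key.strip()] = int(parts[0])
    return fields


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            info = parse_meminfo(f.read())
        print(f"MemTotal: {info.get('MemTotal')} kB")
    except OSError:
        print("/proc/meminfo not available (not a Linux system?)")
```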

Solution

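The most direct approach is not to mount the host's /proc into the container at all, but then the container cannot obtain some resource information.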

The other approach is to use lxcfs.

lxcfs

LXCFS is a small FUSE filesystem written with the intention of making Linux containers feel more like a virtual machine. It started as a side-project of LXC but is useable by any runtime.

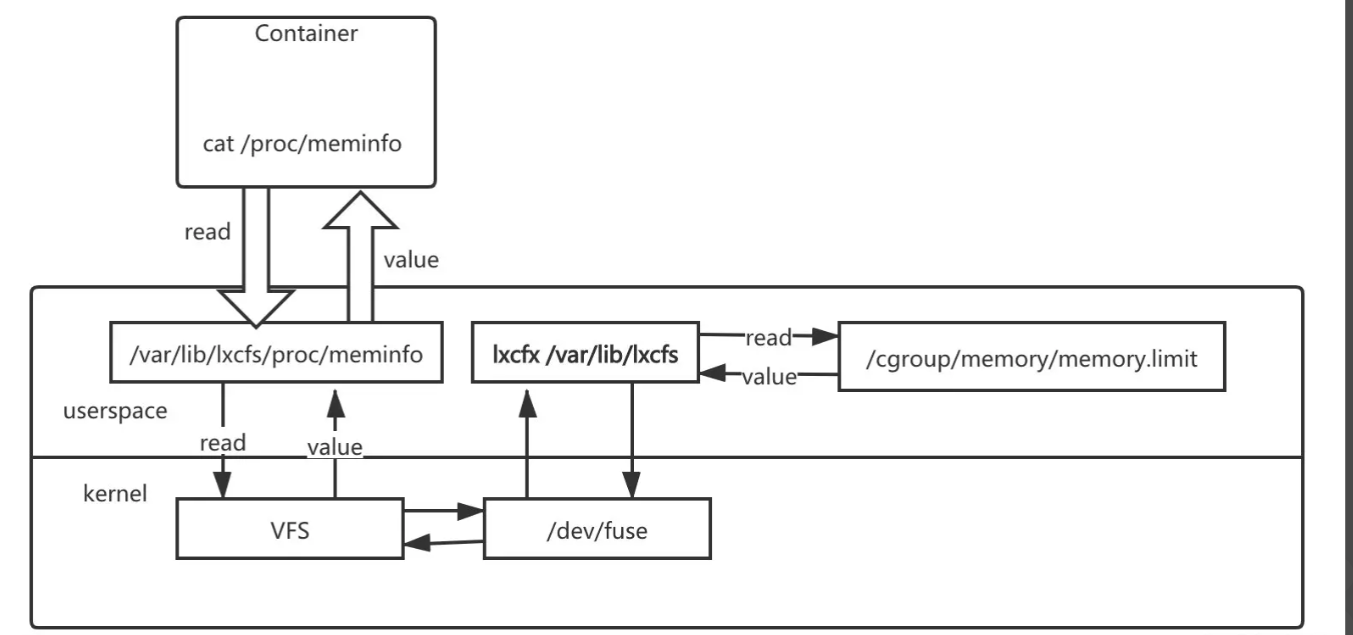

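lxcfs is a small FUSE filesystem. It reads each container's resource limits and usage from its cgroups and exposes them as files. These files are mounted as volumes over the corresponding files in the container's /proc. When applications inside the container then read /proc, they actually get the container's real resource limits and usage.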

How it works

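The host file /var/lib/lxcfs/proc/meminfo is mounted onto /proc/meminfo in the container. When a process in the container reads this file, the lxcfs FUSE implementation (via /dev/fuse) determines the cgroup of the calling process and returns values based on that cgroup's memory limit, so the application sees the correct resources. CPU limits work the same way.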
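The function below is a rough illustration of the idea. It is a Python sketch, not the actual lxcfs C implementation, which adjusts many more fields. It rewrites `MemTotal` and `MemFree` in the host's meminfo text using two assumed cgroup v1 values: the memory limit (`memory.limit_in_bytes`) and the current usage (`memory.usage_in_bytes`). An unset v1 limit appears as a very large number, so the total is capped at the host's memory.

```python
def virtualize_meminfo(host_meminfo: str, limit_bytes: int, usage_bytes: int) -> str:
    """Return host meminfo text with MemTotal/MemFree replaced by cgroup-based values."""
    lines = host_meminfo.splitlines()
    host_total_kb = next(int(ln.split()[1]) for ln in lines if ln.startswith("MemTotal:"))
    # An unlimited cgroup reports a huge limit, so never report more than the host has.
    total_kb = min(host_total_kb, limit_bytes // 1024)
    free_kb = max(0, total_kb - usage_bytes // 1024)
    out = []
    for line in lines:
        if line.startswith("MemTotal:"):
            line = f"MemTotal:       {total_kb} kB"
        elif line.startswith("MemFree:"):
            line = f"MemFree:        {free_kb} kB"
        out.append(line)  # all other fields are passed through unchanged
    return "\n".join(out)


if __name__ == "__main__":
    host = "MemTotal:       16303412 kB\nMemFree:         8000000 kB\nCached:          2000000 kB"
    # A 200 MiB limit with 50 MiB in use:
    print(virtualize_meminfo(host, 200 * 1024 * 1024, 50 * 1024 * 1024))
```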

Installation

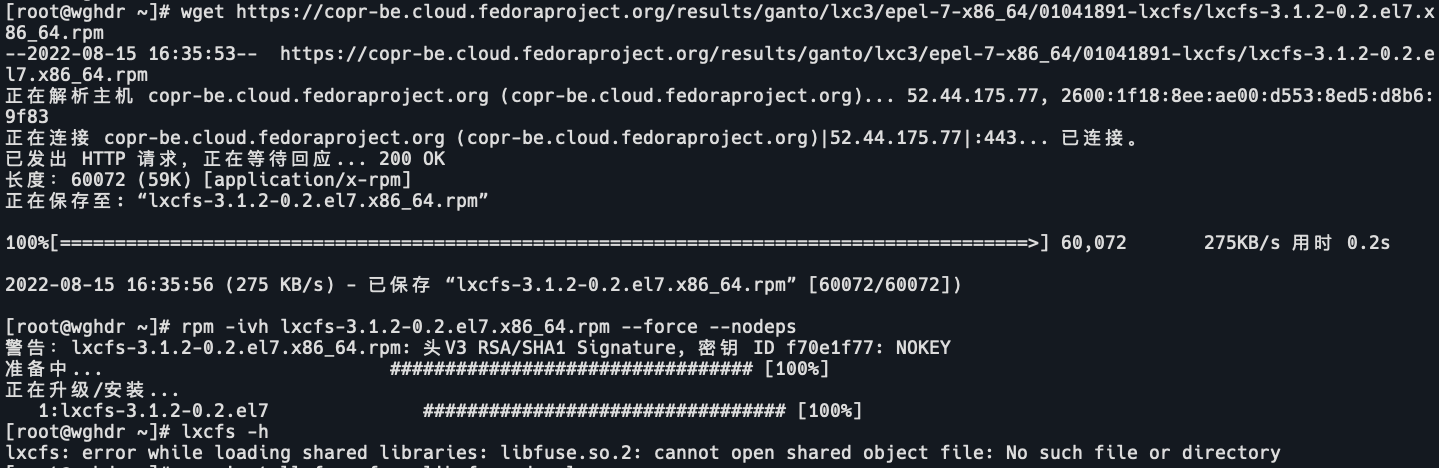

1.下载

wget https://copr-be.cloud.fedoraproject.org/results/ganto/lxc3/epel-7-x86_64/01041891-lxcfs/lxcfs-3.1.2-0.2.el7.x86_64.rpm

rpm -ivh lxcfs-3.1.2-0.2.el7.x86_64.rpm --force --nodeps安装完会报错:

lxcfs: error while loading shared libraries: libfuse.so.2: cannot open shared object file: No such file or directory

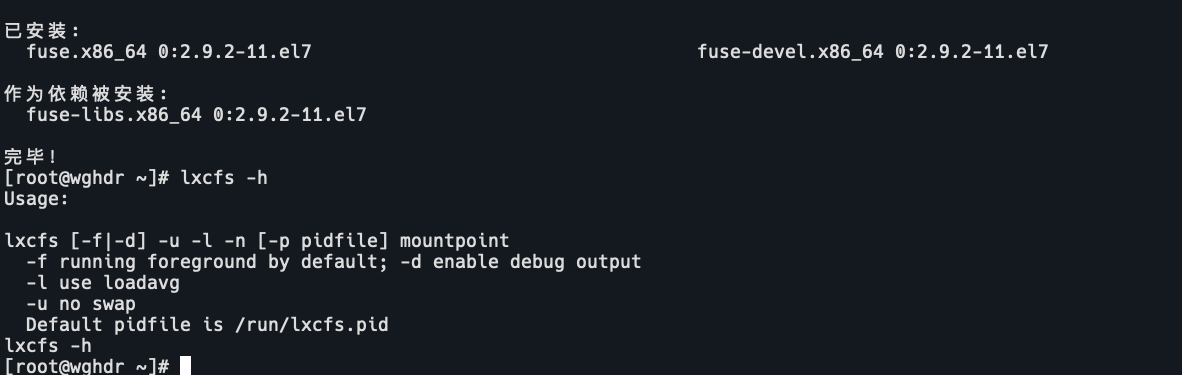

yum -y install fuse fuse-lib fuse-devel

lxcfs -h

2. Check the systemd unit file

```shell
cat /usr/lib/systemd/system/lxcfs.service
```

```ini
[Unit]
Description=FUSE filesystem for LXC
ConditionVirtualization=!container
Before=lxc.service
Documentation=man:lxcfs(1)

[Service]
ExecStart=/usr/bin/lxcfs /var/lib/lxcfs/
KillMode=process
Restart=on-failure
ExecStopPost=-/bin/fusermount -u /var/lib/lxcfs
Delegate=yes
ExecReload=/bin/kill -USR1 $MAINPID

[Install]
WantedBy=multi-user.target
```

3. Start lxcfs

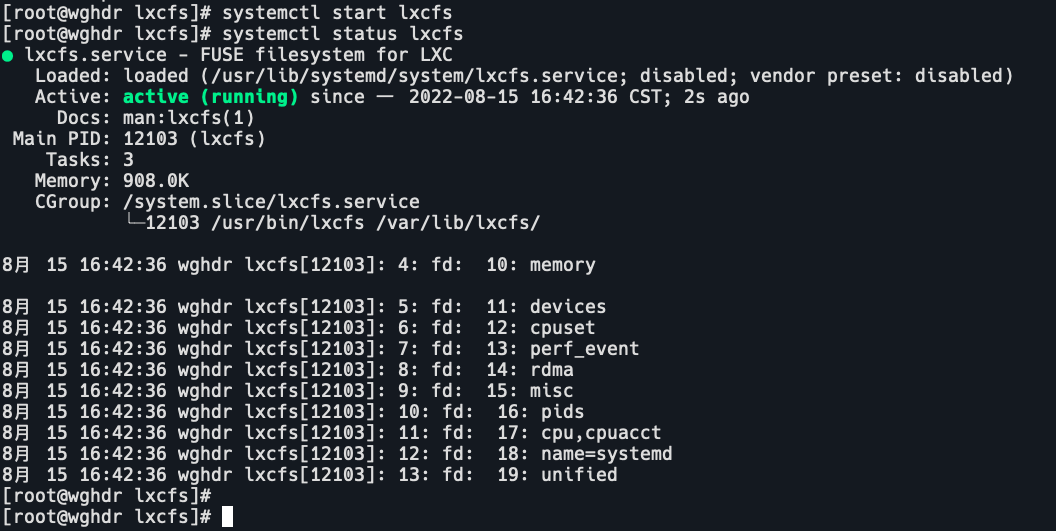

```shell
systemctl start lxcfs
systemctl status lxcfs
```
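Because of `ExecStart=/usr/bin/lxcfs /var/lib/lxcfs/`, the service mounts a FUSE filesystem at `/var/lib/lxcfs`. Besides `systemctl status`, you can confirm the mount by looking the mount point up in `/proc/mounts`, which the Python sketch below does. The expected type `fuse.lxcfs` is what this mount typically looks like, but treat it as an assumption and compare with `mount | grep lxcfs` on your host. The function name is hypothetical.

```python
import os
from typing import Optional


def mount_fstype(mounts_text: str, mountpoint: str = "/var/lib/lxcfs") -> Optional[str]:
    """Return the filesystem type mounted at mountpoint, or None if nothing is mounted there."""
    fstype = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts columns: device, mount point, fstype, options, dump, pass
        if len(fields) >= 3 and fields[1] == mountpoint:
            fstype = fields[2]  # a later entry shadows an earlier one at the same point
    return fstype


if __name__ == "__main__":
    try:
        with open("/proc/mounts") as f:
            print("fstype at /var/lib/lxcfs:", mount_fstype(f.read()))
    except OSError:
        print("/proc/mounts not available")
    print("meminfo exported:", os.path.exists("/var/lib/lxcfs/proc/meminfo"))
```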

Verification

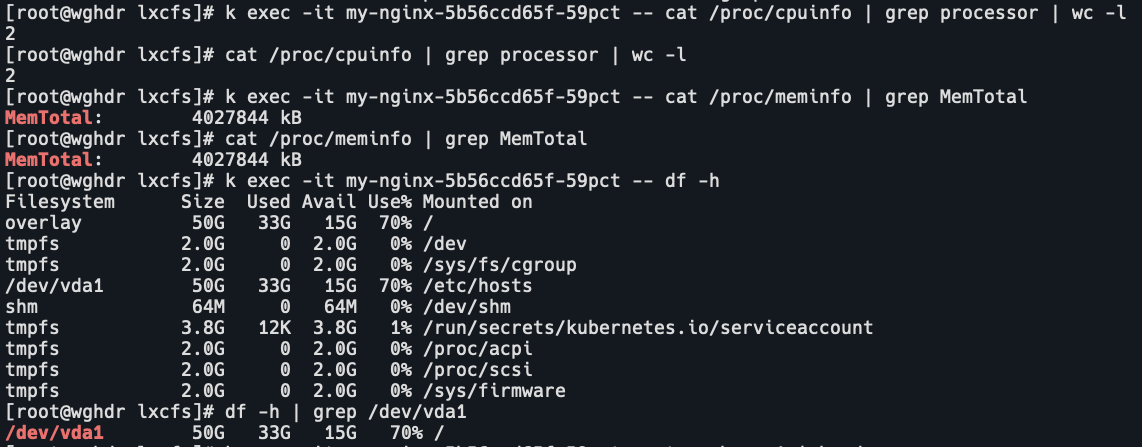

A container without lxcfs

```shell
# CPU count: inside the pod, then on the host (k is an alias for kubectl)
k exec -it my-nginx-5b56ccd65f-59pct -- cat /proc/cpuinfo | grep processor | wc -l
cat /proc/cpuinfo | grep processor | wc -l

# Memory
k exec -it my-nginx-5b56ccd65f-59pct -- cat /proc/meminfo
cat /proc/meminfo

# Disk
k exec -it my-nginx-5b56ccd65f-59pct -- df -h
df -h

# top snapshot
k exec -it my-nginx-5b56ccd65f-59pct -- top -b -n 1
top -b -n 1
```

As shown, a container without lxcfs gets exactly the host's resource information.
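If you would rather script the CPU comparison, a small helper can count CPUs in `/proc/cpuinfo` text captured on the host and in the pod (for example from the `k exec ... -- cat /proc/cpuinfo` output). The helper is hypothetical, and it is slightly stricter than the grep because it only counts lines whose key is exactly `processor`. Without lxcfs the two counts come out equal.

```python
def count_processors(cpuinfo_text: str) -> int:
    """Count entries whose key is exactly 'processor' (roughly `grep processor | wc -l`)."""
    return sum(1 for line in cpuinfo_text.splitlines()
               if line.split(":", 1)[0].strip() == "processor")


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("processors:", count_processors(f.read()))
    except OSError:
        print("/proc/cpuinfo not available")
```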

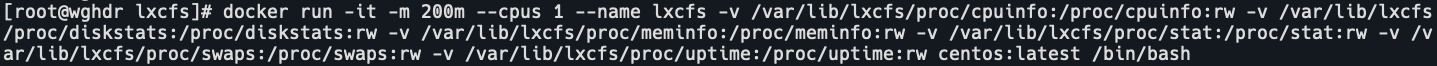

A container with lxcfs

Start a container limited to 200 MB of memory (`-m 200m`) and 1 CPU, bind-mounting the lxcfs files over the matching files in /proc:

```shell
docker run -it -m 200m --cpus 1 --name lxcfs \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  centos:latest /bin/bash
```

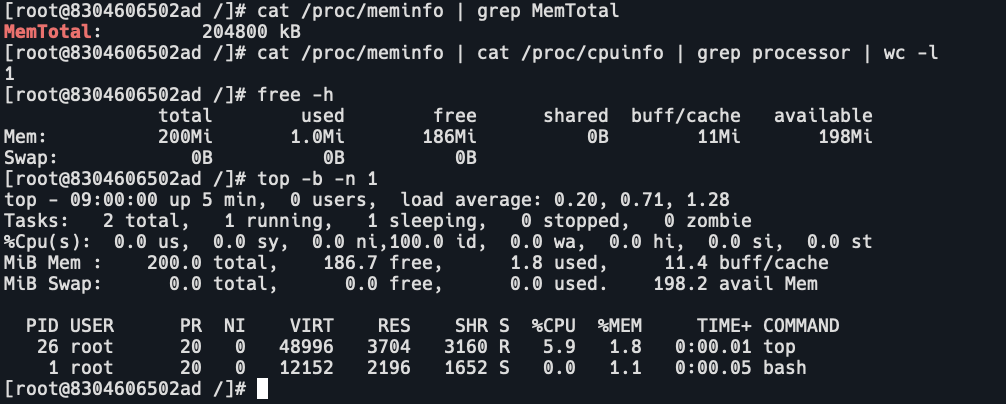

Check the system resources inside this container.

The resources reported inside the container now reflect its actual limits.

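Using lxcfs in k8s

To use lxcfs in k8s:

- lxcfs must run on every node in the cluster, and
- the lxcfs files must be mounted over the corresponding files in /proc in every container.

The first requirement is met by deploying lxcfs as a DaemonSet.

For the mounts, you can define hostPath volumes in the Pod YAML that point at the files under /var/lib/lxcfs/proc and mount them onto the matching /proc files, following the docker run command above.

However, editing every YAML by hand is extremely tedious. An automated alternative is an admission webhook: you define a rule so that the /proc mounts are injected into containers automatically whenever a Pod is created.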
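A mutating webhook's job can be sketched as follows. When the API server sends an AdmissionReview for a new Pod, the webhook replies with a base64-encoded JSON Patch. The patch adds hostPath volumes for the lxcfs files and mounts them over `/proc/...` in every container. This is a hypothetical Python illustration, not the lxcfs-admission-webhook project's code, whose volume names and file list may differ. The file list mirrors the `docker run` example, and the HTTPS server part is omitted.

```python
import base64
import json
from typing import Any, Dict, List

# Hypothetical file list, mirroring the docker run example.
LXCFS_FILES = ["cpuinfo", "diskstats", "meminfo", "stat", "swaps", "uptime"]


def _append_ops(path: str, existing: Any, items: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """JSON Patch ops appending items to the array at path, creating the array if absent."""
    if not existing:
        return [{"op": "add", "path": path, "value": items}]
    return [{"op": "add", "path": path + "/-", "value": item} for item in items]


def lxcfs_patch(pod: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Build JSON Patch ops that mount the lxcfs files into every container of the Pod."""
    spec = pod.get("spec", {})
    volumes = [{"name": "lxcfs-proc-" + f,
                # type File: the file must already exist on the node (lxcfs creates it)
                "hostPath": {"path": "/var/lib/lxcfs/proc/" + f, "type": "File"}}
               for f in LXCFS_FILES]
    mounts = [{"name": "lxcfs-proc-" + f, "mountPath": "/proc/" + f} for f in LXCFS_FILES]
    ops = _append_ops("/spec/volumes", spec.get("volumes"), volumes)
    for i, container in enumerate(spec.get("containers", [])):
        ops += _append_ops(f"/spec/containers/{i}/volumeMounts",
                           container.get("volumeMounts"), mounts)
    return ops


def admission_response(review: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap the patch in an admission.k8s.io/v1 AdmissionReview response."""
    request = review["request"]
    patch = lxcfs_patch(request["object"])
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }
```

The two branches in `_append_ops` exist because JSON Patch cannot append with `/-` to an array that does not exist yet. For a Pod without volumes, the whole array is added in one operation.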

Deployment

```shell
git clone https://github.com/denverdino/lxcfs-admission-webhook.git
cd lxcfs-admission-webhook/deployment
cat lxcfs-daemonset.yaml
```

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: lxcfs
  labels:
    app: lxcfs
spec:
  selector:
    matchLabels:
      app: lxcfs
  template:
    metadata:
      labels:
        app: lxcfs
    spec:
      hostPID: true
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: lxcfs
        image: registry.cn-hangzhou.aliyuncs.com/denverdino/lxcfs:3.1.2
        imagePullPolicy: Always
        securityContext:
          privileged: true
        volumeMounts:
        - name: cgroup
          mountPath: /sys/fs/cgroup
        - name: lxcfs
          mountPath: /var/lib/lxcfs
          mountPropagation: Bidirectional
        - name: usr-local
          mountPath: /usr/local
        - name: usr-lib64
          mountPath: /usr/lib64
      volumes:
      - name: cgroup
        hostPath:
          path: /sys/fs/cgroup
      - name: usr-local
        hostPath:
          path: /usr/local
      - name: usr-lib64
        hostPath:
          path: /usr/lib64
      - name: lxcfs
        hostPath:
          path: /var/lib/lxcfs
          type: DirectoryOrCreate
```

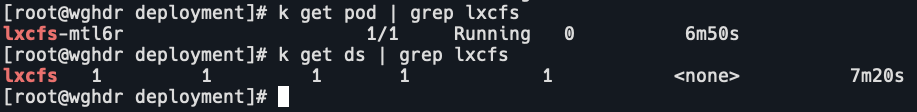

k apply -f lxcfs-daemonset.yaml

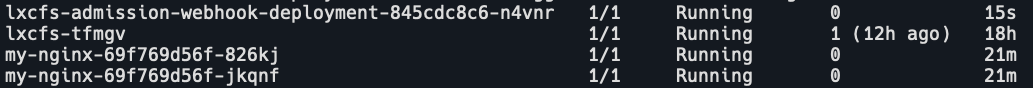

k get pod | grep lxcfs

查看admission是否开启

k api-versions | grep 'admission'

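Enabling the admission webhook

My cluster runs Kubernetes 1.22. That release removed the v1beta1 versions of certificates.k8s.io and admissionregistration.k8s.io that the project uses, so the scripts and manifests have to be switched to v1. If your cluster is older than 1.19, you can skip these changes.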

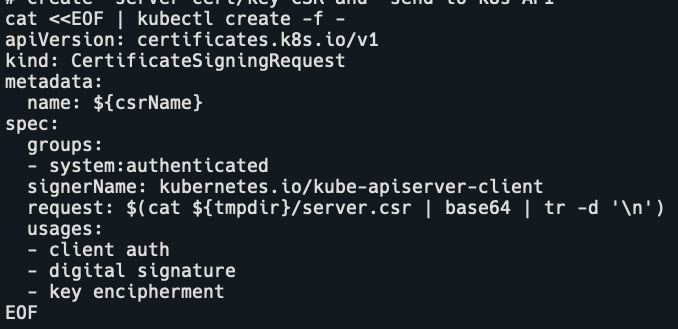

```shell
sed -i 's/certificates.k8s.io\/v1beta1/certificates.k8s.io\/v1/g' deployment/webhook-create-signed-cert.sh
sed -i 's/admissionregistration.k8s.io\/v1beta1/admissionregistration.k8s.io\/v1/g' mutatingwebhook-ca-bundle.yaml
sed -i 's/admissionregistration.k8s.io\/v1beta1/admissionregistration.k8s.io\/v1/g' mutatingwebhook.yaml
sed -i 's/admissionregistration.k8s.io\/v1beta1/admissionregistration.k8s.io\/v1/g' validatingwebhook.yaml
# In 1.22 (certificates.k8s.io/v1), csr.spec must specify signerName
sed -i 'N;94 i \ signerName: kubernetes.io/kube-apiserver-client' webhook-create-signed-cert.sh
```
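The apiVersion edits above are plain text substitutions. If you prefer not to hand-escape sed patterns, here is an equivalent Python sketch for the apiVersion part only. It does not insert signerName, and the script name in the usage comment is hypothetical. Other API groups such as `apps/v1beta1` are deliberately left untouched.

```python
import re
import sys

# The two API groups the sed commands switch from v1beta1 to v1.
_OLD_API = re.compile(r"\b(certificates\.k8s\.io|admissionregistration\.k8s\.io)/v1beta1\b")


def upgrade_api_versions(text: str) -> str:
    """Replace <group>/v1beta1 with <group>/v1 for the two groups above."""
    return _OLD_API.sub(r"\1/v1", text)


if __name__ == "__main__":
    # Usage (hypothetical): python upgrade_api.py mutatingwebhook.yaml validatingwebhook.yaml
    for path in sys.argv[1:]:
        with open(path) as f:
            new_text = upgrade_api_versions(f.read())
        with open(path, "w") as f:
            f.write(new_text)
```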

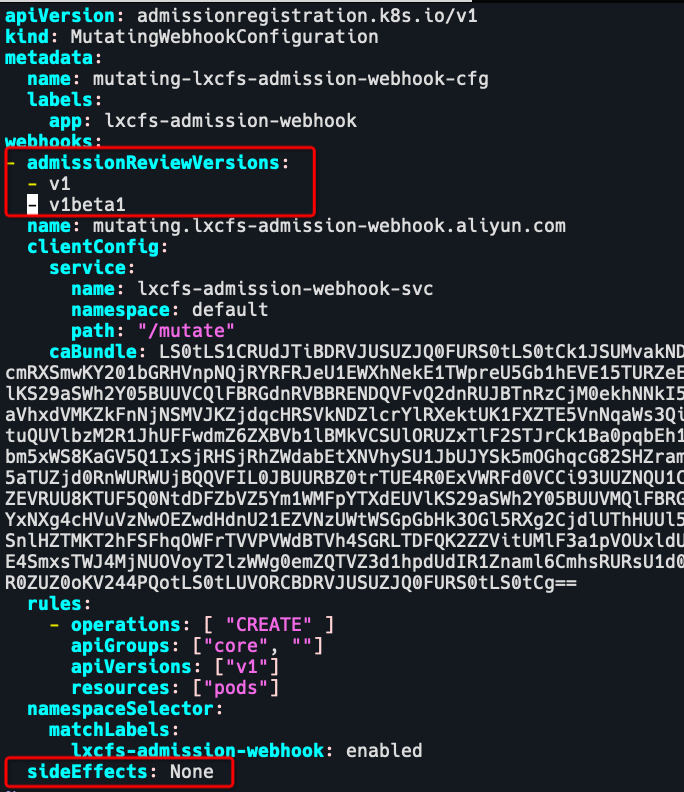

```shell
# In admissionregistration.k8s.io/v1, MutatingWebhookConfiguration and ValidatingWebhookConfiguration
# must set admissionReviewVersions and sideEffects on every webhook
vim deployment/mutatingwebhook-ca-bundle.yaml
vim deployment/mutatingwebhook.yaml
vim deployment/validatingwebhook.yaml
```
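Before running the install script, you can sanity-check the edited manifests with the sketch below. It reports webhook entries that lack `admissionReviewVersions` or `sideEffects`, or whose `sideEffects` is not one of the two values v1 accepts (`None`, `NoneOnDryRun`). It works on an already-parsed dict, for example from PyYAML's `yaml.safe_load`, a third-party library the sketch itself does not import. The function name is hypothetical.

```python
from typing import Any, Dict, List

# Fields each webhook entry must set under admissionregistration.k8s.io/v1.
REQUIRED_FIELDS = ("admissionReviewVersions", "sideEffects")
ALLOWED_SIDE_EFFECTS = {"None", "NoneOnDryRun"}


def webhook_config_problems(config: Dict[str, Any]) -> List[str]:
    """List missing or invalid v1 fields in a (Mutating|Validating)WebhookConfiguration."""
    problems = []
    for i, hook in enumerate(config.get("webhooks", [])):
        for field in REQUIRED_FIELDS:
            if field not in hook:
                problems.append(f"webhooks[{i}].{field} is missing")
        side_effects = hook.get("sideEffects")  # YAML `None` is the string "None"
        if side_effects is not None and side_effects not in ALLOWED_SIDE_EFFECTS:
            problems.append(f"webhooks[{i}].sideEffects={side_effects!r} is not allowed in v1")
    return problems
```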

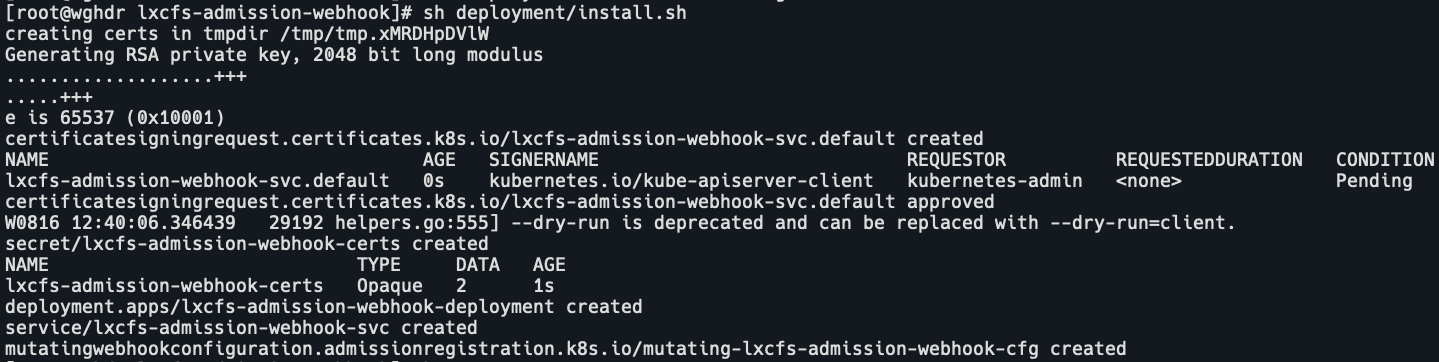

```shell
sh deployment/install.sh
```

Check the deployment:

```shell
k get svc,csr,deployment,pod,mutatingwebhookconfiguration | grep lxcfs
```

Testing

The MutatingWebhookConfiguration specifies a namespaceSelector:

```yaml
namespaceSelector:
  matchLabels:
    lxcfs-admission-webhook: enabled
```

so the default namespace has to be labeled:

```shell
k label namespace default lxcfs-admission-webhook=enabled
```
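The `matchLabels` semantics explain why the label is needed. A namespace is selected only if it carries every listed key with the same value, and an empty selector matches everything. The sketch below covers `matchLabels` only; `matchExpressions` is left out, and the function name is hypothetical.

```python
from typing import Dict


def selector_matches(match_labels: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True if every key/value in matchLabels is present in labels (empty selector matches all)."""
    return all(labels.get(key) == value for key, value in match_labels.items())


if __name__ == "__main__":
    selector = {"lxcfs-admission-webhook": "enabled"}
    print(selector_matches(selector, {}))  # False: namespace not labeled yet
    print(selector_matches(selector, {"lxcfs-admission-webhook": "enabled"}))  # True after labeling
```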

Create Pods (through a Deployment)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      run: my-nginx
  replicas: 2
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        resources:
          requests:
            memory: "200Mi"
            cpu: "100m"
          limits:
            memory: "200Mi"
            cpu: "100m"
```

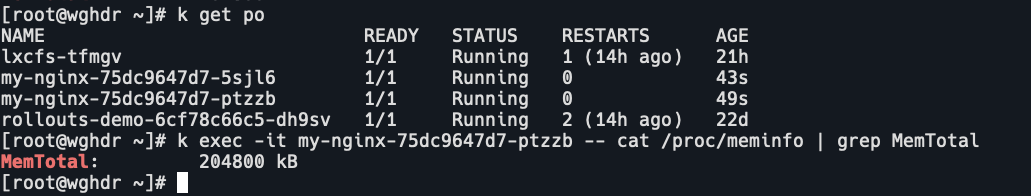

As shown, the memory inside the Pod is now the configured limit of 200Mi rather than the host's memory.
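The limits in the manifest use Kubernetes quantity notation. The parser below works out what numbers to expect inside the Pod. It is a Python sketch that covers only the common suffixes, not the full Kubernetes quantity grammar, and its names are hypothetical. It shows that `200Mi` is 209715200 bytes, i.e. 204800 kB, the `MemTotal` to look for in `/proc/meminfo` if lxcfs reports the limit as total memory. It also shows that `100m` CPU is 0.1 core, which with the default 100 ms CFS period typically becomes a quota of 10000 µs.

```python
from decimal import Decimal

# Binary (Ki, Mi, ...) and decimal (k, M, ...) suffixes, plus milli ("m").
_SUFFIXES = {
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30), "Ti": Decimal(2**40),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9), "T": Decimal(10**12),
    "m": Decimal("0.001"),
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a Kubernetes quantity such as '200Mi' or '100m' to a plain number."""
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):  # try two-letter suffixes first
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(quantity)


if __name__ == "__main__":
    mem_bytes = int(parse_quantity("200Mi"))
    cpu_cores = parse_quantity("100m")
    print("memory limit:", mem_bytes, "bytes =", mem_bytes // 1024, "kB")
    # CFS quota for the default 100 ms (100000 us) period:
    print("cpu limit:", cpu_cores, "cores -> cfs_quota_us =", int(cpu_cores * 100000))
```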