Environment

This post uses kube-vip to deploy a highly available k8s cluster. If you would rather deploy it manually, see: https://wghdr.top/archives/2078

The cluster has three masters and one node.

master1: 10.2.10.101

master2: 10.2.10.102

master3: 10.2.10.103

node: 10.2.10.104

NIC name: ens192

POD_CIDR: 10.43.0.0/16

SVC_CIDR: 10.42.0.0/16

MASTER_VIP: 10.2.10.125

docker version: 19.03.15

k8s version: 1.20.15

The environment variables above need to be changed to match your own environment.
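When changing these values, it helps to confirm that no host address or the VIP falls inside the pod or service CIDR. The following is a minimal sketch of such a check in plain shell arithmetic; the helper names `ip_to_int` and `ip_in_cidr` are made up for this illustration and are not part of any tool used in this post.

```shell
#!/bin/sh
# Hypothetical helpers, written for this example only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1            # split the address on dots into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed (exit 0) if address $1 lies inside CIDR $2, fail otherwise.
ip_in_cidr() (
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
)

POD_CIDR=10.43.0.0/16
SVC_CIDR=10.42.0.0/16

# Masters, node and VIP from the list above.
for ip in 10.2.10.101 10.2.10.102 10.2.10.103 10.2.10.104 10.2.10.125; do
  for cidr in "$POD_CIDR" "$SVC_CIDR"; do
    if ip_in_cidr "$ip" "$cidr"; then
      echo "conflict: $ip is inside $cidr"
    fi
  done
done
echo "check finished"
```

With the addresses used in this post the loop reports no conflict; after substituting your own values, any `conflict:` line points at an address that should be moved.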

Install epel-release first, then install ansible. After installation, edit the hosts file.

yum -y install epel-release

yum -y install ansible

cat /etc/ansible/hosts

[master]

10.2.10.101

[add_master]

10.2.10.102

10.2.10.103

[add_node]

10.2.10.104

System initialization script
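The initialization playbook in this section writes several files from shell heredocs. Whether such a task redirects with `>` or `>>` matters once the playbook is run more than once: appending duplicates the content on every run, which is harmless for a sysctl file but produces invalid JSON for something like daemon.json. A small sketch of the difference, using a throwaway temp directory and a hypothetical `write_conf` helper:

```shell
#!/bin/sh
# Hypothetical helper: write one sysctl line to file $1.
# $2 selects "append" (>>) or anything else for overwrite (>).
write_conf() {
  if [ "$2" = "append" ]; then
    cat >> "$1" << EOF
net.ipv4.ip_forward = 1
EOF
  else
    cat > "$1" << EOF
net.ipv4.ip_forward = 1
EOF
  fi
}

tmp=$(mktemp -d)

# Simulate running the same task twice in each mode.
write_conf "$tmp/append.conf" append
write_conf "$tmp/append.conf" append
write_conf "$tmp/overwrite.conf" overwrite
write_conf "$tmp/overwrite.conf" overwrite

# Count how many times the setting now appears in each file.
echo "append:    $(grep -c ip_forward "$tmp/append.conf")"
echo "overwrite: $(grep -c ip_forward "$tmp/overwrite.conf")"
```

The appended file ends up with the line twice, the overwritten one with a single copy, so overwriting is the safer choice for files that the playbook owns entirely.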

---
- hosts: all
  remote_user: root
  any_errors_fatal: no
  gather_facts: no
  tasks:
    - name: "stop firewalld"
      shell: systemctl stop firewalld

    - name: "stop selinux"
      lineinfile:
        dest: /etc/selinux/config
        regexp: "SELINUX=enforcing"
        line: "SELINUX=disabled"

    - name: "swap off"
      shell: swapoff -a

    # br_netfilter must be loaded before the net.bridge.* keys can be applied
    - name: "modprobe"
      shell: modprobe br_netfilter

    # ">" overwrites the file so that re-running the playbook does not duplicate entries
    - name: "add kernel file"
      shell:
        cmd: |
          cat > /etc/sysctl.d/k8s.conf << EOF
          vm.max_map_count = 262144
          net.bridge.bridge-nf-call-ip6tables = 1
          net.bridge.bridge-nf-call-iptables = 1
          net.ipv4.ip_forward = 1
          vm.swappiness = 1
          kernel.pid_max = 1000000
          fs.inotify.max_user_instances = 524288
          EOF

    - name: "modify limits"
      lineinfile:
        path: /etc/security/limits.conf
        insertafter: EOF
        state: present
        line: "{{ item }}"
      with_items:
        - "* soft nofile 1024000"
        - "* hard nofile 1024000"
        - "* soft memlock unlimited"
        - "* hard memlock unlimited"
        - "root soft nofile 1024000"
        - "root hard nofile 1024000"
        - "root soft memlock unlimited"

    - name: "enable kernel file"
      shell: sysctl -p /etc/sysctl.d/k8s.conf

    - name: "add ipvs modules"
      shell:
        cmd: |
          cat > /etc/sysconfig/modules/ipvs.modules << EOF
          #!/bin/bash
          modprobe -- ip_vs
          modprobe -- ip_vs_rr
          modprobe -- ip_vs_wrr
          modprobe -- ip_vs_sh
          modprobe -- nf_conntrack
          EOF

    # Quote the mode: an unquoted 755 is read as a decimal number, not octal
    - name: "chmod ipvs modules"
      file:
        path: /etc/sysconfig/modules/ipvs.modules
        mode: "0755"

    - name: "enable ipvs modules"
      shell: bash /etc/sysconfig/modules/ipvs.modules

    - name: "mkdir docker proxy"
      file:
        path: /etc/systemd/system/docker.service.d
        state: directory

    - name: "mkdir docker volume"
      file:
        path: /etc/docker
        state: directory

    # ">" overwrites the file; appending on a second run would leave invalid JSON
    - name: "add docker daemon"
      shell:
        cmd: |
          cat > /etc/docker/daemon.json << EOF
          {
            "exec-opts": ["native.cgroupdriver=systemd"],
            "registry-mirrors": ["https://dockerproxy.com"],
            "oom-score-adjust": -1000,
            "insecure-registries": ["harbor.rubikstack.com"],
            "log-driver": "json-file",
            "log-opts": {
              "max-size": "300m",
              "max-file": "10"
            },
            "max-concurrent-downloads": 10,
            "max-concurrent-uploads": 10
          }
          EOF

    - name: "reload"
      shell: systemctl daemon-reload

    - name: "install yum-utils"
      yum:
        name: yum-utils
        state: present

    - name: "add docker repo"
      shell: yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

    - name: "add k8s repo"
      shell:
        cmd: |
          cat > /etc/yum.repos.d/kubernetes.repo << EOF
          [kubernetes]
          name=Kubernetes
          baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
          enabled=1
          gpgcheck=0
          repo_gpgcheck=0
          gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
                 http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
          EOF

    - name: "install docker-ce"
      yum:
        name: docker-ce-19.03.15-3.el8
        state: present

    - name: "install docker-ce-cli"
      yum:
        name: docker-ce-cli-19.03.15-3.el8
        state: present

    - name: "install containerd.io"
      yum:
        name: containerd.io
        state: present

    - name: "install app"
      yum:
        name: "{{ item }}"
        state: present
      loop:
        - ipset
        - ipvsadm
        - vim
        - git
        - net-tools
        - wget
        - unzip
        - telnet*
        - gzip
        - nmap-ncat
        - socat
        - conntrack
        - bash-completion

    - name: "update systemd"
      yum:
        name: "systemd"
        state: latest

    - name: "enable docker"
      service:
        name: docker
        enabled: yes
        state: started

    - name: copy kubeadm
      # A self-compiled kubeadm is used here; if you do not have one, the default kubeadm is fine.
      copy:
        src: "/root/kubeadm"
        dest: "/root"
        owner: root
        group: root
        mode: "0755"

Run

ansible-playbook init.yml

k8s deployment script
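The deployment playbook saves the output of `kubeadm init` to token.txt and then derives master.sh and node.sh from it with two grep pipelines. The sketch below replays those pipelines on a made-up excerpt of that output, to show which lines each one keeps. The token, hash and certificate key are placeholders, and the excerpt is an assumption about the usual shape of the output rather than a captured log.

```shell
#!/bin/sh
tmp=$(mktemp -d)

# Placeholder excerpt of the end of `kubeadm init --upload-certs` output.
cat > "$tmp/token.txt" << 'EOF'
You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 10.2.10.125:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0000 \
    --control-plane --certificate-key 1111

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.2.10.125:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0000
EOF

# Control-plane join: the --certificate-key line plus the two lines before it.
# The "Please note ..." sentence also mentions certificate-key, so it is dropped.
grep -B2 'certificate-key' "$tmp/token.txt" | grep -v note > "$tmp/master.sh"

# Worker join: the last "kubeadm join" line and its continuation line.
grep -A1 'kubeadm join' "$tmp/token.txt" | tail -2 > "$tmp/node.sh"

echo "== master.sh =="; cat "$tmp/master.sh"
echo "== node.sh ==";   cat "$tmp/node.sh"
```

Because both files come from text matching, it is worth opening master.sh and node.sh once after the first run to confirm that each holds one complete join command.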

---
- hosts: master
  remote_user: root
  gather_facts: no
  any_errors_fatal: no
  vars:
    POD_CIDR: 10.43.0.0/16
    MASTER_VIP: 10.2.10.125
    VIP_IF: ens192
    SOURCE_DIR: /root
    MASTER1: 10.2.10.101
    MASTER2: 10.2.10.102
    MASTER3: 10.2.10.103
  tasks:
    - name: install the command-line tools
      yum:
        name: "{{ item }}"
        state: present
        enablerepo: kubernetes
      loop:
        - kubelet-1.20.15
        - kubectl-1.20.15
        - kubeadm-1.20.15

    - name: "enable kubelet"
      service:
        name: kubelet
        enabled: yes
        state: started

    - name: cluster init preparation 1
      # If you use the default kubeadm, change this to: kubeadm reset -f
      shell: "/root/kubeadm reset -f"

    - name: cluster init preparation 2
      shell: "systemctl daemon-reload && systemctl restart kubelet"

    - name: cluster init preparation 3
      shell: "iptables -F && ipvsadm --clear"

    - name: pull the kube-vip image
      shell: "docker pull plndr/kube-vip:v0.5.5"

    # Writes the kube-vip static pod manifest; kubelet starts it from /etc/kubernetes/manifests
    - name: start kube-vip
      shell:
        cmd: |
          export VIP={{ MASTER_VIP }}
          export INTERFACE={{ VIP_IF }}
          export master1={{ MASTER1 }}
          export master2={{ MASTER2 }}
          export master3={{ MASTER3 }}
          mkdir -p /etc/kubernetes/manifests
          docker run --rm plndr/kube-vip:v0.5.5 manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --arp --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

    - name: initialize the cluster
      shell: "/root/kubeadm init --config={{ SOURCE_DIR }}/kubeadm-config.yaml --upload-certs &>{{ SOURCE_DIR }}/token.txt"

    - name: extract the join command for masters
      shell: "grep -B2 'certificate-key' {{ SOURCE_DIR }}/token.txt | grep -v note > {{ SOURCE_DIR }}/master.sh"

    - name: extract the join command for nodes
      shell: "grep -A1 'kubeadm join' {{ SOURCE_DIR }}/token.txt |tail -2 > {{ SOURCE_DIR }}/node.sh"

    # copy reads src from the machine running ansible-playbook,
    # so these tasks assume the playbook is run on master1
    - name: copy master.sh
      copy:
        src: "{{ SOURCE_DIR }}/master.sh"
        dest: "{{ SOURCE_DIR }}"
        owner: root
        group: root
        mode: "0755"

    - name: copy node.sh
      copy:
        src: "{{ SOURCE_DIR }}/node.sh"
        dest: "{{ SOURCE_DIR }}"
        owner: root
        group: root
        mode: "0755"

    - name: create the $HOME/.kube directory
      file: name=$HOME/.kube state=directory

    - name: copy the kubeconfig
      copy: src=/etc/kubernetes/admin.conf dest=$HOME/.kube/config owner=root group=root

- hosts: add_master
  remote_user: root
  gather_facts: no
  any_errors_fatal: no
  vars:
    POD_CIDR: 10.43.0.0/16
    MASTER_VIP: 10.2.10.125
    VIP_IF: ens192
    SOURCE_DIR: /root
    MASTER1: 10.2.10.101
    MASTER2: 10.2.10.102
    MASTER3: 10.2.10.103
  tasks:
    - name: install the command-line tools
      yum:
        name: "{{ item }}"
        state: present
        enablerepo: kubernetes
      loop:
        - kubelet-1.20.15
        - kubectl-1.20.15
        - kubeadm-1.20.15

    - name: "enable kubelet"
      service:
        name: kubelet
        enabled: yes
        state: started

    - name: cluster init preparation 1
      # If you use the default kubeadm, change this to: kubeadm reset -f
      shell: "/root/kubeadm reset -f"

    - name: cluster init preparation 2
      shell: "systemctl daemon-reload && systemctl restart kubelet"

    - name: cluster init preparation 3
      shell: "iptables -F && ipvsadm --clear"

    - name: download vip image
      shell: "docker pull plndr/kube-vip:v0.5.5"

    - name: kube-vip
      shell:
        cmd: |
          export VIP={{ MASTER_VIP }}
          export INTERFACE={{ VIP_IF }}
          export master1={{ MASTER1 }}
          export master2={{ MASTER2 }}
          export master3={{ MASTER3 }}
          mkdir -p /etc/kubernetes/manifests

    - name: join the cluster as a master
      script: "{{ SOURCE_DIR }}/master.sh"

    # Copy the kube-vip manifest generated on the first master
    - name: start vip
      copy: src=/etc/kubernetes/manifests/kube-vip.yaml dest=/etc/kubernetes/manifests owner=root group=root

- hosts: add_node
  remote_user: root
  gather_facts: no
  any_errors_fatal: no
  vars:
    SOURCE_DIR: /root
  tasks:
    - name: install the command-line tools
      yum:
        name: "{{ item }}"
        state: present
        enablerepo: kubernetes
      loop:
        - kubelet-1.20.15
        - kubeadm-1.20.15

    - name: "enable kubelet"
      service:
        name: kubelet
        enabled: yes
        state: started

    - name: cluster init preparation 1
      # If you use the default kubeadm, change this to: kubeadm reset -f
      shell: "/root/kubeadm reset -f"

    - name: cluster init preparation 2
      shell: "systemctl daemon-reload && systemctl restart kubelet"

    - name: cluster init preparation 3
      shell: "iptables -F && ipvsadm --clear"

    - name: join the cluster as a node
      script: "{{ SOURCE_DIR }}/node.sh"

- hosts: master
  remote_user: root
  gather_facts: no
  any_errors_fatal: no
  tasks:
    - name: deploy calico
      shell: "kubectl apply -f /root/calico.yaml"

kubeadm configuration

cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
# High-availability address (the VIP)
controlPlaneEndpoint: "10.2.10.125:6443"
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# Must match the k8s cluster version
kubernetesVersion: v1.20.15
scheduler:
  extraArgs:
    port: "10251"
# Disable the ManagedFields field when exporting YAML
apiServer:
  extraArgs:
    feature-gates: ServerSideApply=false
networking:
  # Keep consistent with the original cluster
  # set podSubnet network, same as calico network in calico.yaml
  podSubnet: "10.43.0.0/16"
---
# Open IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

calico

curl -O https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/calico.yaml

Run

kubeadm-config.yaml and calico.yaml need to be placed under /root.
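The kubeadm configuration notes that podSubnet should be the same as the Calico network in calico.yaml. In the Calico manifest the pool CIDR is a commented-out `CALICO_IPV4POOL_CIDR` entry, so if you want to set it explicitly it has to be uncommented and changed. A minimal sketch of that edit follows, run here against a small excerpt rather than the real file; the function name `set_calico_pool_cidr` is hypothetical, and the excerpt assumes the entry looks the way it does in the v3.24.1 manifest.

```shell
#!/bin/sh
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in file $1 and set it to $2.
set_calico_pool_cidr() {
  sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
      -e "s|#   value: \"192.168.0.0/16\"|  value: \"$2\"|" "$1" > "$1.tmp" &&
    mv "$1.tmp" "$1"
}

# Excerpt standing in for /root/calico.yaml (assumed layout, same indentation).
tmp=$(mktemp -d)
cat > "$tmp/calico.yaml" << 'EOF'
            - name: CALICO_IPV4POOL_VXLAN
              value: "Never"
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
EOF

set_calico_pool_cidr "$tmp/calico.yaml" 10.43.0.0/16

# Show the resulting entry; it should line up with the other env entries.
grep -A1 'CALICO_IPV4POOL_CIDR' "$tmp/calico.yaml"
```

On the real file the call would be `set_calico_pool_cidr /root/calico.yaml 10.43.0.0/16`; diffing the result against the downloaded copy before applying it is a cheap way to confirm that only those two lines changed.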

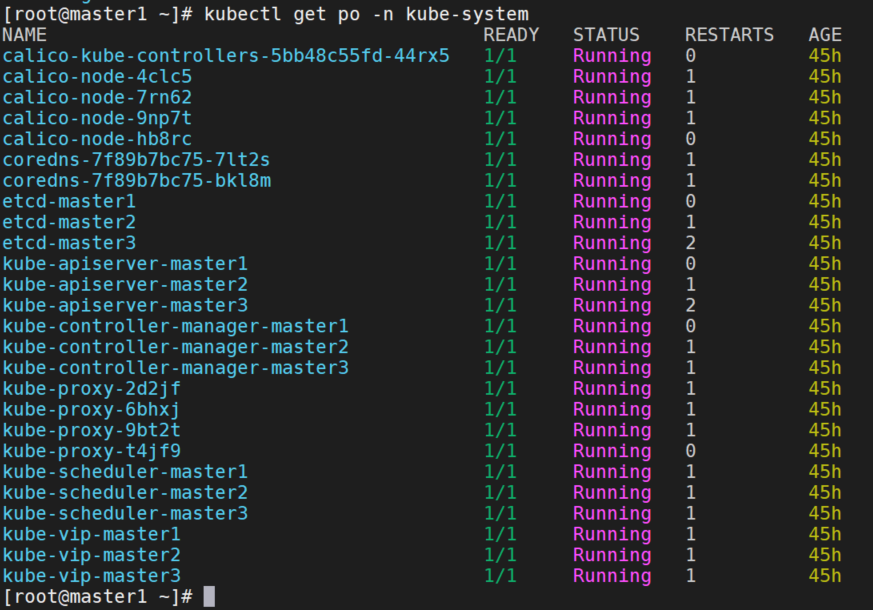

ansible-playbook k8s.yml

After the playbook finishes, check the cluster to confirm that the deployment is complete.
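One way to do that check from a script is to count the nodes that report `Ready`. The sketch below is an illustration under assumptions: `count_ready` is a hypothetical helper, and the sample text imitates `kubectl get nodes --no-headers` output with made-up node names and ages.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print how many nodes have STATUS exactly "Ready".
count_ready() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Made-up sample output: three masters ready, one node still coming up.
sample='master1   Ready      control-plane,master   10m   v1.20.15
master2   Ready      control-plane,master   8m    v1.20.15
master3   Ready      control-plane,master   7m    v1.20.15
node1     NotReady   <none>                 5m    v1.20.15'

ready=$(printf '%s\n' "$sample" | count_ready)
echo "ready nodes: $ready"
```

Against a live cluster the same function would be fed with `kubectl get nodes --no-headers | count_ready`. Nodes usually stay `NotReady` until the Calico pods are running, so a lower count right after the playbook ends is not necessarily a failure.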