Environment

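All three clusters have been upgraded to Kubernetes 1.24.14 (see here for the upgrade steps). The multi-cluster setup was deployed with Karmada, and the clusters can reach each other.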

- master1:172.16.255.183

- master2:172.16.255.181

- master3:172.16.255.182

Istio multicluster

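When deploying across multiple clusters, you need to think about Istio's deployment model. Will the mesh be confined to a single cluster or spread across several? Will all services live in a single, fully connected network, or are gateways needed to connect services across networks? Is there a single control plane (possibly shared between clusters), or are multiple control planes deployed for high availability (HA)? If you deploy multiple clusters (more specifically, in isolated networks), should they be joined into a single multicluster service mesh, or federated into a multi-mesh deployment? Each of these questions is an independent configuration dimension of an Istio deployment: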

- Single or multiple clusters

- Single or multiple networks

- Single or multiple control planes

- Single or multiple meshes

Choosing the right deployment model depends on your requirements for isolation, performance and HA.

Concretely, the installation options are:

- Multi-primary

- Primary-remote

- Multi-primary on different networks

- Primary-remote on different networks

Preparation

Clusters

I'm using Istio 1.18 here. You need at least two Kubernetes clusters running version 1.24 or later.

API Server Access

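The API server in each cluster must be reachable from the other clusters in the mesh. If an API server cannot be reached directly, the installation procedure has to be adjusted to allow access. For example, the east-west gateway used in the multi-network and primary-remote configurations can also be used to expose the API server.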
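A minimal sketch for checking this requirement from each master, assuming the API servers listen on the kubeadm default port 6443 at the master IPs listed above. It uses bash's /dev/tcp redirection, so it only shows that the TCP port is reachable, not that TLS or credentials are accepted. The helper name check_apiserver is hypothetical.

```bash
#!/usr/bin/env bash
# Probe a kube-apiserver endpoint with a plain TCP connect.
# Assumption: kubeadm default port 6443 unless another port is passed.
check_apiserver() {
  local host=$1 port=${2:-6443}
  # /dev/tcp/<host>/<port> is a bash redirection that opens a TCP socket;
  # timeout aborts the attempt after 3 seconds if packets are silently dropped.
  if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "${host}:${port} reachable"
  else
    echo "${host}:${port} UNREACHABLE"
    return 1
  fi
}

# Usage, on each master:
#   for ip in 172.16.255.183 172.16.255.181 172.16.255.182; do check_apiserver "$ip"; done
```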

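Environment variables

Set up a kubeconfig context for each cluster. This is optional; you can also specify the context manually on each kubectl command.

```bash
export CTX_CLUSTER1=<your cluster1 context>
export CTX_CLUSTER2=<your cluster2 context>
```

Configure trust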

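A multicluster service mesh deployment requires that you establish trust between all clusters in the mesh. Depending on your system requirements, there are several ways to establish trust.

If you plan to deploy only one primary cluster (that is, a primary-remote deployment), you will have a single CA (istiod on cluster1) issuing certificates for both clusters. In that case you can skip the CA certificate generation steps below and simply install with the default self-signed CA.

If you currently have a standalone cluster with a self-signed CA (as described in Getting Started), you need to change the CA using one of the methods described in Certificate Management. Changing the CA typically requires reinstalling Istio.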

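Download Istio

Official download page: https://github.com/istio/istio/releases/tag/1.18.0 . You can also use the automated script, which downloads and extracts a release for you.

```bash
# Needed on every cluster.
# Option 1: the download script (ISTIO_VERSION pins the release; without it the script fetches the latest one)
curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.18.0 sh -

# Option 2: download and extract the full release package manually
wget https://github.com/istio/istio/releases/download/1.18.0/istio-1.18.0-linux-amd64.tar.gz
tar xvf istio-1.18.0-linux-amd64.tar.gz

cd istio-1.18.0
export PATH=$PWD/bin:$PATH
```

The installation directory contains:

- samples/: the sample applications

- bin/: the istioctl client binary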
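Note that the istioctl-only archive (istioctl-1.18.0-linux-amd64.tar.gz) contains just the binary, while the later steps also need samples/ and tools/ from the full package. A small sketch that builds the full-package URL, assuming the istio-<version>-<os>-<arch>.tar.gz asset naming used by the 1.18.0 release; both helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Build the full release-package URL (not the istioctl-only archive).
# Assumption: asset naming istio-<version>-<os>-<arch>.tar.gz, as for 1.18.0 linux-amd64.
istio_archive_url() {
  local version=$1 os=${2:-linux} arch=${3:-amd64}
  if [ -z "$version" ]; then
    echo "usage: istio_archive_url <version> [os] [arch]" >&2
    return 1
  fi
  printf 'https://github.com/istio/istio/releases/download/%s/istio-%s-%s-%s.tar.gz\n' \
    "$version" "$version" "$os" "$arch"
}

# Download and unpack into ./istio-<version>, then put its bin/ on PATH.
install_istio_release() {
  local version=$1 url
  url=$(istio_archive_url "$version") || return 1
  curl -fsSL "$url" | tar xz || return 1
  export PATH="$PWD/istio-${version}/bin:$PATH"
}

# Usage: install_istio_release 1.18.0 && istioctl version --remote=false
```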

istioctl命令补全

istioctl completion bash > /etc/bash_completion.d/istioctl

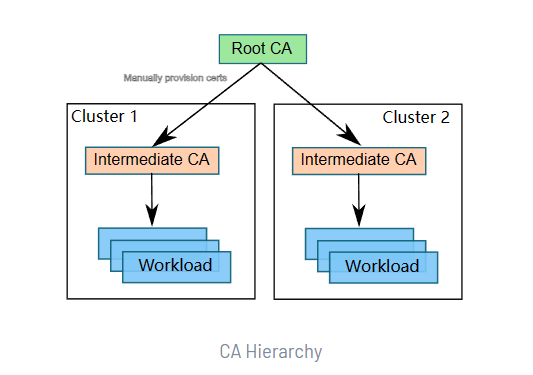

source /etc/bash_completion.d/istioctl证书管理

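By default, the Istio CA generates a self-signed root certificate and key and uses them to sign workload certificates. To protect the root CA key, you should use a root CA that runs offline on a secure machine, and use it to issue intermediate certificates to the Istio CA running in each cluster. The Istio CA can then sign workload certificates with the administrator-specified certificate and key, and distribute the administrator-specified root certificate to workloads as the root of trust.

Plug certificates and keys into the cluster

For production, the official documentation strongly recommends a production-ready CA such as HashiCorp Vault. Here I simply generate the certificates locally.

Go 1.18 disables SHA-1 signature support by default. If you generate the certificates on macOS, make sure you are using OpenSSL. See GitHub issue 38049 for details.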

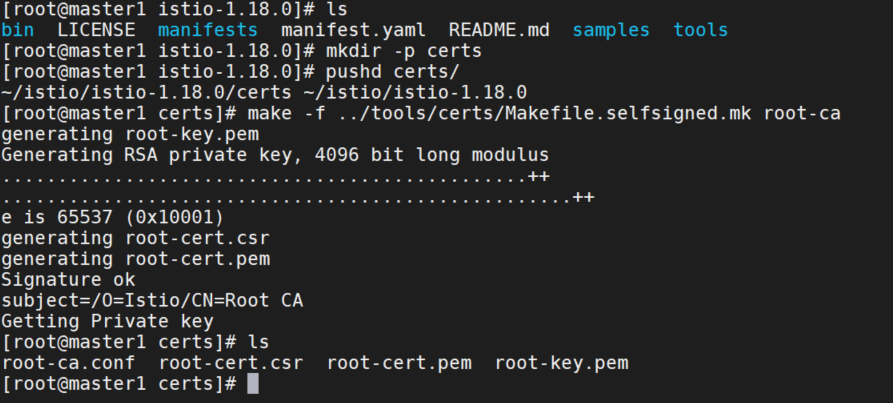

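Generate the root certificate following the official documentation:

```bash
# On every cluster: create a directory for certificates and keys in the top-level directory of the Istio package
mkdir -p certs
pushd certs

# On member1 only: generate the root certificate and key
make -f ../tools/certs/Makefile.selfsigned.mk root-ca
```

This generates the following files:

- root-cert.pem: the generated root certificate

- root-key.pem: the generated root key

- root-ca.conf: the OpenSSL configuration used to generate the root certificate

- root-cert.csr: the CSR generated for the root certificate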

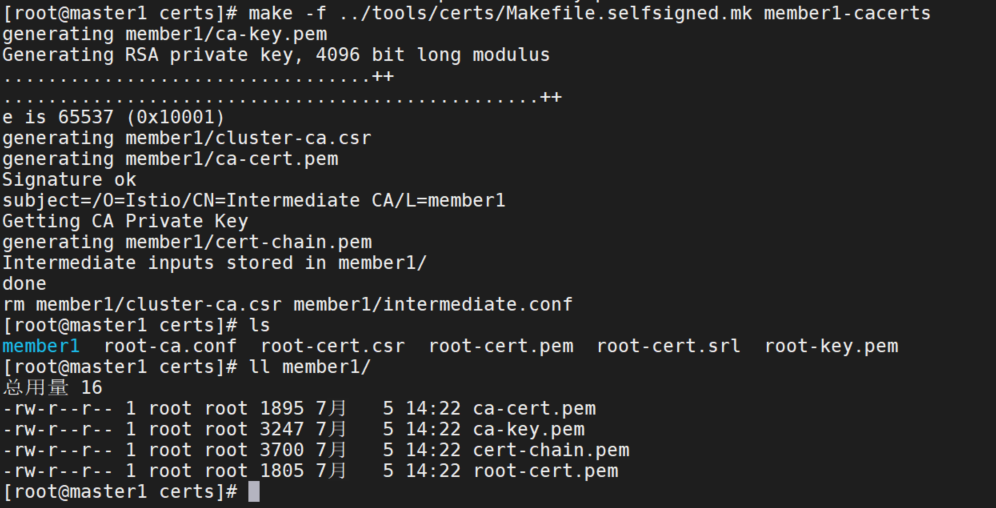

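For each cluster, generate an intermediate certificate and key for the Istio CA (on member1, where the root key lives):

```bash
make -f ../tools/certs/Makefile.selfsigned.mk member1-cacerts
```

This creates the following files in a directory named member1:

- ca-cert.pem: the generated intermediate certificate

- ca-key.pem: the generated intermediate key

- cert-chain.pem: the generated certificate chain used by istiod

- root-cert.pem: the root certificate

Do the same for member2 and member3:

```bash
make -f ../tools/certs/Makefile.selfsigned.mk member2-cacerts
make -f ../tools/certs/Makefile.selfsigned.mk member3-cacerts
```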

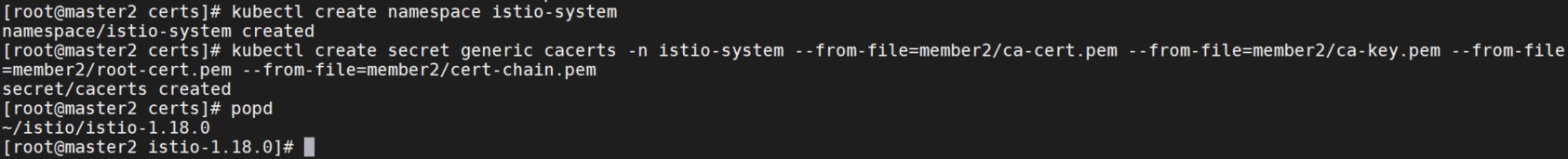
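For readers who want to see roughly what these Makefile targets do, here is a plain OpenSSL sketch that produces the same four files for one cluster. It is not the Makefile's exact recipe: the 2048-bit keys, validity periods and subject fields are illustrative assumptions, and the Makefile's own defaults may differ. Signatures use SHA-256, in line with the SHA-1 note above. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Root CA: self-signed, CA:TRUE, SHA-256. Writes root-ca.conf, root-key.pem, root-cert.pem.
make_root_ca() {
  cat > root-ca.conf <<'EOF'
[req]
distinguished_name = dn
prompt = no
x509_extensions = v3_ca
[dn]
O = Istio
CN = Root CA
[v3_ca]
basicConstraints = critical, CA:TRUE
keyUsage = critical, digitalSignature, keyCertSign, cRLSign
subjectKeyIdentifier = hash
EOF
  openssl req -x509 -new -nodes -newkey rsa:2048 -sha256 -days 3650 \
    -config root-ca.conf -keyout root-key.pem -out root-cert.pem
}

# Intermediate CA for one cluster, signed by the root. Writes <cluster>/{ca-cert,ca-key,root-cert,cert-chain}.pem
make_intermediate_ca() {
  local cluster=$1
  mkdir -p "$cluster" || return 1
  cat > "$cluster/ca.conf" <<EOF
[req]
distinguished_name = dn
prompt = no
[dn]
O = Istio
CN = Intermediate CA
L = ${cluster}
[v3_int]
basicConstraints = critical, CA:TRUE, pathlen:0
keyUsage = critical, digitalSignature, keyCertSign, cRLSign
subjectKeyIdentifier = hash
EOF
  openssl req -new -nodes -newkey rsa:2048 -sha256 -config "$cluster/ca.conf" \
    -keyout "$cluster/ca-key.pem" -out "$cluster/ca-cert.csr" || return 1
  openssl x509 -req -sha256 -days 730 -in "$cluster/ca-cert.csr" \
    -CA root-cert.pem -CAkey root-key.pem -CAcreateserial \
    -extfile "$cluster/ca.conf" -extensions v3_int -out "$cluster/ca-cert.pem" || return 1
  cp root-cert.pem "$cluster/root-cert.pem" || return 1
  # istiod expects the chain ordered intermediate first, then root.
  cat "$cluster/ca-cert.pem" root-cert.pem > "$cluster/cert-chain.pem"
}

# Usage: make_root_ca && make_intermediate_ca member1 && openssl verify -CAfile root-cert.pem member1/ca-cert.pem
```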

```bash
# Copy the member2 and member3 directories into the Istio certs directory on the corresponding clusters
scp -r member2 172.16.255.181:/root/istio/istio-1.18.0/certs
scp -r member3 172.16.255.182:/root/istio/istio-1.18.0/certs
```

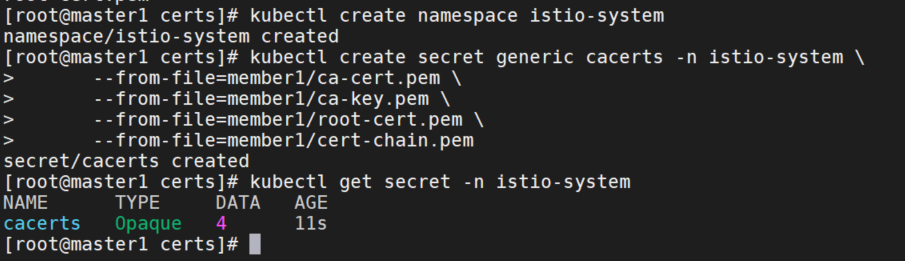

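In each cluster, create a secret named cacerts containing all of the input files ca-cert.pem, ca-key.pem, root-cert.pem and cert-chain.pem. The commands below are for member1 and are run from the certs directory; on member2 and member3, use that cluster's own directory instead of member1.

```bash
kubectl create namespace istio-system
kubectl create secret generic cacerts -n istio-system \
  --from-file=member1/ca-cert.pem \
  --from-file=member1/ca-key.pem \
  --from-file=member1/root-cert.pem \
  --from-file=member1/cert-chain.pem
```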
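Because member2 and member3 must each use their own directory, a small guard helps avoid creating an incomplete or wrong secret. A sketch, assuming it is run from the certs directory; the helper name create_cacerts_secret is hypothetical. The namespace is created idempotently so the function can be re-run.

```bash
#!/usr/bin/env bash
# Create the cacerts secret from one cluster's directory (member1, member2 or member3),
# refusing to run if any of the four files Istio expects is missing or empty.
create_cacerts_secret() {
  local dir=$1 f
  for f in ca-cert.pem ca-key.pem root-cert.pem cert-chain.pem; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      return 1
    fi
  done
  # Idempotent namespace creation: render it client-side, then apply.
  kubectl create namespace istio-system --dry-run=client -o yaml | kubectl apply -f - || return 1
  kubectl create secret generic cacerts -n istio-system \
    --from-file="$dir/ca-cert.pem" \
    --from-file="$dir/ca-key.pem" \
    --from-file="$dir/root-cert.pem" \
    --from-file="$dir/cert-chain.pem"
}

# Usage on member2: create_cacerts_secret member2
```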

Install Istio

Deploy Istio with the demo profile. Istio's CA reads the certificates and key from the secret-mounted files.

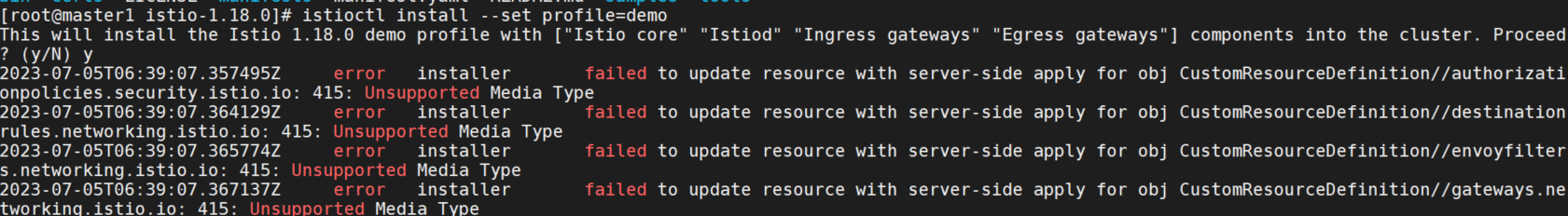

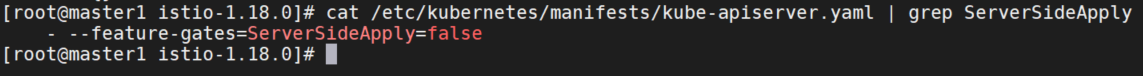

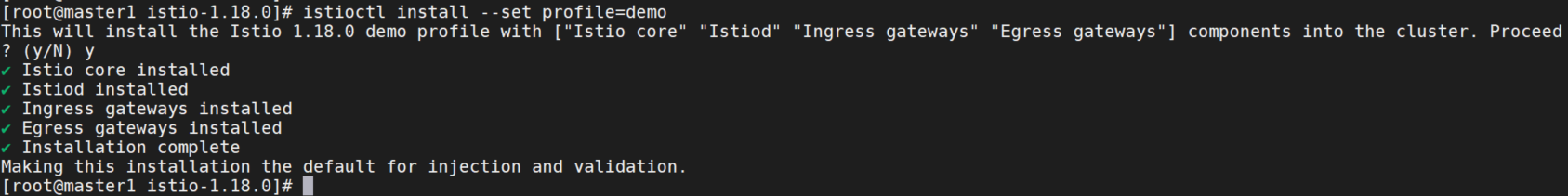

```bash
istioctl install --set profile=demo
```

The installation failed with:

```text
error installer failed to update resource with server-side apply for obj CustomResourceDefinition//authorizationpolicies.security.istio.io: 415: Unsupported Media Type
```

This happens because the API server was started with --feature-gates=ServerSideApply=false. Server-side apply is enabled by default (see https://kubernetes.io/zh-cn/docs/reference/using-api/server-side-apply/ ); I had disabled it, so it has to be turned back on.

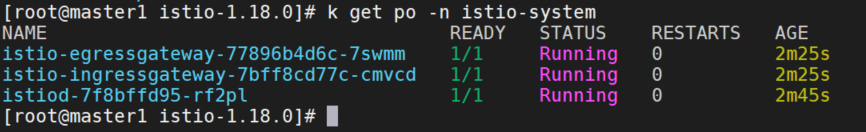

Then run the installation again.
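On a kubeadm cluster this flag lives in the kube-apiserver static pod manifest, and kubelet restarts the API server when that file changes. A sketch of the edit, assuming the default kubeadm manifest path and that the gate is written literally as ServerSideApply=false; the helper name is hypothetical. Run it on every control-plane node where the flag was set.

```bash
#!/usr/bin/env bash
# Re-enable ServerSideApply in a kube-apiserver static pod manifest.
# Assumption: kubeadm layout and a literal "ServerSideApply=false" entry in --feature-gates.
enable_server_side_apply() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}
  # Keep the backup OUTSIDE the manifests directory; kubelet may otherwise try to run it as a pod.
  cp "$manifest" "/tmp/$(basename "$manifest").bak" || return 1
  # Flip only this gate; other gates in the same comma-separated list are left untouched.
  sed -i 's/ServerSideApply=false/ServerSideApply=true/' "$manifest"
}

# Usage: enable_server_side_apply, then wait for the kube-apiserver pod to come back before reinstalling.
```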

Deploy the sample services

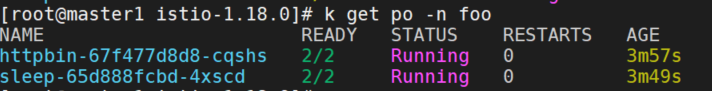

Deploy the httpbin and sleep sample services.

```bash
kubectl create ns foo
kubectl apply -f <(istioctl kube-inject -f samples/httpbin/httpbin.yaml) -n foo
kubectl apply -f <(istioctl kube-inject -f samples/sleep/sleep.yaml) -n foo
```

Deploy a policy for the workloads in the foo namespace so that they only accept mutual TLS traffic.

```bash
kubectl apply -n foo -f - <<EOF
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: "default"
spec:
  mtls:
    mode: STRICT
EOF
```

Verify the certificates

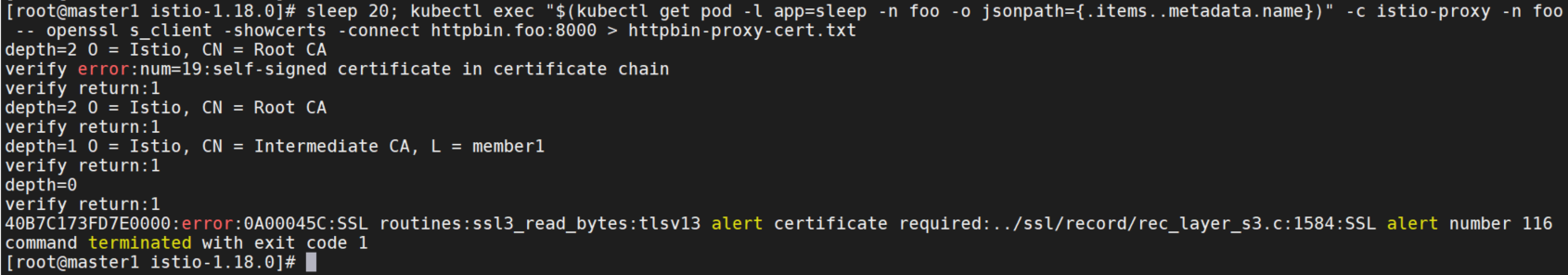

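Verify that the workload certificates are signed by the certificates that were plugged into the CA. This requires openssl to be installed on the machine.

Wait 20 seconds for the mTLS policy to take effect before retrieving the certificate chain of httpbin. Because the CA certificate used here is self-signed, the openssl command is expected to return verify error:num=19:self signed certificate in certificate chain.

```bash
sleep 20; kubectl exec "$(kubectl get pod -l app=sleep -n foo -o jsonpath={.items..metadata.name})" -c istio-proxy -n foo -- openssl s_client -showcerts -connect httpbin.foo:8000 > httpbin-proxy-cert.txt
```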

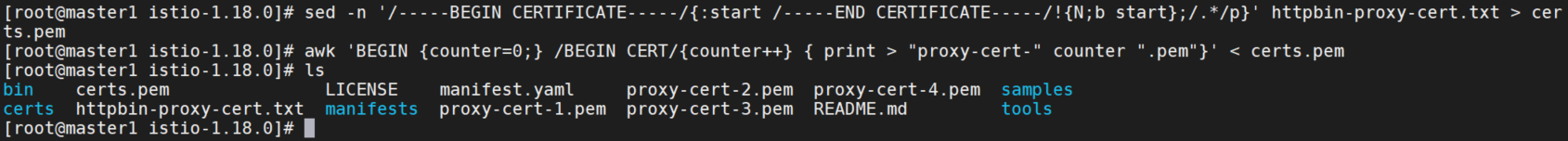

Parse the certificates in the chain.

```bash
sed -n '/-----BEGIN CERTIFICATE-----/{:start /-----END CERTIFICATE-----/!{N;b start};/.*/p}' httpbin-proxy-cert.txt > certs.pem
awk 'BEGIN {counter=0;} /BEGIN CERT/{counter++} { print > "proxy-cert-" counter ".pem"}' < certs.pem
```
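The sed and awk steps can also be done in a single awk pass that skips the s_client output between certificates. A sketch producing the same proxy-cert-N.pem file names; the function name split_pem_chain is hypothetical.

```bash
#!/usr/bin/env bash
# Split every PEM certificate in an `openssl s_client -showcerts` dump into
# proxy-cert-1.pem, proxy-cert-2.pem, ... (leaf first, in the order s_client prints them).
split_pem_chain() {
  awk '
    /-----BEGIN CERTIFICATE-----/ { n++; inside = 1 }
    inside                        { print > ("proxy-cert-" n ".pem") }
    /-----END CERTIFICATE-----/   { inside = 0; close("proxy-cert-" n ".pem") }
  ' "$1"
}

# Usage: split_pem_chain httpbin-proxy-cert.txt
```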

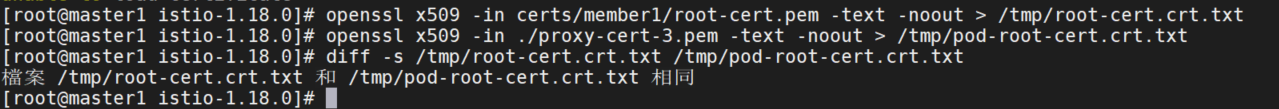

Confirm that the root certificate is the same as the one specified by the administrator:

```bash
openssl x509 -in certs/member1/root-cert.pem -text -noout > /tmp/root-cert.crt.txt
openssl x509 -in ./proxy-cert-3.pem -text -noout > /tmp/pod-root-cert.crt.txt
diff -s /tmp/root-cert.crt.txt /tmp/pod-root-cert.crt.txt
```

验证CA证书与管理员指定的证书是否相同:

openssl x509 -in certs/member1/ca-cert.pem -text -noout > /tmp/ca-cert.crt.txt

openssl x509 -in ./proxy-cert-2.pem -text -noout > /tmp/pod-cert-chain-ca.crt.txt

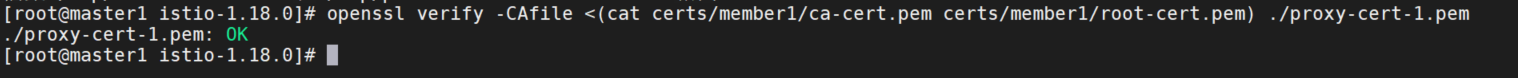

diff -s /tmp/ca-cert.crt.txt /tmp/pod-cert-chain-ca.crt.txt验证从根证书到工作负载证书的证书链:

openssl verify -CAfile <(cat certs/member1/ca-cert.pem certs/member1/root-cert.pem) ./proxy-cert-1.pem
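Diffing the full -text dumps works; comparing SHA-256 fingerprints gives a shorter yes/no answer. A sketch; same_cert is a hypothetical helper, and both fingerprints are computed by the same openssl binary, so its output format does not matter.

```bash
#!/usr/bin/env bash
# Compare two PEM certificates by SHA-256 fingerprint.
# Returns 0 if identical, 1 if different, 2 if either file cannot be parsed.
same_cert() {
  local a b
  a=$(openssl x509 -in "$1" -noout -fingerprint -sha256) || return 2
  b=$(openssl x509 -in "$2" -noout -fingerprint -sha256) || return 2
  if [ "$a" = "$b" ]; then
    echo "identical: $1 $2"
  else
    echo "DIFFERENT: $1 $2"
    return 1
  fi
}

# Usage:
#   same_cert certs/member1/root-cert.pem ./proxy-cert-3.pem
#   same_cert certs/member1/ca-cert.pem   ./proxy-cert-2.pem
```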

The CA certificate and key were plugged in successfully.

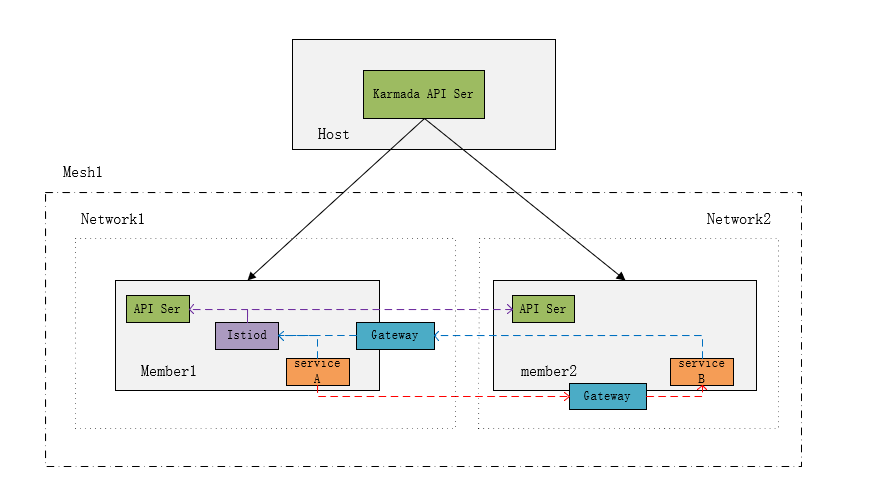

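Multicluster installation

In my setup, pods in different clusters can communicate through Submariner, so all four installation modes would work. Here I describe the multi-primary installation on different networks.

Install the Istio control plane on each of member1 to member3 and configure each one as a primary cluster. Service workloads communicate across cluster boundaries indirectly, through dedicated east-west gateways. The gateway in each cluster must be reachable from the other clusters.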

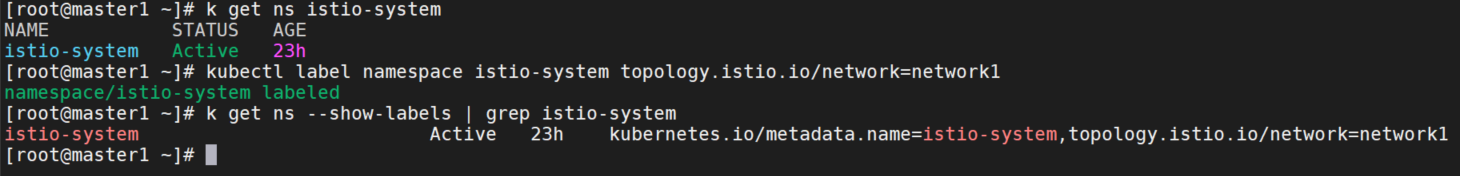

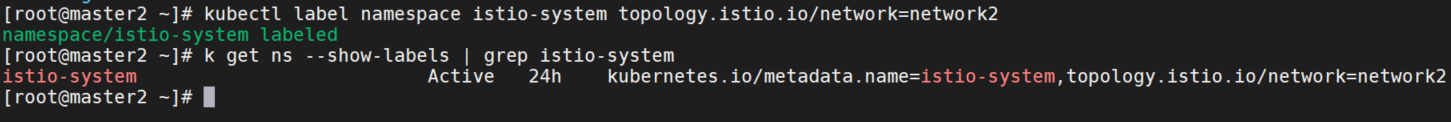

Set the default network for member1

Istio and the istio-system namespace were already deployed above, so only the label needs to be added.

```bash
kubectl label namespace istio-system topology.istio.io/network=network1
```

将member1设为主集群

创建Istio配置文件:

cat <<EOF > member1.yaml

apiVersion: install.istio.io/v1alpha1

kind: IstioOperator

spec:

values:

global:

meshID: mesh1

multiCluster:

clusterName: member1

network: network1

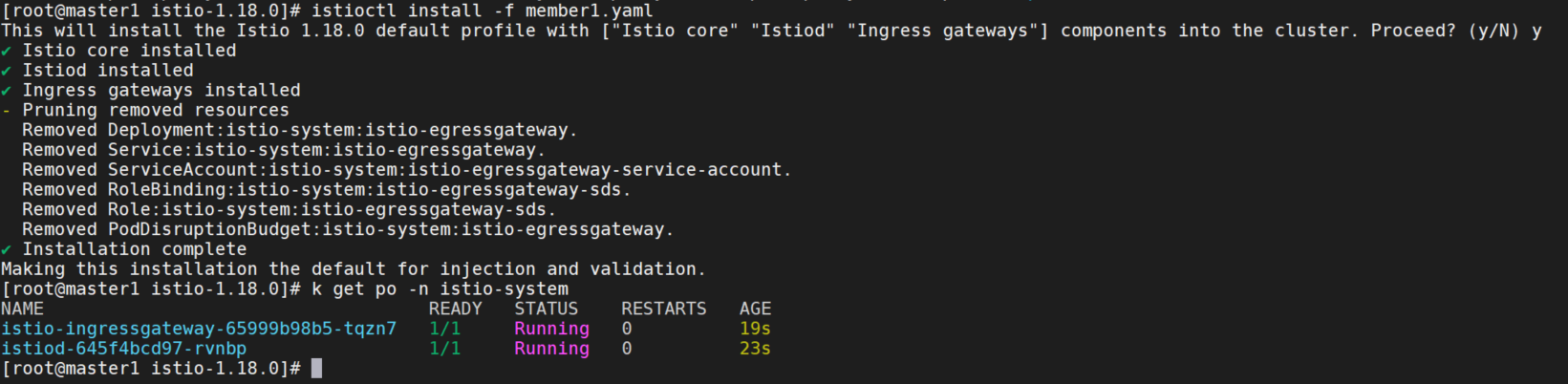

EOF将配置文件应用到member1:

istioctl install -f member1.yaml

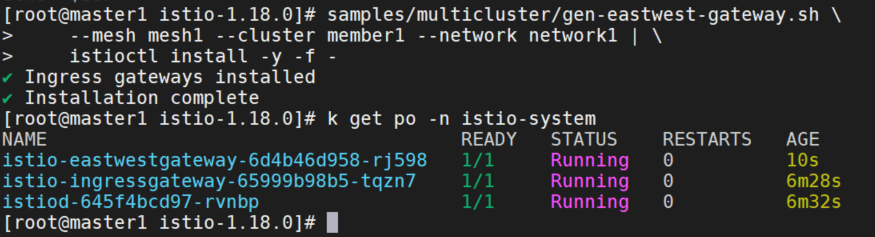
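member2 and member3 need the same file with only clusterName and network changed. A sketch of a generator that renders it; render_istio_operator is a hypothetical helper, and mesh1 is the mesh ID used throughout this article.

```bash
#!/usr/bin/env bash
# Render the per-cluster IstioOperator used in this walkthrough.
# Only clusterName and network differ between member1, member2 and member3.
render_istio_operator() {
  local cluster=$1 network=$2 mesh=${3:-mesh1}
  cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      meshID: ${mesh}
      multiCluster:
        clusterName: ${cluster}
      network: ${network}
EOF
}

# Usage on member2:
#   render_istio_operator member2 network2 > member2.yaml && istioctl install -f member2.yaml
```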

在member1安装东西向网关

在member1安装专用的东西向网关。 默认情况下,此网关将被公开到互联网上。生产系统可能需要添加额外的访问限制(即:通过防火墙规则)来防止外部攻击。

samples/multicluster/gen-eastwest-gateway.sh \

--mesh mesh1 --cluster member1 --network network1 | \

istioctl install -y -f -

我这里没有部署metalb或者openelb,所以修改loadbalancer为nodeport。

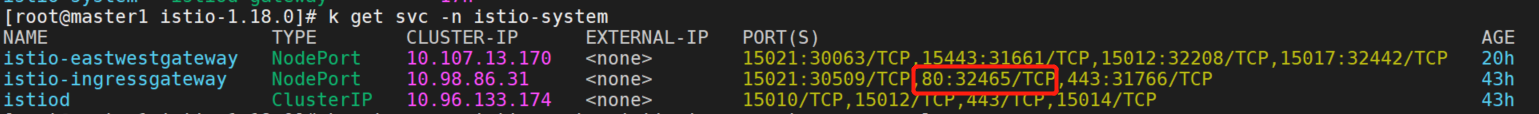

k get svc -n istio-system

k edit svc istio-eastwestgateway -n istio-system

k edit svc istio-ingressgateway -n istio-system
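Instead of editing the Services interactively, the type can be switched with kubectl patch. A sketch assuming the Services live in istio-system, as above; to_nodeport is a hypothetical helper. It also prints the node ports Kubernetes assigned, which are needed later to reach the gateways.

```bash
#!/usr/bin/env bash
# Switch gateway Services from LoadBalancer to NodePort and print their node ports.
to_nodeport() {
  local svc
  for svc in "$@"; do
    kubectl -n istio-system patch svc "$svc" --type merge -p '{"spec":{"type":"NodePort"}}' || return 1
    printf '%s: ' "$svc"
    kubectl -n istio-system get svc "$svc" \
      -o jsonpath='{range .spec.ports[*]}{.name}{"="}{.nodePort}{" "}{end}'
    echo
  done
}

# Usage: to_nodeport istio-eastwestgateway istio-ingressgateway
```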

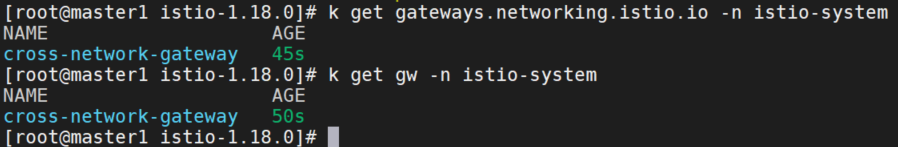

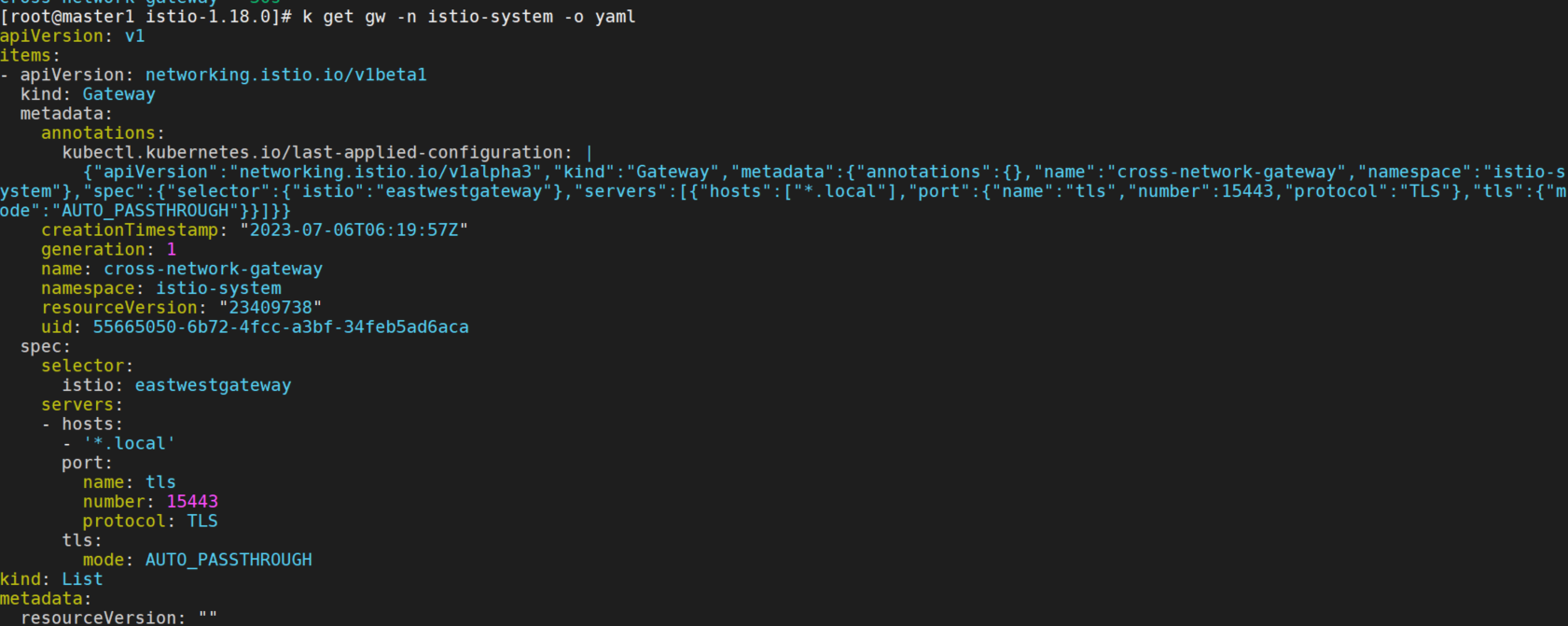

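Expose services in member1

Because the clusters are on separate networks, all services (*.local) must be exposed on the east-west gateway of each cluster. Although this gateway is public on the internet, the services behind it can only be accessed by services with a trusted mTLS certificate and workload ID, just as if they were on the same network.

```bash
kubectl apply -n istio-system -f \
  samples/multicluster/expose-services.yaml
```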

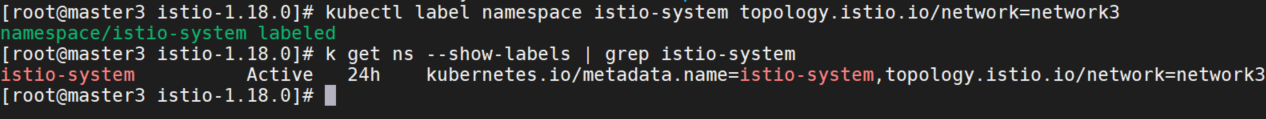

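Set the default network for member2 and member3

```bash
# On member2
kubectl label namespace istio-system topology.istio.io/network=network2
# On member3 (its IstioOperator and east-west gateway below use network3)
kubectl label namespace istio-system topology.istio.io/network=network3
```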

Configure member2 and member3 as primary clusters

Create the Istio configuration files:

```bash
# On member2
cat <<EOF > member2.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      meshID: mesh1
      multiCluster:
        clusterName: member2
      network: network2
EOF

# On member3
cat <<EOF > member3.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      meshID: mesh1
      multiCluster:
        clusterName: member3
      network: network3
EOF
```

Apply the configuration files to member2 and member3:

```bash
# On member2
istioctl install -f member2.yaml
# On member3
istioctl install -f member3.yaml
```

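Install the east-west gateways in member2 and member3

Following the same steps as for member1, install a dedicated east-west gateway in member2 and member3.

```bash
# On member2
samples/multicluster/gen-eastwest-gateway.sh \
  --mesh mesh1 --cluster member2 --network network2 | \
  istioctl install -y -f -

# On member3
samples/multicluster/gen-eastwest-gateway.sh \
  --mesh mesh1 --cluster member3 --network network3 | \
  istioctl install -y -f -
```

Then change the LoadBalancer services to NodePort, as on member1.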

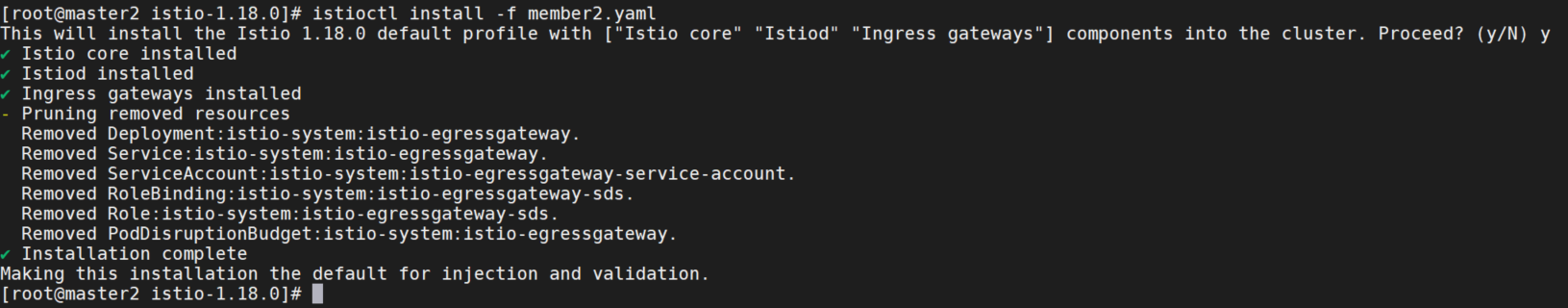

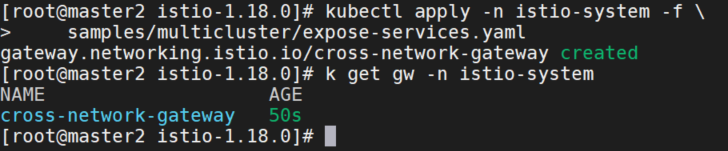

Expose services in member2 and member3

```bash
kubectl apply -n istio-system -f \
  samples/multicluster/expose-services.yaml
```

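Enable endpoint discovery

All my clusters use the same context name (kubernetes-admin@kubernetes), so I copied each member's kubeconfig to every machine (as kubeconfig.member1, kubeconfig.member2 and kubeconfig.member3).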

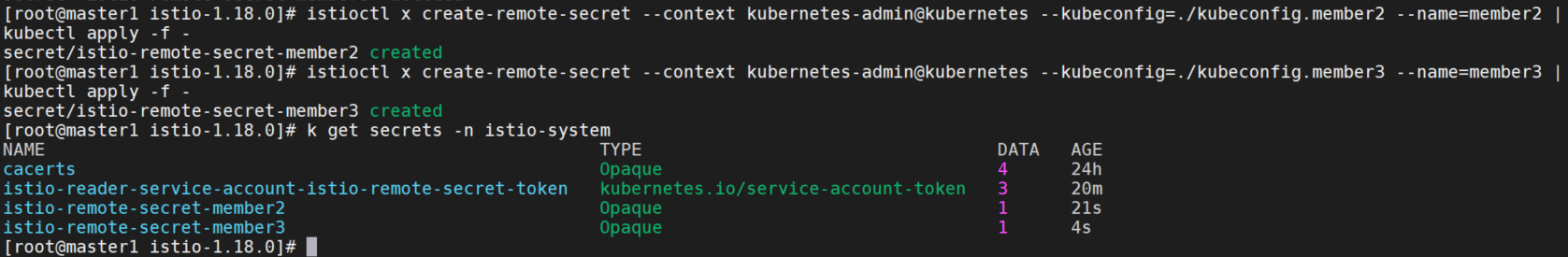

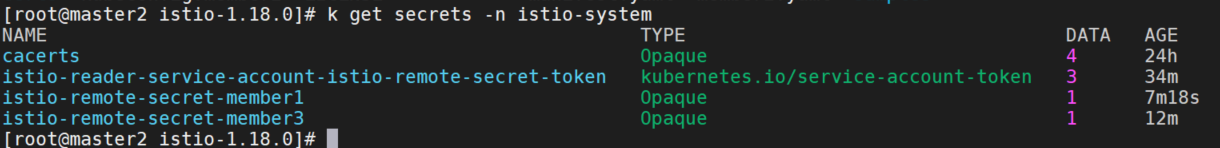

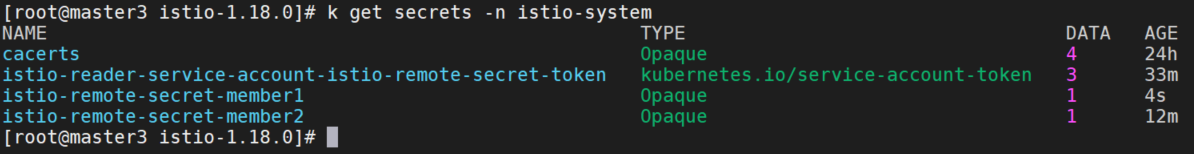

In member1, install a remote secret that gives access to the API servers of member2 and member3.

```bash
istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member2 --name=member2 | kubectl apply -f -
istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member3 --name=member3 | kubectl apply -f -
```

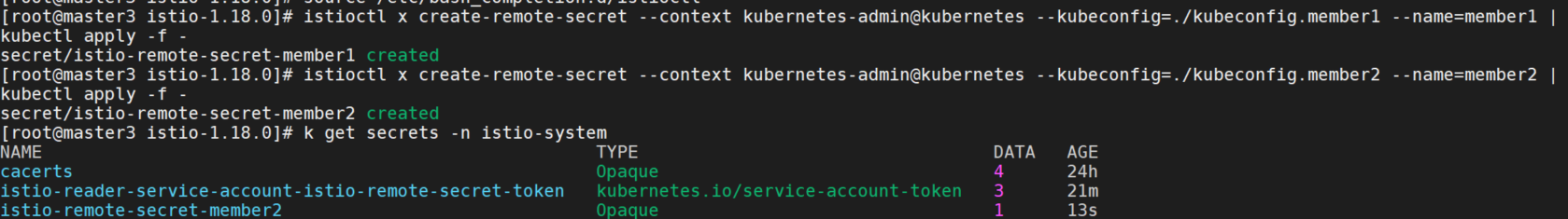

In member2, install a remote secret that gives access to the API servers of member1 and member3.

```bash
istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member1 --name=member1 | kubectl apply -f -
istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member3 --name=member3 | kubectl apply -f -
```

In member3, install a remote secret that gives access to the API servers of member1 and member2.

```bash
istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member1 --name=member1 | kubectl apply -f -
istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member2 --name=member2 | kubectl apply -f -
```

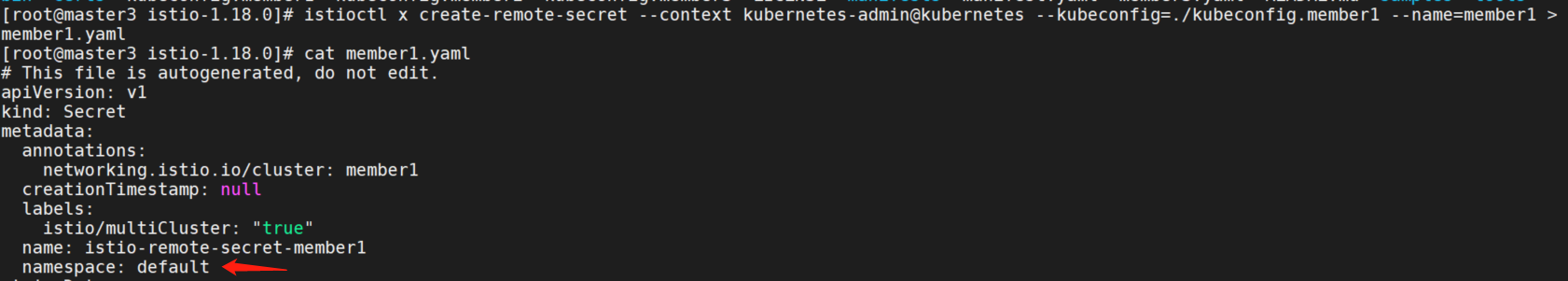

在member2和member3上apply后,istio-remote-secret-member1 secret都出现在了default空间内。

执行命令导出yaml,确实在default空间内。

istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member1 --name=member1 > member1.yaml

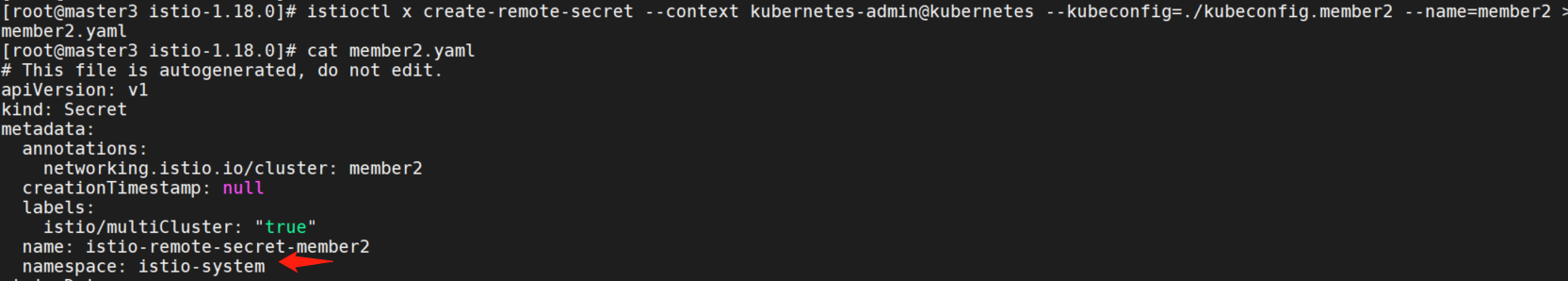

而member2就在istio-system中。

istioctl x create-remote-secret --context kubernetes-admin@kubernetes --kubeconfig=./kubeconfig.member2 --name=member2 > member2.yaml

修改namespace后,手动导入secret到istio-system中。

kubectl apply -f member1.yaml

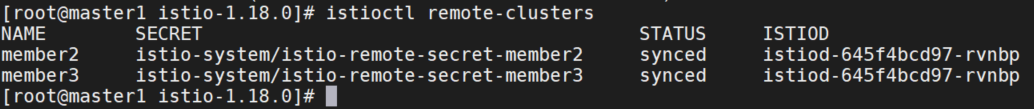
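The manual namespace edit can be scripted. A sketch, assuming the ./kubeconfig.memberN files and the shared kubernetes-admin@kubernetes context from this setup; it rewrites only the top-level metadata.namespace (two-space indent), so the kubeconfig embedded in the secret, which is indented deeper, stays untouched. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Force metadata.namespace of a generated remote secret (read on stdin) to istio-system.
force_istio_system_ns() {
  sed -E 's/^  namespace: .*/  namespace: istio-system/'
}

# Create and apply remote secrets for every peer of the current cluster.
# Assumption: ./kubeconfig.<peer> exists and uses the context kubernetes-admin@kubernetes.
apply_remote_secrets() {
  local peer
  for peer in "$@"; do
    istioctl x create-remote-secret \
      --context kubernetes-admin@kubernetes \
      --kubeconfig="./kubeconfig.${peer}" \
      --name="${peer}" \
      | force_istio_system_ns \
      | kubectl apply -n istio-system -f - || return 1
  done
}

# Usage on member2: apply_remote_secrets member1 member3
```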

Check the remote clusters:

```bash
istioctl remote-clusters
```

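Deploying Istio through the Karmada server

My clusters span multiple networks and are managed by Karmada, so the official Istio documentation cannot be used as-is to test the multicluster setup. Instead, I followed the Karmada documentation:

https://karmada.io/zh/docs/userguide/service/working-with-istio-on-non-flat-network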

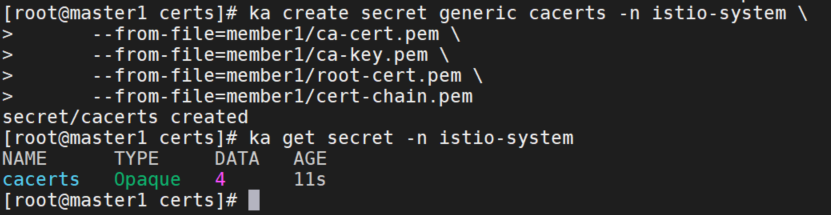
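The Karmada commands below use ka, which this article does not define; it is presumably kubectl pointed at the Karmada API server rather than at a member cluster. A sketch of such a helper, assuming the kubeconfig path that karmadactl init writes by default; KARMADA_KUBECONFIG is a hypothetical override variable.

```bash
#!/usr/bin/env bash
# "ka": kubectl against the Karmada API server.
# Assumption: default karmadactl init kubeconfig path; set KARMADA_KUBECONFIG to override.
ka() {
  kubectl --kubeconfig "${KARMADA_KUBECONFIG:-/etc/karmada/karmada-apiserver.config}" "$@"
}

# Usage: ka get clusters
```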

Create the namespace and secret

```bash
ka create namespace istio-system
ka create secret generic cacerts -n istio-system \
  --from-file=member1/ca-cert.pem \
  --from-file=member1/ca-key.pem \
  --from-file=member1/root-cert.pem \
  --from-file=member1/cert-chain.pem
```

Create the propagation policy for cacerts

```bash
cat <<EOF | ka apply -f -
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: cacerts-propagation
  namespace: istio-system
spec:
  resourceSelectors:
    - apiVersion: v1
      kind: Secret
      name: cacerts
  placement:
    clusterAffinity:
      clusterNames:
        - member1
        - member2
        - member3
EOF
```

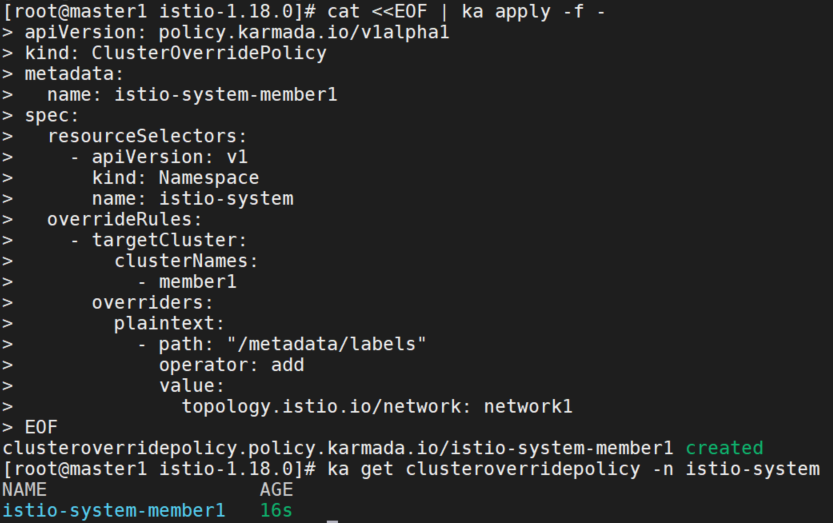

Override the istio-system namespace label for member1

```bash
cat <<EOF | ka apply -f -
apiVersion: policy.karmada.io/v1alpha1
kind: ClusterOverridePolicy
metadata:
  name: istio-system-member1
spec:
  resourceSelectors:
    - apiVersion: v1
      kind: Namespace
      name: istio-system
  overrideRules:
    - targetCluster:
        clusterNames:
          - member1
      overriders:
        plaintext:
          - path: "/metadata/labels"
            operator: add
            value:
              topology.istio.io/network: network1
EOF
```

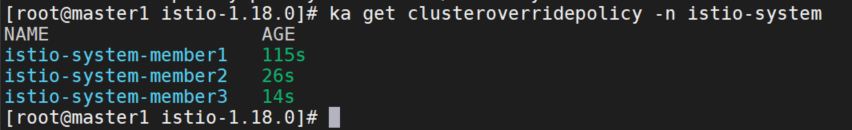

Create the same policies for member2 and member3 (with network2 and network3).

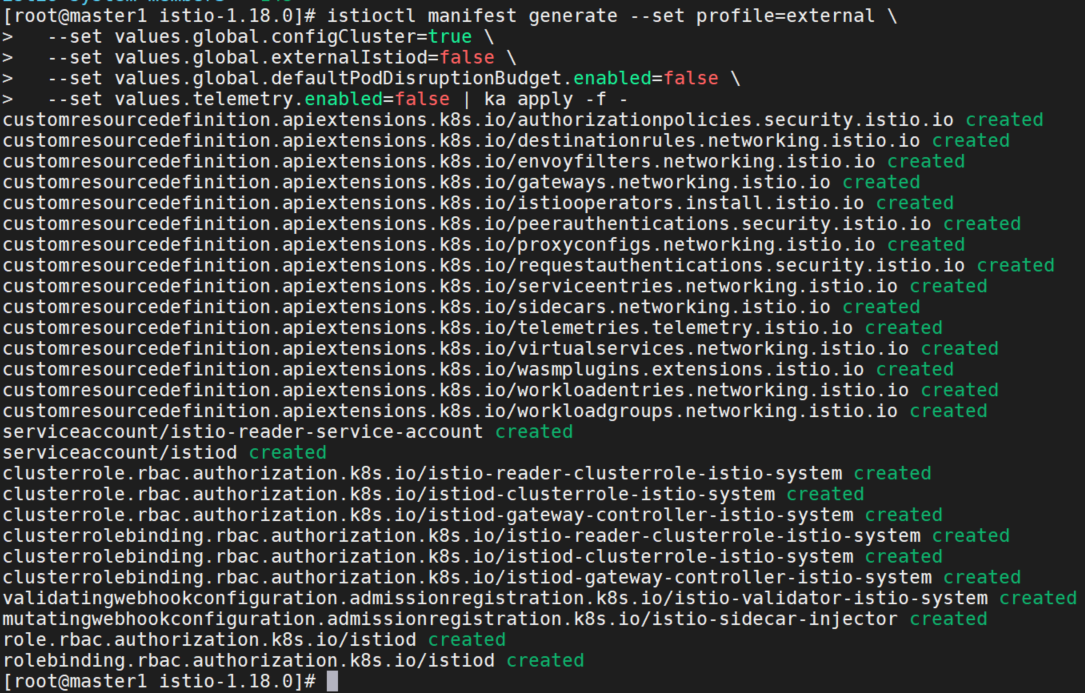
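A sketch that renders the same override for any member/network pair; render_network_override is a hypothetical helper, and the YAML mirrors the member1 policy above.

```bash
#!/usr/bin/env bash
# Render a ClusterOverridePolicy that labels istio-system with the cluster's network.
render_network_override() {
  local cluster=$1 network=$2
  cat <<EOF
apiVersion: policy.karmada.io/v1alpha1
kind: ClusterOverridePolicy
metadata:
  name: istio-system-${cluster}
spec:
  resourceSelectors:
    - apiVersion: v1
      kind: Namespace
      name: istio-system
  overrideRules:
    - targetCluster:
        clusterNames:
          - ${cluster}
      overriders:
        plaintext:
          - path: "/metadata/labels"
            operator: add
            value:
              topology.istio.io/network: ${network}
EOF
}

# Usage: for i in 2 3; do render_network_override "member$i" "network$i" | ka apply -f -; done
```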

Install the Istio CRDs on the Karmada API server

```bash
istioctl manifest generate --set profile=external \
  --set values.global.configCluster=true \
  --set values.global.externalIstiod=false \
  --set values.global.defaultPodDisruptionBudget.enabled=false \
  --set values.telemetry.enabled=false | ka apply -f -
```

Install istiod on member1

The Istio control plane and the east-west gateway were both already installed above.

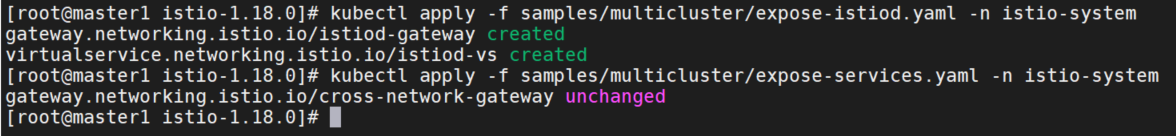

Expose the member1 control plane and services

```bash
kubectl apply -f samples/multicluster/expose-istiod.yaml -n istio-system
kubectl apply -f samples/multicluster/expose-services.yaml -n istio-system
```

The Istio remote installation was also already done above.

Create the propagation policies

Each PropagationPolicy needs a unique name within its namespace; otherwise a later policy replaces an earlier one, so the two Deployment policies have distinct names here. Note also that both select the sleep ServiceAccount; since Karmada claims a resource for only one PropagationPolicy, it will most likely follow just one of the two placements.

```yaml
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: service-propagation
spec:
  resourceSelectors:
    - apiVersion: v1
      kind: Service
      name: helloworld
    - apiVersion: v1
      kind: Service
      name: sleep
  placement:
    clusterAffinity:
      clusterNames:
        - member1
        - member2
        - member3
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: helloworld-v1-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: helloworld-v1
    - apiVersion: v1
      kind: ServiceAccount
      name: sleep
  placement:
    clusterAffinity:
      clusterNames:
        - member1
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: helloworld-v2-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: helloworld-v2
    - apiVersion: v1
      kind: ServiceAccount
      name: sleep
  placement:
    clusterAffinity:
      clusterNames:
        - member2
```

For testing I used the Karmada Bookinfo sample. Documentation: https://karmada.io/zh/docs/userguide/service/working-with-istio-on-flat-network#deploy-bookinfo-application

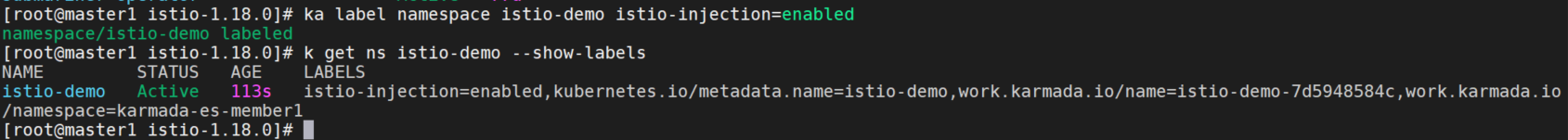

Create the namespace

```bash
ka create ns istio-demo
```

Enable sidecar injection

```bash
ka label namespace istio-demo istio-injection=enabled
```

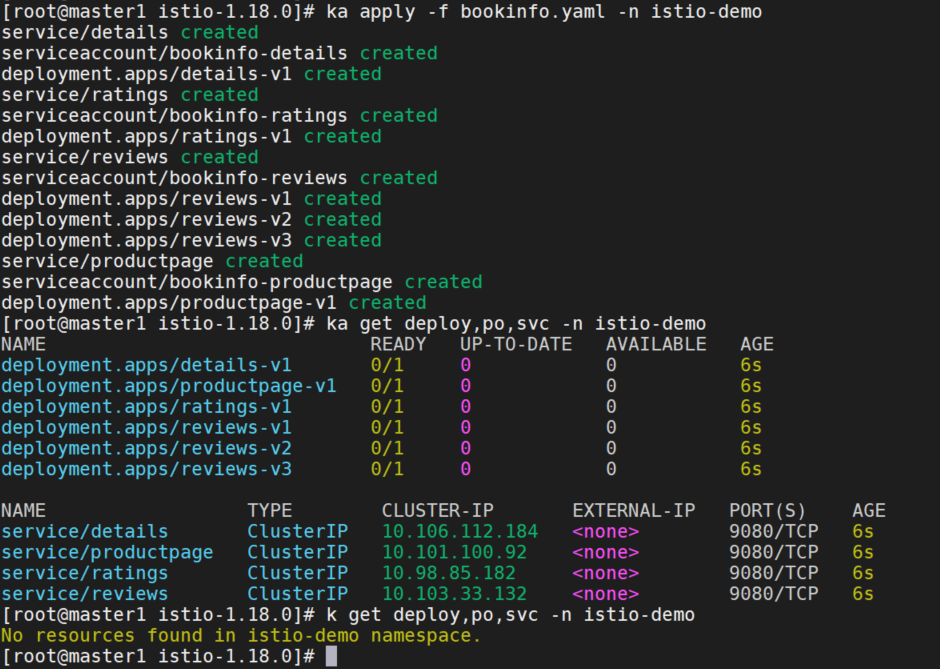

Deploy Bookinfo

```bash
ka apply -n istio-demo -f https://raw.githubusercontent.com/istio/istio/release-1.12/samples/bookinfo/platform/kube/bookinfo.yaml
```

The YAML is as follows:

```yaml
# Copyright Istio Authors
#
#   Licensed under the Apache License, Version 2.0 (the "License");
#   you may not use this file except in compliance with the License.
#   You may obtain a copy of the License at
#
#       http://www.apache.org/licenses/LICENSE-2.0
#
#   Unless required by applicable law or agreed to in writing, software
#   distributed under the License is distributed on an "AS IS" BASIS,
#   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
#   See the License for the specific language governing permissions and
#   limitations under the License.

##################################################################################################
# This file defines the services, service accounts, and deployments for the Bookinfo sample.
#
# To apply all 4 Bookinfo services, their corresponding service accounts, and deployments:
#
#   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml
#
# Alternatively, you can deploy any resource separately:
#
#   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l service=reviews # reviews Service
#   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l account=reviews # reviews ServiceAccount
#   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l app=reviews,version=v3 # reviews-v3 Deployment
##################################################################################################

##################################################################################################
# Details service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: details
  labels:
    app: details
    service: details
spec:
  ports:
  - port: 9080
    name: http
  selector:
    app: details
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookinfo-details
  labels:
    account: details
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: details-v1
  labels:
    app: details
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: details
      version: v1
  template:
    metadata:
      labels:
        app: details
        version: v1
    spec:
      serviceAccountName: bookinfo-details
      containers:
      - name: details
        image: docker.io/istio/examples-bookinfo-details-v1:1.16.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
---
##################################################################################################
# Ratings service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: ratings
  labels:
    app: ratings
    service: ratings
spec:
  ports:
  - port: 9080
    name: http
  selector:
    app: ratings
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookinfo-ratings
  labels:
    account: ratings
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratings-v1
  labels:
    app: ratings
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratings
      version: v1
  template:
    metadata:
      labels:
        app: ratings
        version: v1
    spec:
      serviceAccountName: bookinfo-ratings
      containers:
      - name: ratings
        image: docker.io/istio/examples-bookinfo-ratings-v1:1.16.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
---
##################################################################################################
# Reviews service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: reviews
  labels:
    app: reviews
    service: reviews
spec:
  ports:
  - port: 9080
    name: http
  selector:
    app: reviews
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookinfo-reviews
  labels:
    account: reviews
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: reviews-v1
  labels:
    app: reviews
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: reviews
      version: v1
  template:
    metadata:
      labels:
        app: reviews
        version: v1
    spec:
      serviceAccountName: bookinfo-reviews
      containers:
      - name: reviews
        image: docker.io/istio/examples-bookinfo-reviews-v1:1.16.2
        imagePullPolicy: IfNotPresent
        env:
        - name: LOG_DIR
          value: "/tmp/logs"
        ports:
        - containerPort: 9080
        volumeMounts:
        - name: tmp
          mountPath: /tmp
        - name: wlp-output
          mountPath: /opt/ibm/wlp/output
        securityContext:
          runAsUser: 1000
      volumes:
      - name: wlp-output
        emptyDir: {}
      - name: tmp
        emptyDir: {}
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: reviews-v2
  labels:
    app: reviews
    version: v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: reviews
      version: v2
  template:
    metadata:
      labels:
        app: reviews
        version: v2
    spec:
      serviceAccountName: bookinfo-reviews
      containers:
      - name: reviews
        image: docker.io/istio/examples-bookinfo-reviews-v2:1.16.2
        imagePullPolicy: IfNotPresent
        env:
        - name: LOG_DIR
          value: "/tmp/logs"
        ports:
        - containerPort: 9080
        volumeMounts:
        - name: tmp
          mountPath: /tmp
        - name: wlp-output
          mountPath: /opt/ibm/wlp/output
        securityContext:
          runAsUser: 1000
      volumes:
      - name: wlp-output
        emptyDir: {}
      - name: tmp
        emptyDir: {}
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: reviews-v3
  labels:
    app: reviews
    version: v3
spec:
  replicas: 1
  selector:
    matchLabels:
      app: reviews
      version: v3
  template:
    metadata:
      labels:
        app: reviews
        version: v3
    spec:
      serviceAccountName: bookinfo-reviews
      containers:
      - name: reviews
        image: docker.io/istio/examples-bookinfo-reviews-v3:1.16.2
        imagePullPolicy: IfNotPresent
        env:
        - name: LOG_DIR
          value: "/tmp/logs"
        ports:
        - containerPort: 9080
        volumeMounts:
        - name: tmp
          mountPath: /tmp
        - name: wlp-output
          mountPath: /opt/ibm/wlp/output
        securityContext:
          runAsUser: 1000
      volumes:
      - name: wlp-output
        emptyDir: {}
      - name: tmp
        emptyDir: {}
---
##################################################################################################
# Productpage services
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: productpage
  labels:
    app: productpage
    service: productpage
spec:
  ports:
  - port: 9080
    name: http
  selector:
    app: productpage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookinfo-productpage
  labels:
    account: productpage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: productpage-v1
  labels:
    app: productpage
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: productpage
      version: v1
  template:
    metadata:
      labels:
        app: productpage
        version: v1
    spec:
      serviceAccountName: bookinfo-productpage
      containers:
      - name: productpage
        image: docker.io/istio/examples-bookinfo-productpage-v1:1.16.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9080
        volumeMounts:
        - name: tmp
          mountPath: /tmp
        securityContext:
          runAsUser: 1000
      volumes:
      - name: tmp
        emptyDir: {}
---
```

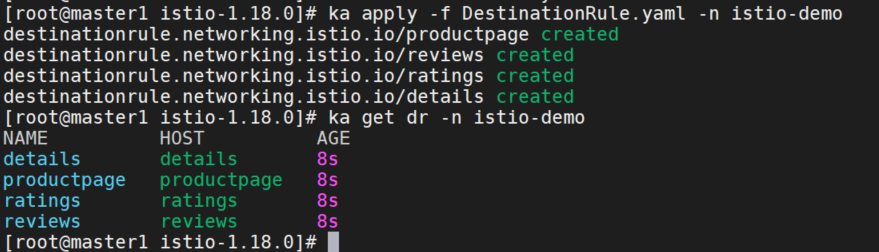

Create the DestinationRules

```bash
ka apply -n istio-demo -f https://raw.githubusercontent.com/istio/istio/release-1.12/samples/bookinfo/networking/destination-rule-all.yaml
```

The YAML is as follows:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: productpage
spec:
  host: productpage
  subsets:
  - name: v1
    labels:
      version: v1
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
  - name: v3
    labels:
      version: v3
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: ratings
spec:
  host: ratings
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
  - name: v2-mysql
    labels:
      version: v2-mysql
  - name: v2-mysql-vm
    labels:
      version: v2-mysql-vm
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: details
spec:
  host: details
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
---
```

Create the VirtualServices

```bash
ka apply -n istio-demo -f https://raw.githubusercontent.com/istio/istio/release-1.12/samples/bookinfo/networking/virtual-service-all-v1.yaml
```

The YAML is as follows:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: productpage
spec:
  hosts:
  - productpage
  http:
  - route:
    - destination:
        host: productpage
        subset: v1
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: ratings
spec:
  hosts:
  - ratings
  http:
  - route:
    - destination:
        host: ratings
        subset: v1
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: details
spec:
  hosts:
  - details
  http:
  - route:
    - destination:
        host: details
        subset: v1
---
```

Create the propagation policies

Create propagation policies for the Bookinfo services.

```bash
cat <<EOF | ka apply -n istio-demo -f -
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: service-propagation
spec:
  resourceSelectors:
    - apiVersion: v1
      kind: Service
      name: productpage
    - apiVersion: v1
      kind: Service
      name: details
    - apiVersion: v1
      kind: Service
      name: reviews
    - apiVersion: v1
      kind: Service
      name: ratings
  placement:
    clusterAffinity:
      clusterNames:
        - member1
        - member2
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: produtpage-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: productpage-v1
    - apiVersion: v1
      kind: ServiceAccount
      name: bookinfo-productpage
  placement:
    clusterAffinity:
      clusterNames:
        - member1
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: details-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: details-v1
    - apiVersion: v1
      kind: ServiceAccount
      name: bookinfo-details
  placement:
    clusterAffinity:
      clusterNames:
        - member2
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: reviews-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: reviews-v1
    - apiVersion: apps/v1
      kind: Deployment
      name: reviews-v2
    - apiVersion: apps/v1
      kind: Deployment
      name: reviews-v3
    - apiVersion: v1
      kind: ServiceAccount
      name: bookinfo-reviews
  placement:
    clusterAffinity:
      clusterNames:
        - member1
        - member2
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: ratings-propagation
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: ratings-v1
    - apiVersion: v1
      kind: ServiceAccount
      name: bookinfo-ratings
  placement:
    clusterAffinity:
      clusterNames:
        - member2
EOF
```

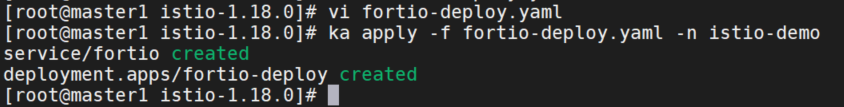

Deploy the fortio application

```bash
ka apply -n istio-demo -f https://raw.githubusercontent.com/istio/istio/release-1.12/samples/httpbin/sample-client/fortio-deploy.yaml
```

The YAML is as follows:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: fortio
  labels:
    app: fortio
    service: fortio
spec:
  ports:
  - port: 8080
    name: http
  selector:
    app: fortio
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fortio-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: fortio
  template:
    metadata:
      annotations:
        # This annotation causes Envoy to serve cluster.outbound statistics via 15000/stats
        # in addition to the stats normally served by Istio. The Circuit Breaking example task
        # gives an example of inspecting Envoy stats via proxy config.
        proxy.istio.io/config: |-
          proxyStatsMatcher:
            inclusionPrefixes:
            - "cluster.outbound"
            - "cluster_manager"
            - "listener_manager"
            - "server"
            - "cluster.xds-grpc"
      labels:
        app: fortio
    spec:
      containers:
      - name: fortio
        image: fortio/fortio:latest_release
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          name: http-fortio
        - containerPort: 8079
          name: grpc-ping
```

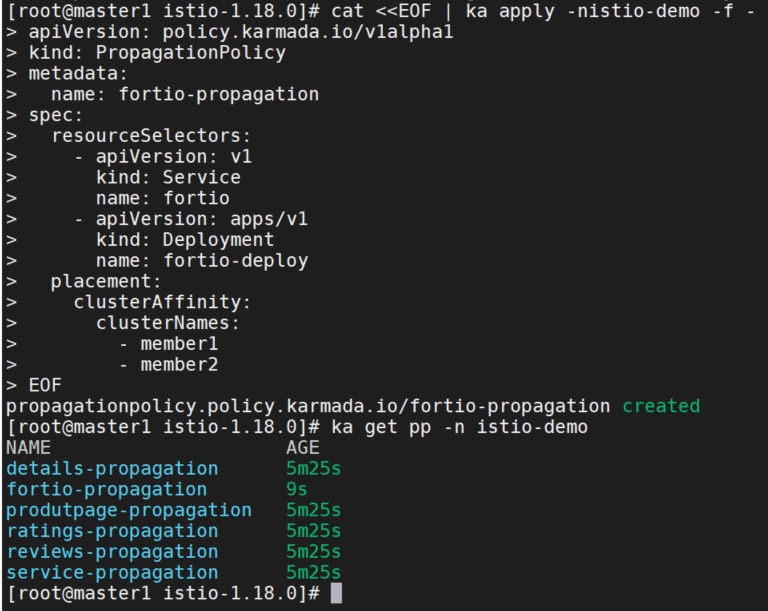

Create the propagation policy

Create a propagation policy for the fortio application.

```bash
cat <<EOF | ka apply -n istio-demo -f -
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: fortio-propagation
spec:
  resourceSelectors:
    - apiVersion: v1
      kind: Service
      name: fortio
    - apiVersion: apps/v1
      kind: Deployment
      name: fortio-deploy
  placement:
    clusterAffinity:
      clusterNames:
        - member1
        - member2
EOF
```

Testing

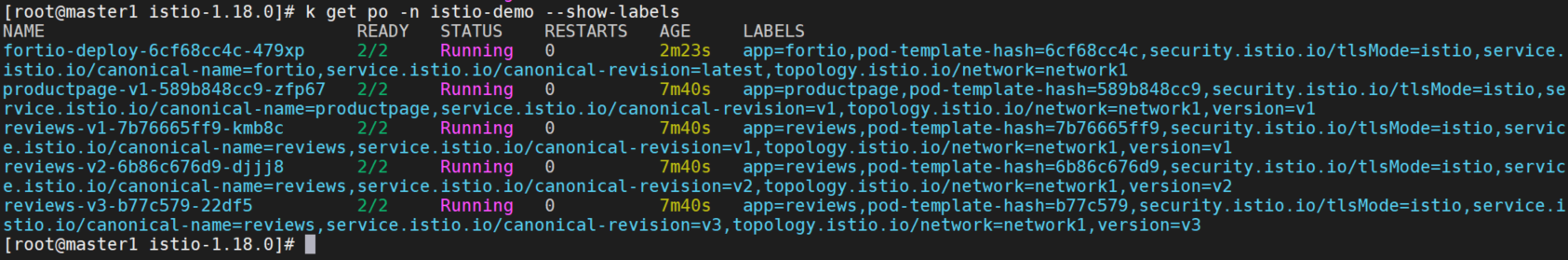

Switch to member1, which is also the karmada-host cluster.

Check the applications on member1:

Check the applications on member2:

Check the applications on member3:
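A sketch that lists the istio-demo Deployments on each member, to compare against the placement the PropagationPolicies ask for (productpage on member1, details and ratings on member2, reviews and fortio on both). It assumes the ./kubeconfig.memberN files copied earlier are all present on the machine where it runs; show_placement is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Print the istio-demo Deployments (name and ready replicas) on every member cluster.
show_placement() {
  local m
  for m in member1 member2 member3; do
    echo "== ${m}"
    kubectl --kubeconfig "./kubeconfig.${m}" -n istio-demo get deploy \
      -o custom-columns=NAME:.metadata.name,READY:.status.readyReplicas --no-headers || return 1
  done
}

# Usage: show_placement
```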

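Run the following commands to test the result:

```bash
export FORTIO_POD=$(kubectl get po -n istio-demo | grep fortio | awk '{print $1}')
kubectl exec -it ${FORTIO_POD} -n istio-demo -- fortio load -t 3s productpage:9080/productpage
```

This run failed with an I/O timeout, even though productpage could be reached both directly and from another namespace.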

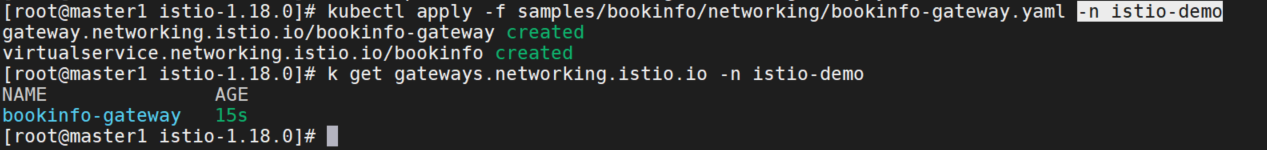
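One way to narrow down such a timeout is to ask istioctl which productpage endpoints the fortio sidecar has received. A sketch; in a multi-network mesh, endpoints in another network normally appear as that network's east-west gateway address on port 15443, so an empty or unexpected list points at endpoint discovery rather than at the application. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Show the Envoy endpoints the fortio sidecar knows for productpage:9080.
show_productpage_endpoints() {
  local ns=istio-demo pod
  pod=$(kubectl get pod -n "$ns" -l app=fortio -o jsonpath='{.items[0].metadata.name}') || return 1
  istioctl proxy-config endpoint "$pod" -n "$ns" \
    --cluster "outbound|9080||productpage.${ns}.svc.cluster.local"
}

# Usage: show_productpage_endpoints
```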

Create the Bookinfo gateway

```bash
kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml -n istio-demo
```

Find the gateway address

This is the address of istio-ingressgateway.

```bash
export INGRESS_NAME=istio-ingressgateway
export INGRESS_NS=istio-system
export INGRESS_HOST=$(kubectl -n "$INGRESS_NS" get service "$INGRESS_NAME" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
export INGRESS_PORT=$(kubectl -n "$INGRESS_NS" get service "$INGRESS_NAME" -o jsonpath='{.spec.ports[?(@.name=="http2")].port}')
export SECURE_INGRESS_PORT=$(kubectl -n "$INGRESS_NS" get service "$INGRESS_NAME" -o jsonpath='{.spec.ports[?(@.name=="https")].port}')
export TCP_INGRESS_PORT=$(kubectl -n "$INGRESS_NS" get service "$INGRESS_NAME" -o jsonpath='{.spec.ports[?(@.name=="tcp")].port}')
```

These commands assume a LoadBalancer service. Mine is a NodePort service, so what matters is the node port mapped to the http2 port.

So the gateway address is 172.16.255.183:32465 (a node IP plus the http2 node port).
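The LoadBalancer lookup above does not apply to a NodePort service. A sketch of the NodePort variant, following the Istio documentation's approach of using the ingress gateway pod's host IP together with the nodePort of the http2 port; ingress_nodeport_url is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Build the external URL of a NodePort ingress gateway.
ingress_nodeport_url() {
  local ns=${INGRESS_NS:-istio-system} name=${INGRESS_NAME:-istio-ingressgateway} host port
  # Node IP of the node running the ingress gateway pod.
  host=$(kubectl -n "$ns" get pod -l istio=ingressgateway -o jsonpath='{.items[0].status.hostIP}') || return 1
  # nodePort (not port) of the http2 service port.
  port=$(kubectl -n "$ns" get service "$name" -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}') || return 1
  echo "http://${host}:${port}"
}

# Usage: curl -s "$(ingress_nodeport_url)/productpage" | grep -o "<title>.*</title>"
```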

Access it from outside the cluster:

```bash
curl -s "http://172.16.255.183:32465/productpage" | grep -o "<title>.*</title>"
```

The page is returned normally.