Requirement

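One of my k8s environments runs on Alibaba Cloud without an ingress. One service is exposed externally through SLB -> NodePort, and that service now needs to see the source IP address of incoming requests.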

Solution

Alibaba Cloud offers client IP passthrough for both CLB and NLB.

CLB

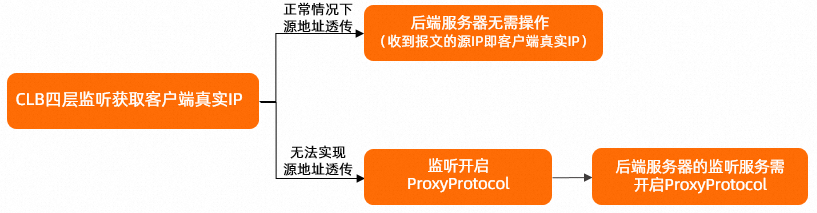

Layer 4

A layer-4 CLB listener passes the client IP through directly. My environment has no proxy in the path, so Proxy Protocol is not involved.

Layer 7

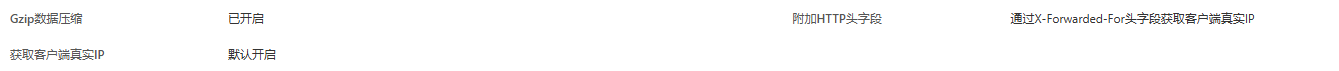

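Layer-7 CLB listeners pass the real client IP in the X-Forwarded-For header by default, and this cannot be turned off.

The backend Nginx must be built with the http_realip_module module, which it uses to parse the X-Forwarded-For header.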

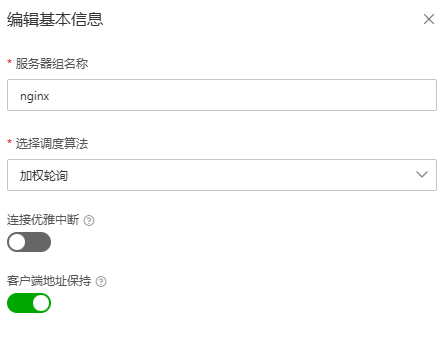
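Since the configuration is only shown as an image, here is a minimal sketch of what such an Nginx snippet might look like. The file name `realip.conf` and the trusted range `100.64.0.0/10` are assumptions for illustration; replace the range with the addresses from which the CLB actually reaches your backend.

```shell
# Write a hypothetical include file for Nginx; adjust the path for your setup.
CONF_FILE="${CONF_FILE:-./realip.conf}"

cat > "$CONF_FILE" <<'EOF'
# Assumption: requests from the CLB arrive from this range.
set_real_ip_from 100.64.0.0/10;
# Take the client address from the X-Forwarded-For header set by the CLB.
real_ip_header X-Forwarded-For;
# Skip trusted proxy addresses when walking the header from right to left.
real_ip_recursive on;
EOF

# If nginx is installed, report whether the realip module is compiled in.
if command -v nginx >/dev/null 2>&1; then
  if nginx -V 2>&1 | grep -q -- '--with-http_realip_module'; then
    echo "realip module available"
  else
    echo "realip module missing: rebuild nginx with --with-http_realip_module"
  fi
fi
```

The generated file would be included from the `http` or `server` block, after which `$remote_addr` in the access log reflects the client address instead of the CLB address.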

NLB

Enable client IP preservation; the source IP seen on the backend server is then the real client IP.

I am using CLB here.

CCM

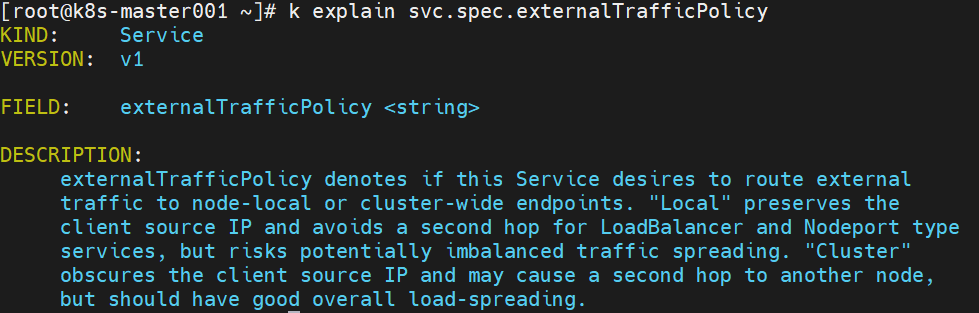

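Inside k8s, passing the client IP through requires changing the Service's externalTrafficPolicy to Local. Traffic then bypasses the cluster's NAT, keeps the client IP, and is delivered only to Pods on the node that received it. The default is Cluster.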
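As a minimal sketch, the policy could be switched with a patch like the one below. The Service name `my-service` and the namespace `default` are hypothetical placeholders, and the cluster calls only run when kubectl is installed.

```shell
# Hypothetical Service name and namespace; replace with your own.
SVC_NAME="${SVC_NAME:-my-service}"
NAMESPACE="${NAMESPACE:-default}"

# Patch that switches the policy from Cluster to Local.
PATCH='{"spec":{"externalTrafficPolicy":"Local"}}'

set_local_policy() {
  kubectl -n "$NAMESPACE" patch service "$SVC_NAME" -p "$PATCH"
}

show_policy() {
  kubectl -n "$NAMESPACE" get service "$SVC_NAME" \
    -o jsonpath='{.spec.externalTrafficPolicy}'
}

# Only talk to the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  { set_local_policy && show_policy; } || echo "patch failed" >&2
fi
```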

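After the change the service may become unreachable, because the backend servers bound to the SLB listener may not include the nodes where the service's Pods are running. The SLB backend server group therefore has to be updated dynamically so that it points at the nodes hosting the Pods.

This requires the Cloud Controller Manager (CCM) component; its deployment is covered in the steps that follow.

One of CCM's functions: when a Service's type is set to LoadBalancer, CCM creates and configures an Alibaba Cloud CLB for that Service, including the CLB instance, listeners, and backend server group. When the Service's backend Endpoints or the cluster nodes change, CCM updates the CLB backend server group automatically.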

Deploying CCM

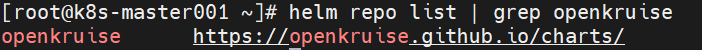

- Add the OpenKruise Helm repository and install Kruise

helm repo add openkruise https://openkruise.github.io/charts/

helm repo update

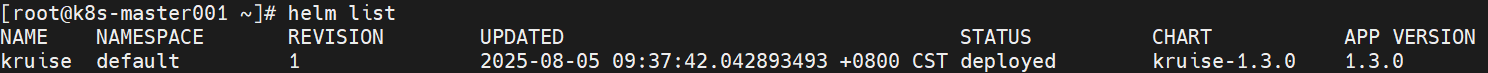

helm install kruise openkruise/kruise --version 1.3.0

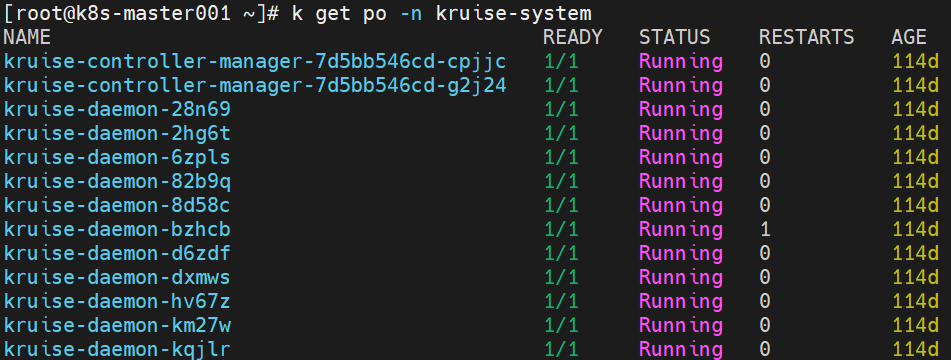

After installation, some kruise-daemon Pods may report this error:

Failed to list images for docker(unix:///hostvarrun/containerd/containerd.sock,unix:///hostvarrun/dockershim.sock): Error response from daemon: {"message":"client version 1.23 is too old. Minimum supported API version is 1.24, please upgrade your client to a newer version"}

A few of my nodes run the latest Docker version, whose daemon rejects the older API client used by kruise-daemon, so those nodes have to be excluded.

Edit the YAML of the kruise-daemon DaemonSet:

    # Pod spec of the DaemonSet (spec.template.spec)
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                - k8s-node001
                - k8s-node002
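To decide which hostnames belong in the NotIn list, it can help to see which container runtime version each node reports. A minimal sketch, assuming kubectl access to the cluster; the function name `node_runtimes` is made up for illustration.

```shell
# Print every node with the container runtime version it reports,
# for example docker://<version> or containerd://<version>.
node_runtimes() {
  kubectl get nodes \
    -o custom-columns=NAME:.metadata.name,RUNTIME:.status.nodeInfo.containerRuntimeVersion
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  node_runtimes || echo "could not list nodes" >&2
fi
```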

- 创建BroadcastJob给ecs打标

provider.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ecs-node-initor

rules:

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- patch

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: ecs-node-initor

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: ecs-node-initor

subjects:

- kind: ServiceAccount

name: ecs-node-initor

namespace: default

roleRef:

kind: ClusterRole

name: ecs-node-initor

apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps.kruise.io/v1alpha1

kind: BroadcastJob

metadata:

name: create-ecs-node-provider-id

spec:

template:

spec:

serviceAccount: ecs-node-initor

restartPolicy: OnFailure

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: type

operator: NotIn

values:

- virtual-kubelet

tolerations:

- operator: Exists

containers:

- name: create-ecs-node-provider-id

image: registry.cn-beijing.aliyuncs.com/eci-release/provider-initor:v1

command: [ "/usr/bin/init" ]

env:

- name: NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

completionPolicy:

type: Never

failurePolicy:

type: FailFast

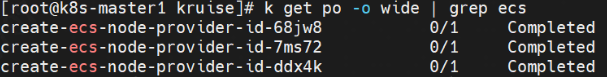

restartLimit: 3k apply -f provider.yamlcreate-ecs-node-provider-id相关的Pod均达到Completed状态,表示相应ECS节点的ProviderID已经配置成功。
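Besides watching the Pods, the result can be checked on the Node objects themselves. The sketch below lists `spec.providerID` per node; the shape test assumes the ID looks like `<region-id>.<instance-id>` (for example `cn-hangzhou.i-abc123`, an illustrative value), which should be confirmed against your own nodes.

```shell
# List each node with its providerID (<none> means it has not been set).
list_provider_ids() {
  kubectl get nodes \
    -o custom-columns=NAME:.metadata.name,PROVIDER_ID:.spec.providerID
}

# Assumption: a valid ID has the shape <region-id>.<instance-id>.
looks_like_provider_id() {
  printf '%s\n' "$1" | grep -Eq '^[a-z]+(-[a-z0-9]+)+\.i-[a-z0-9]+$'
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  list_provider_ids || echo "could not list nodes" >&2
fi
```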

- Clean up the BroadcastJob

    kubectl delete -f provider.yaml

- Create the ConfigMap

Save the AccessKey of the Alibaba Cloud account in environment variables:

    export ACCESS_KEY_ID=LTAI********************
    export ACCESS_KEY_SECRET=HAeS**************************
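The script in the next step Base64-encodes both values, so an empty variable would silently produce an empty credential. A small guard like the following sketch can catch that first; the helper names `check_access_key` and `b64` are hypothetical, and `base64 -w 0` assumes GNU coreutils.

```shell
# Fail early if either variable is missing or empty.
check_access_key() {
  if [ -z "${ACCESS_KEY_ID:-}" ] || [ -z "${ACCESS_KEY_SECRET:-}" ]; then
    echo "ACCESS_KEY_ID and ACCESS_KEY_SECRET must both be set" >&2
    return 1
  fi
}

# Base64-encode a value on a single line (-w 0 disables line wrapping).
b64() {
  printf '%s' "$1" | base64 -w 0
}

# Round trip with a dummy value (not a real key) to show the encoding is lossless.
encoded=$(b64 "dummy-key-id")
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "encoded=$encoded decoded=$decoded"
```

Calling `check_access_key || exit 1` at the top of the ConfigMap script would stop it before any file is written.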

- Add the AccessKey to the ConfigMap

    #!/bin/bash
    ## create ConfigMap kube-system/cloud-config for CCM.
    # The AccessKey values are stored Base64-encoded in the config file.
    accessKeyIDBase64=$(echo -n "$ACCESS_KEY_ID" | base64 -w 0)
    accessKeySecretBase64=$(echo -n "$ACCESS_KEY_SECRET" | base64 -w 0)

    cat <<EOF >cloud-config.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: cloud-config
      namespace: kube-system
    data:
      cloud-config.conf: |-
        {
            "Global": {
                "accessKeyID": "$accessKeyIDBase64",
                "accessKeySecret": "$accessKeySecretBase64",
                "region": "cn-hangzhou"
            }
        }
    EOF

    kubectl create -f cloud-config.yaml

- Deploy the CCM

Usage documentation: https://github.com/kubernetes/cloud-provider-alibaba-cloud/blob/master/docs/usage.md

In the YAML, adjust --cluster-cidr, the image version (ImageVersion), nodeSelector, and the Kubernetes path in hostPath, and change leader-elect-resource-lock to leases.
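The manifest below selects nodes with the label `app: cloud-controller-manager`, so the DaemonSet only schedules Pods once some node carries that label. A minimal sketch for labeling, where the node name `k8s-master001` is a hypothetical placeholder:

```shell
# Hypothetical, space-separated node names; replace with the nodes
# that should run the CCM.
CCM_NODES="${CCM_NODES:-k8s-master001}"

# Add the label that the DaemonSet's nodeSelector matches.
label_ccm_nodes() {
  for node in $CCM_NODES; do
    kubectl label node "$node" app=cloud-controller-manager --overwrite
  done
}

# Only talk to the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  label_ccm_nodes || echo "labeling failed" >&2
fi
```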

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:cloud-controller-manager
    rules:
      - apiGroups:
          - coordination.k8s.io
        resources:
          - leases
        verbs:
          - get
          - list
          - update
          - create
      - apiGroups:
          - ""
        resources:
          - persistentvolumes
          - services
          - secrets
          - endpoints
          - serviceaccounts
        verbs:
          - get
          - list
          - watch
          - create
          - update
          - patch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
          - list
          - watch
          - delete
          - patch
          - update
      - apiGroups:
          - ""
        resources:
          - services/status
        verbs:
          - update
          - patch
      - apiGroups:
          - ""
        resources:
          - nodes/status
        verbs:
          - patch
          - update
      - apiGroups:
          - ""
        resources:
          - events
          - endpoints
        verbs:
          - create
          - patch
          - update
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: cloud-controller-manager
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: system:cloud-controller-manager
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:cloud-controller-manager
    subjects:
      - kind: ServiceAccount
        name: cloud-controller-manager
        namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: system:shared-informers
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:cloud-controller-manager
    subjects:
      - kind: ServiceAccount
        name: shared-informers
        namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: system:cloud-node-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:cloud-controller-manager
    subjects:
      - kind: ServiceAccount
        name: cloud-node-controller
        namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: system:pvl-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:cloud-controller-manager
    subjects:
      - kind: ServiceAccount
        name: pvl-controller
        namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: system:route-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:cloud-controller-manager
    subjects:
      - kind: ServiceAccount
        name: route-controller
        namespace: kube-system
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      labels:
        app: cloud-controller-manager
        tier: control-plane
      name: cloud-controller-manager
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          app: cloud-controller-manager
          tier: control-plane
      template:
        metadata:
          labels:
            app: cloud-controller-manager
            tier: control-plane
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          serviceAccountName: cloud-controller-manager
          tolerations:
            - effect: NoSchedule
              operator: Exists
              key: node-role.kubernetes.io/master
            - effect: NoSchedule
              operator: Exists
              key: node.cloudprovider.kubernetes.io/uninitialized
          nodeSelector:
            app: cloud-controller-manager
          containers:
            - command:
                - /cloud-controller-manager
                - --leader-elect=true
                - --cloud-provider=alicloud
                - --use-service-account-credentials=true
                - --cloud-config=/etc/kubernetes/config/cloud-config.conf
                - --route-reconciliation-period=3m
                - --leader-elect-resource-lock=leases
                # The two flags below must be set; otherwise every node
                # gets the NetworkUnavailable taint
                - --configure-cloud-routes=false
                # replace ${cluster-cidr} with your own cluster cidr
                # example: 172.16.0.0/16
                - --cluster-cidr=172.16.0.0/16
              # replace ${ImageVersion} with the latest release version
              # example: v2.1.0
              image: registry.cn-hangzhou.aliyuncs.com/acs/cloud-controller-manager-amd64:v2.6.0
              livenessProbe:
                failureThreshold: 8
                httpGet:
                  host: 127.0.0.1
                  path: /healthz
                  port: 10258
                  scheme: HTTP
                initialDelaySeconds: 15
                timeoutSeconds: 15
              name: cloud-controller-manager
              resources:
                requests:
                  cpu: 200m
              volumeMounts:
                - mountPath: /etc/kubernetes/
                  name: k8s
                - mountPath: /etc/ssl/certs
                  name: certs
                - mountPath: /etc/pki
                  name: pki
                - mountPath: /etc/kubernetes/config
                  name: cloud-config
          hostNetwork: true
          volumes:
            - hostPath:
                path: /opt/kubernetes
              name: k8s
            - hostPath:
                path: /etc/ssl/certs
              name: certs
            - hostPath:
                path: /etc/pki
              name: pki
            - configMap:
                defaultMode: 420
                items:
                  - key: cloud-config.conf
                    path: cloud-config.conf
                name: cloud-config
              name: cloud-config

Kubernetes 1.20.11 cannot run the latest CCM release, v2.10.4; it fails because an API is missing:

controller-runtime/source/EventHandler "msg"="failed to get informer from cache" "error"="failed to get API group resources: unable to retrieve the complete list of server APIs: discovery.k8s.io/v1: the server could not find the requested resource"

The discovery.k8s.io/v1 API is only served from Kubernetes 1.21 onward, so I switched the image to v2.6.0.

With that version the CCM starts normally.
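Whether a cluster would hit the same error can be checked before choosing an image version. The following is a minimal sketch that looks for the API group version named in the error message; the function name `has_api_version` is made up for illustration.

```shell
# Succeeds when the API server serves exactly the given group/version.
# -x matches the whole line, -F treats the dots literally.
has_api_version() {
  kubectl api-versions | grep -qxF "$1"
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  if has_api_version "discovery.k8s.io/v1"; then
    echo "discovery.k8s.io/v1 is served"
  else
    echo "discovery.k8s.io/v1 is missing: stay on an older CCM such as v2.6.0"
  fi
fi
```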

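- Create the LoadBalancer Service

Once it is deployed, CCM creates and configures an Alibaba Cloud CLB for the Service, including the CLB instance, listeners, and backend server group.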
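The write-up does not include the Service manifest itself, so the following is only a sketch of what it might look like. The Service name `my-service`, the selector `app: my-app`, and the ports are hypothetical placeholders.

```shell
# Hypothetical Service: name, selector, and ports are placeholders.
cat > lb-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-service
  namespace: default
spec:
  type: LoadBalancer
  # Local keeps the client source IP and only targets nodes running a Pod.
  externalTrafficPolicy: Local
  selector:
    app: my-app
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 8080
EOF

# Only talk to the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f lb-service.yaml || echo "apply failed" >&2
  # EXTERNAL-IP shows the CLB address once the CCM has provisioned it.
  kubectl -n default get service my-service -o wide || true
fi
```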

- Verify

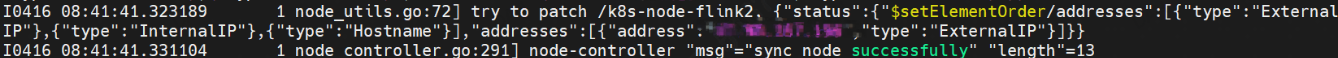

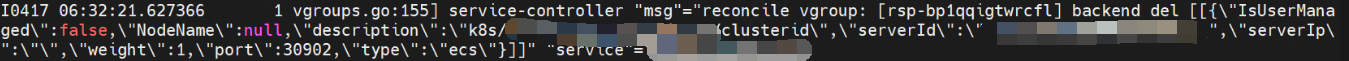

After the Pods are scaled out or in, backend servers on the CLB are added and removed accordingly.
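With externalTrafficPolicy set to Local, the CLB backends are expected to track the nodes that actually host a Pod of the Service. A minimal sketch for listing those nodes, to compare against the backend server group shown in the console; the label selector `app=my-app` is a hypothetical placeholder.

```shell
# Hypothetical label selector for the Pods behind the Service.
SELECTOR="${SELECTOR:-app=my-app}"

# Print the distinct nodes that currently run a matching Pod.
pod_nodes() {
  kubectl -n "${NAMESPACE:-default}" get pods -l "$SELECTOR" \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' | sort -u
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  pod_nodes || echo "could not list pods" >&2
fi
```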

Note:

https://github.com/kubernetes/cloud-provider-alibaba-cloud/issues/329

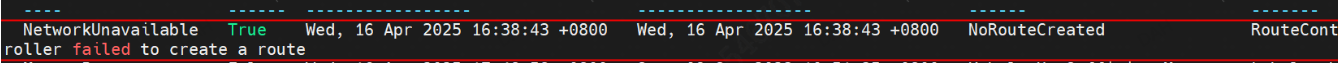

The --configure-cloud-routes=false flag must be set; otherwise the nodes become unschedulable.
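If nodes have already become unschedulable, listing their taints shows whether this is the cause. The sketch below assumes the taint in question is the standard `node.kubernetes.io/network-unavailable` key that Kubernetes derives from the NetworkUnavailable condition; the function names are made up for illustration.

```shell
# Print each node together with the keys of its taints.
node_taints() {
  kubectl get nodes \
    -o 'custom-columns=NAME:.metadata.name,TAINTS:.spec.taints[*].key'
}

# Print only the names of nodes that carry the network-unavailable taint.
nodes_network_unavailable() {
  node_taints | grep -F 'node.kubernetes.io/network-unavailable' | awk '{print $1}'
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  nodes_network_unavailable || true
fi
```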