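Environment

master1: 172.50.60.94

master2: 172.50.60.82

master3: 172.50.60.126

node: 172.50.60.105

kube-vip is used to make the k8s cluster highly available, replacing the usual haproxy + keepalived combination.

kube-vip documentation: https://kube-vip.io/docs/

The architecture looks roughly like this:

Steps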

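1. Basic environment setup

Set the hostname (run the matching command on each machine):

```bash
hostnamectl set-hostname master1   # on master1
hostnamectl set-hostname master2   # on master2
hostnamectl set-hostname master3   # on master3
hostnamectl set-hostname node      # on node
```

Disable the firewall and SELinux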

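```bash
systemctl stop firewalld        # stop it now
systemctl disable firewalld     # keep it off after reboot
setenforce 0                    # SELinux permissive for the current boot
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config   # disabled after reboot
```

Add hosts entries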

```bash
vi /etc/hosts
```

```
172.50.60.94 master1
172.50.60.82 master2
172.50.60.126 master3
172.50.60.105 node
```

Disable swap

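```bash
swapoff -a
# swapoff -a only lasts until reboot; also comment out the swap entry in /etc/fstab
sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab
```

Enable kernel IPv4 forwarding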

```bash
modprobe br_netfilter
vi /etc/sysctl.d/k8s.conf
```

```
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
```

```bash
sysctl -p /etc/sysctl.d/k8s.conf
```

Install ipvs

```bash
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```
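The module list above works as written on the CentOS 7 3.10 kernel. On newer kernels `nf_conntrack_ipv4` no longer exists because upstream merged it into `nf_conntrack` in 4.19, so `modprobe -- nf_conntrack_ipv4` fails there. Here is a minimal sketch that picks the module name from the kernel release. It assumes the upstream 4.19 cut-off. Distribution kernels with backports, such as some RHEL 8 builds, may differ, so confirm with `modinfo`. `conntrack_module` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the conntrack module name for a kernel release string.
# Assumption: upstream kernels >= 4.19 merged nf_conntrack_ipv4 into nf_conntrack;
# distribution kernels with backports may differ, so check with `modinfo`.
conntrack_module() {
  local release=$1 major minor
  major=${release%%.*}   # "3.10.0-1160.el7.x86_64" -> "3"
  minor=${release#*.}    # -> "10.0-1160.el7.x86_64"
  minor=${minor%%.*}     # -> "10"
  if (( major > 4 || (major == 4 && minor >= 19) )); then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Example use on a node (as root):
#   for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$(conntrack_module "$(uname -r)")"; do
#     modprobe -- "$m"
#   done
```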

```bash
# run these yum installs only after the proxy (step 2) is configured
yum -y install epel-release
yum install ipset ipvsadm -y
```

2. Configure the proxy

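My machines are VMs on an internal network with no Internet access, so they need a proxy. I use a local squid instance.

yum proxy

```bash
vi /etc/yum.conf
```

Add under the [main] section:

```
proxy=http://192.168.11.140:3128
```

wget proxy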

```bash
yum -y install wget
vim /etc/wgetrc
```

```
http_proxy=http://192.168.11.140:3128
https_proxy=http://192.168.11.140:3128
```

3. Install docker

```bash
yum -y install yum-utils
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum -y install docker-ce docker-ce-cli containerd.io docker-compose-plugin
systemctl enable --now docker   # start docker now and on every boot
systemctl status docker
docker version
```

4. Configure the docker proxy and registry mirror

```bash
mkdir -p /etc/systemd/system/docker.service.d
touch /etc/systemd/system/docker.service.d/proxy.conf
vim /etc/systemd/system/docker.service.d/proxy.conf
```

```ini
[Service]
Environment="HTTP_PROXY=http://192.168.11.140:3128/"
Environment="HTTPS_PROXY=http://192.168.11.140:3128/"
Environment="NO_PROXY=localhost,127.0.0.1,.example.com"
```

```bash
vim /etc/docker/daemon.json
```

```json
{
  "registry-mirrors": ["https://registry.cn-hangzhou.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```
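A typo in `daemon.json`, such as a trailing comma, stops dockerd from starting after the restart below. Here is a minimal sketch that checks the file first. It assumes `python3` is available; stock CentOS 7 ships only `python`, whose `-m json.tool` behaves the same way. `check_json` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the given file is valid JSON.
# Assumes python3 is installed; `python3 -m json.tool` exits non-zero on a parse error.
check_json() {
  python3 -m json.tool "$1" > /dev/null
}

# Example use:
#   check_json /etc/docker/daemon.json && systemctl restart docker
```

After the restart, `docker info --format '{{.CgroupDriver}}'` should print `systemd`. That matches the kubelet cgroup driver that kubeadm 1.22 defaults to.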

```bash
systemctl daemon-reload
systemctl restart docker
systemctl status docker
systemctl show --property=Environment docker
```

5. Configure kube-vip on master1

```bash
# pick an unused IP in the masters' subnet: ping should get no reply
ping -c 3 172.50.60.66
```

Set the VIP address

```bash
export VIP=172.50.60.66
```
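In ARP mode (`--arp`), kube-vip announces the VIP on the local layer-2 segment. The VIP therefore has to be in the masters' subnet as well as unused. Here is a minimal sketch of that check in plain bash. It assumes a /24 network, so pass your real prefix length. `ip_to_int` and `same_subnet` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking that the VIP shares a master's IPv4 subnet.

# ip_to_int A.B.C.D -> print the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 PREFIX_LEN -> exit status 0 if both addresses are in the same subnet
same_subnet() {
  local mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$2") & mask) ))
}

# Example use on master1 (assumes a /24):
#   same_subnet "$VIP" 172.50.60.94 24 && ! ping -c 3 -W 1 "$VIP" > /dev/null && echo "VIP looks usable"
```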

```bash
# find the NIC name
ip a
export INTERFACE=eth0
```
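You can also look up the interface name from the master's own address instead of reading it from `ip a` by eye. Here is a minimal sketch. It assumes the one-line-per-address format of `ip -o -4 addr show`, with the interface name in field 2 and `ADDRESS/PREFIX` in field 4. `iface_for_ip` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and print the
# interface that owns the given IPv4 address (field 2 = interface, field 4 = ADDRESS/PREFIX).
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) { print $2; exit } }'
}

# Example use on master1:
#   export INTERFACE=$(ip -o -4 addr show | iface_for_ip 172.50.60.94)
```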

```bash
export master1=172.50.60.94
export master2=172.50.60.82
export master3=172.50.60.126
mkdir -p /etc/kubernetes/manifests
docker run --rm plndr/kube-vip:v0.5.5 manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --arp --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml
```

6. Install kubelet, kubeadm, and kubectl

Install all three on the masters; install kubelet and kubeadm on the node.
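The rule above can be captured in a small helper so the same install line works on every machine. The masters get all three packages and the node gets kubelet and kubeadm, with every package pinned to the same version as `kubernetesVersion`. Here is a minimal sketch. `k8s_packages` and the role names `master`/`node` are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the pinned package list for a role ("master" or "node").
k8s_packages() {
  local version=$1 role=$2
  case $role in
    master) echo "kubeadm-$version kubectl-$version kubelet-$version" ;;
    node)   echo "kubeadm-$version kubelet-$version" ;;
    *)      echo "unknown role: $role" >&2; return 1 ;;
  esac
}

# Example use on the node, once the repo below is configured:
#   yum install -y $(k8s_packages 1.22.15 node) --disableexcludes=kubernetes
```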

```bash
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum search kubeadm --showduplicates
yum install -y kubeadm-1.22.15 kubectl-1.22.15 kubelet-1.22.15 --disableexcludes=kubernetes
```

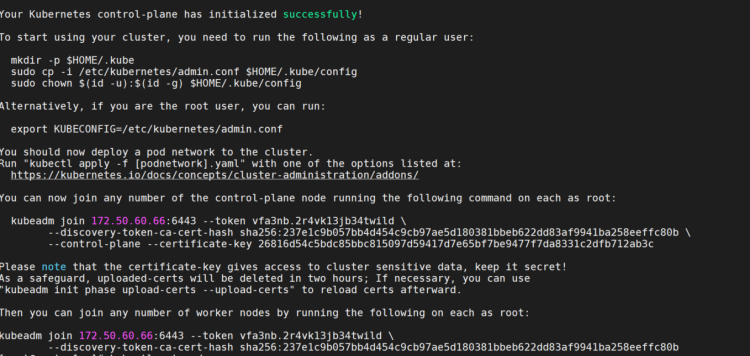

```bash
systemctl enable --now kubelet
```

7. Initialize the cluster

```bash
cat << EOF > kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta3
# HA endpoint: the kube-vip VIP
controlPlaneEndpoint: "172.50.60.66:6443"
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# must match the installed Kubernetes version
kubernetesVersion: v1.22.15
# for Rancher compatibility
controllerManager:
  extraArgs:
    # only "0" (which disables the insecure port) works here; any other value errors. Or omit the line.
    port: "0"
# for Rancher compatibility: enable the insecure metrics port
scheduler:
  extraArgs:
    port: "10251"
# keep managedFields out of exported YAML
apiServer:
  extraArgs:
    feature-gates: ServerSideApply=false
networking:
  # same as the original cluster
  # podSubnet must match the Calico pool network in calico.yaml
  podSubnet: "192.169.0.0/16"
---
# enable IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
```

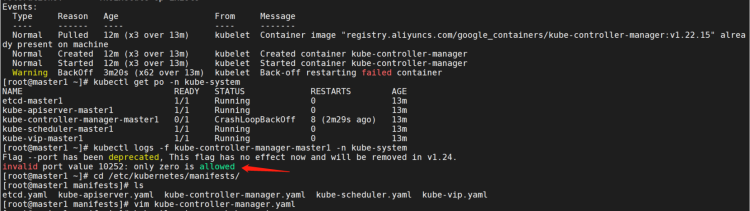
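The init output saved in kubeadm-init.log contains the token, CA certificate hash, and certificate key that the join commands in steps 8 to 10 use. Here is a minimal sketch for extracting them. It assumes kubeadm's usual output format: `--token` followed by a `[a-z0-9]{6}.[a-z0-9]{16}` token, a `sha256:` hash of 64 hex characters, and a 64-hex-character certificate key. The function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: pull join parameters out of a saved `kubeadm init` log.
# Each prints the first match found in the file given as $1.

join_token() {
  grep -oE -m1 -- '--token [a-z0-9]{6}\.[a-z0-9]{16}' "$1" | awk '{print $2}'
}

join_ca_hash() {
  grep -oE -m1 'sha256:[a-f0-9]{64}' "$1"
}

join_cert_key() {
  grep -oE -m1 -- '--certificate-key [a-f0-9]{64}' "$1" | awk '{print $2}'
}

# Example use on master1:
#   log=kubeadm-init.log
#   echo "--token $(join_token $log) --discovery-token-ca-cert-hash $(join_ca_hash $log)" \
#        "--control-plane --certificate-key $(join_cert_key $log)"
```

By default the bootstrap token expires after 24 hours, and the uploaded certificates are deleted after two hours. If master2 or master3 joins later than that, regenerate both on master1 with `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs`.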

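The kube-proxy configuration needs its own kind and apiVersion, as in the second YAML document of the config above.

If the controllerManager port above is set to anything other than "0", the following error is reported: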

Configure kubectl

```bash
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```

8. Configure master2

```bash
export VIP=172.50.60.66
export INTERFACE=eth0
export master1=172.50.60.94
export master2=172.50.60.82
export master3=172.50.60.126
kubeadm join 172.50.60.66:6443 --token vfa3nb.2r4vk13jb34twild \
  --discovery-token-ca-cert-hash sha256:237e1c9b057bb4d454c9cb97ae5d180381bbeb622dd83af9941ba258eeffc80b \
  --control-plane --certificate-key 26816d54c5bdc85bbc815097d59417d7e65bf7be9477f7da8331c2dfb712ab3c
docker run --rm plndr/kube-vip:v0.5.5 manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --arp --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml
```

9. Configure master3

```bash
export VIP=172.50.60.66
export INTERFACE=eth0
export master1=172.50.60.94
export master2=172.50.60.82
export master3=172.50.60.126
kubeadm join 172.50.60.66:6443 --token vfa3nb.2r4vk13jb34twild \
  --discovery-token-ca-cert-hash sha256:237e1c9b057bb4d454c9cb97ae5d180381bbeb622dd83af9941ba258eeffc80b \
  --control-plane --certificate-key 26816d54c5bdc85bbc815097d59417d7e65bf7be9477f7da8331c2dfb712ab3c
docker run --rm plndr/kube-vip:v0.5.5 manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --arp --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml
```

10. Configure the node

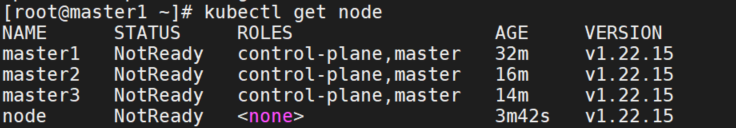

```bash
kubeadm join 172.50.60.66:6443 --token vfa3nb.2r4vk13jb34twild \
  --discovery-token-ca-cert-hash sha256:237e1c9b057bb4d454c9cb97ae5d180381bbeb622dd83af9941ba258eeffc80b
```

11. Check the nodes

```bash
kubectl get node
```
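At this point the nodes normally report `NotReady`, because no CNI plugin is installed until Calico is deployed in step 12. Here is a minimal sketch for waiting until every node is `Ready`. It assumes the default tabular output of `kubectl get node`, with STATUS in the second column; a status such as `Ready,SchedulingDisabled` counts as not ready. `all_nodes_ready` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node` output on stdin; succeed only if
# there is at least one node and every STATUS (column 2) is exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
       END { exit (n == 0 || bad > 0) }'
}

# Example use: wait until every node is Ready (after step 12)
#   until kubectl get node | all_nodes_ready; do sleep 5; done
```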

12. Configure Calico

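```bash
curl https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/calico.yaml -O
```

Optionally set CALICO_IPV4POOL_CIDR in calico.yaml (it ships commented out). If you set it, it should fall within the podSubnet configured in kubeadm-config.yaml:

```yaml
- name: CALICO_IPV4POOL_CIDR
  value: "192.169.0.0/16"
```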
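A mismatch between `CALICO_IPV4POOL_CIDR` and the kubeadm `podSubnet` is easy to miss. Here is a minimal sketch that compares the two files before applying. It assumes the indentation used in the snippets here. It accepts an unset (commented-out) `CALICO_IPV4POOL_CIDR`, on the assumption that Calico then takes the pool from the kubeadm configuration. It also checks exact equality, which is stricter than Calico's "should fall within" requirement. The function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers comparing kubeadm's podSubnet with Calico's IPv4 pool CIDR.

# pod_subnet FILE -> value of the first "podSubnet:" key, quotes removed
pod_subnet() {
  awk -F': *' '$1 ~ /^ *podSubnet$/ { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# calico_pool_cidr FILE -> value under an uncommented "- name: CALICO_IPV4POOL_CIDR"
calico_pool_cidr() {
  awk '/^ *- name: CALICO_IPV4POOL_CIDR/ { getline; sub(/^ *value: */, ""); gsub(/"/, ""); print; exit }' "$1"
}

# check_pool KUBEADM_CONFIG CALICO_YAML -> succeed if the pool is unset or equals podSubnet
check_pool() {
  local want have
  want=$(pod_subnet "$1")
  have=$(calico_pool_cidr "$2")
  [ -z "$have" ] || [ "$have" = "$want" ]
}

# Example use before applying:
#   check_pool kubeadm-config.yaml calico.yaml || echo "CALICO_IPV4POOL_CIDR does not match podSubnet"
```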

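```bash
kubectl apply -f calico.yaml
# optional: switch the IP pool to BGP without encapsulation (ipipMode: Never) or to mixed mode (ipipMode: CrossSubnet)
kubectl edit ippool
```

The setting used here (mixed mode):

```yaml
ipipMode: CrossSubnet
```

13. Check the cluster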

```bash
kubectl get po -A
```

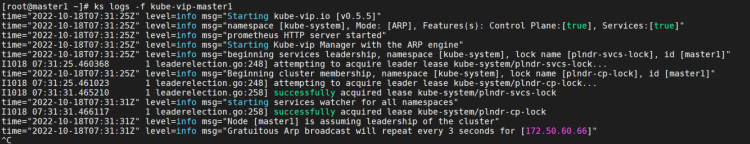

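Check kube-vip's leader election

```bash
# -f follows the log; press Ctrl-C before checking the next pod
kubectl logs -f kube-vip-master1 -n kube-system
kubectl logs -f kube-vip-master2 -n kube-system
kubectl logs -f kube-vip-master3 -n kube-system
```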

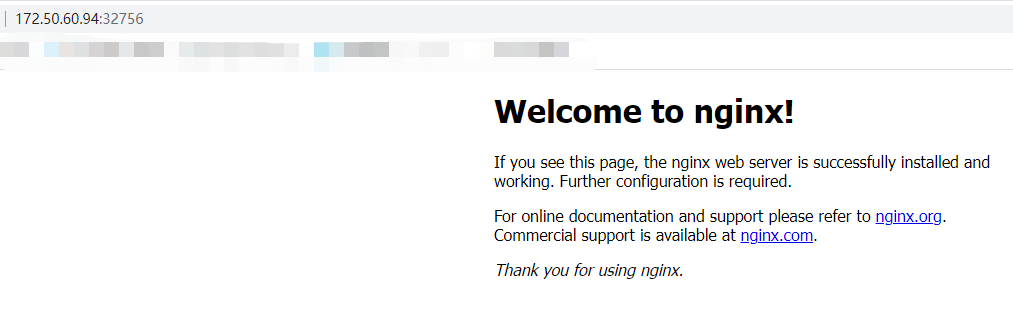
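Reading the three logs works, but a shorter check is to ask which node holds the leader lease. Here is a minimal sketch. It assumes kube-vip v0.5.x keeps its control-plane election in the Lease `plndr-cp-lock` in `kube-system` and records the node name as the holder. If yours differs, list the leases with `kubectl -n kube-system get lease`. `kube_vip_leader` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the holder of kube-vip's control-plane leader lease.
# Assumption: kube-vip v0.5.x uses the Lease "plndr-cp-lock" in kube-system;
# pass a different lease name as $1 if `kubectl -n kube-system get lease` shows otherwise.
kube_vip_leader() {
  kubectl -n kube-system get lease "${1:-plndr-cp-lock}" -o jsonpath='{.spec.holderIdentity}'
}

# Example use on any master with kubectl configured:
#   echo "kube-vip leader: $(kube_vip_leader)"
```

On the leader, `ip -4 addr show dev eth0` should also list 172.50.60.66.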

14. Deploy an nginx test service

Save the following as nginx.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
  - port: 80
    protocol: TCP
    name: http
    nodePort: 32756
  selector:
    app: nginx
```

```bash
kubectl apply -f nginx.yaml
```

Check the pod IP and access the service

```bash
kubectl get po,svc -o wide
```
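kube-proxy opens the NodePort (32756) on every node, so you can test the service from outside the cluster with any node IP. Here is a minimal sketch that polls until nginx answers with HTTP 200. It assumes `curl` is installed. `wait_http_200` is a hypothetical helper, and its retry count and delay are arbitrary defaults:

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll URL until it returns HTTP 200.
# Args: URL [TRIES=10] [DELAY_SECONDS=3]. Assumes curl is installed.
wait_http_200() {
  local url=$1 tries=${2:-10} delay=${3:-3} code i
  for (( i = 1; i <= tries; i++ )); do
    # curl prints "000" as the code when it cannot connect; "|| true" keeps set -e scripts alive
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 2 "$url") || true
    [ "$code" = "200" ] && return 0
    (( i < tries )) && sleep "$delay"
  done
  return 1
}

# Example use, against the node's IP and the NodePort from nginx.yaml:
#   wait_http_200 http://172.50.60.105:32756/ && echo "nginx is reachable"
```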