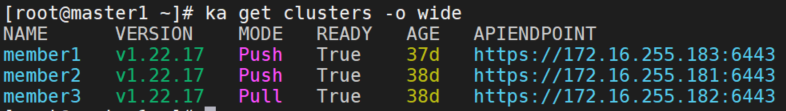

Environment

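My environment is the one I previously used to deploy Karmada: three single-node clusters. The clusters have been upgraded to Kubernetes 1.22.17 (see here).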

- master1: 172.16.255.183 (member1)

- master2: 172.16.255.181 (member2)

- master3: 172.16.255.182 (member3)

- node: 172.16.255.184 (the non-cluster host)

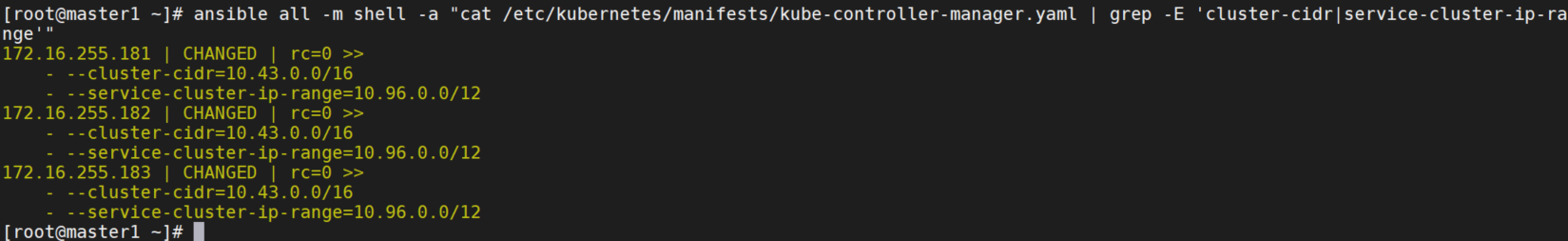

However, every cluster uses the same pod CIDR and service CIDR, so Globalnet is required.

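The non-cluster host's subnet should be separate from the clusters' subnets so that it can be specified as an external CIDR. Here, the test machine and the cluster nodes all sit on the same internal network, 172.16.255.0/24, so they cannot be separated. Because this test environment has only one non-cluster host, a single-host external CIDR can be used instead, so the external CIDR is set manually to 172.16.255.184/32.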

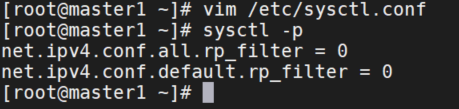

Disable anti-spoofing

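Note: in this setup, the gateway node and the non-cluster host reach each other through global IPs, which means packets are sent to IP addresses that do not belong to the actual network segment. To keep such packets from being dropped, the anti-spoofing rules (reverse path filtering) must be disabled on both the host and the gateway node.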

vim /etc/sysctl.conf

# Add the following settings at the end of the file

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

sysctl -p
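Setting all and default to 0 is not always the whole story: according to the kernel's ip-sysctl documentation, the value actually applied to an interface is the maximum of conf/all/rp_filter and conf/<interface>/rp_filter, and conf/default only applies to interfaces created later. A small check, sketched under the assumption that the NIC is ens192 as in this environment; effective_rp_filter is a hypothetical helper, and its optional second argument (an alternative to /proc/sys) exists only to make it testable:

```shell
# Print the rp_filter value the kernel actually applies to an interface:
# max(conf/all/rp_filter, conf/<iface>/rp_filter).
# $1 = interface name, $2 = sysctl root (hypothetical override, default /proc/sys)
effective_rp_filter() {
  local iface=$1 root=${2:-/proc/sys} all_val iface_val
  all_val=$(cat "$root/net/ipv4/conf/all/rp_filter") || return 1
  iface_val=$(cat "$root/net/ipv4/conf/$iface/rp_filter") || return 1
  if [ "$all_val" -gt "$iface_val" ]; then
    echo "$all_val"
  else
    echo "$iface_val"
  fi
}

# On the gateway node and on node, this should print 0 after the change:
# effective_rp_filter ens192
```

If it still prints 1 or 2, the interface-specific key (for example net.ipv4.conf.ens192.rp_filter=0) can be added to /etc/sysctl.conf as well.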

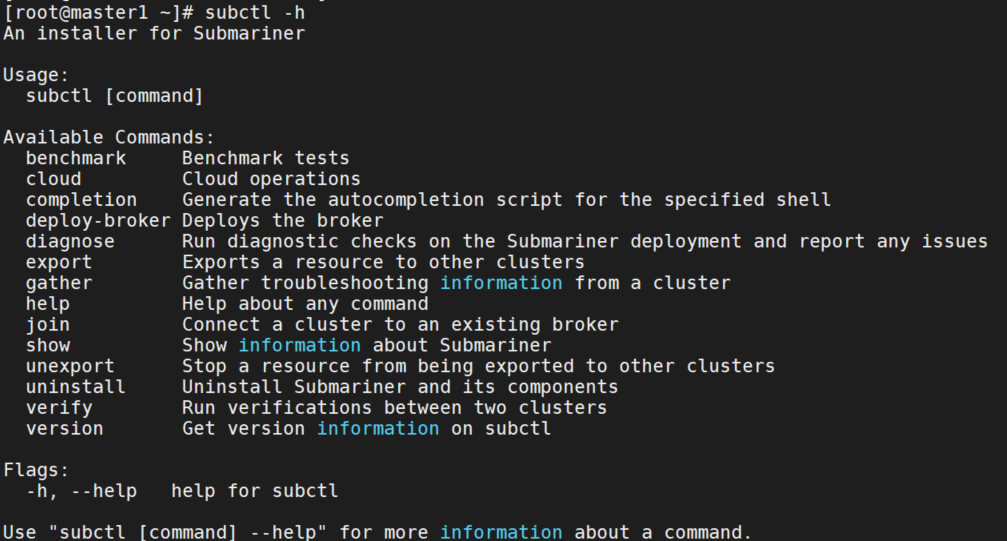

Deploy Submariner

Install subctl

curl -Ls https://get.submariner.io | bash

export PATH=$PATH:~/.local/bin

echo export PATH=\$PATH:~/.local/bin >> ~/.profile

# Alternatively, install a specific release (v0.14.3) manually:

# wget https://github.com/submariner-io/releases/releases/download/v0.14.3/subctl-v0.14.3-linux-amd64.tar.xz

# tar xvJf subctl-v0.14.3-linux-amd64.tar.xz

# cd subctl-v0.14.3

# cp subctl-v0.14.3-linux-amd64 /usr/bin/subctl

Deploy the Broker

Use member1 as the Broker, with Globalnet enabled. First, collect the kubeconfig files of all three clusters on master1:

cp /root/.kube/config kubeconfig.member1

scp 172.16.255.181:/root/.kube/config kubeconfig.member2

scp 172.16.255.182:/root/.kube/config kubeconfig.member3

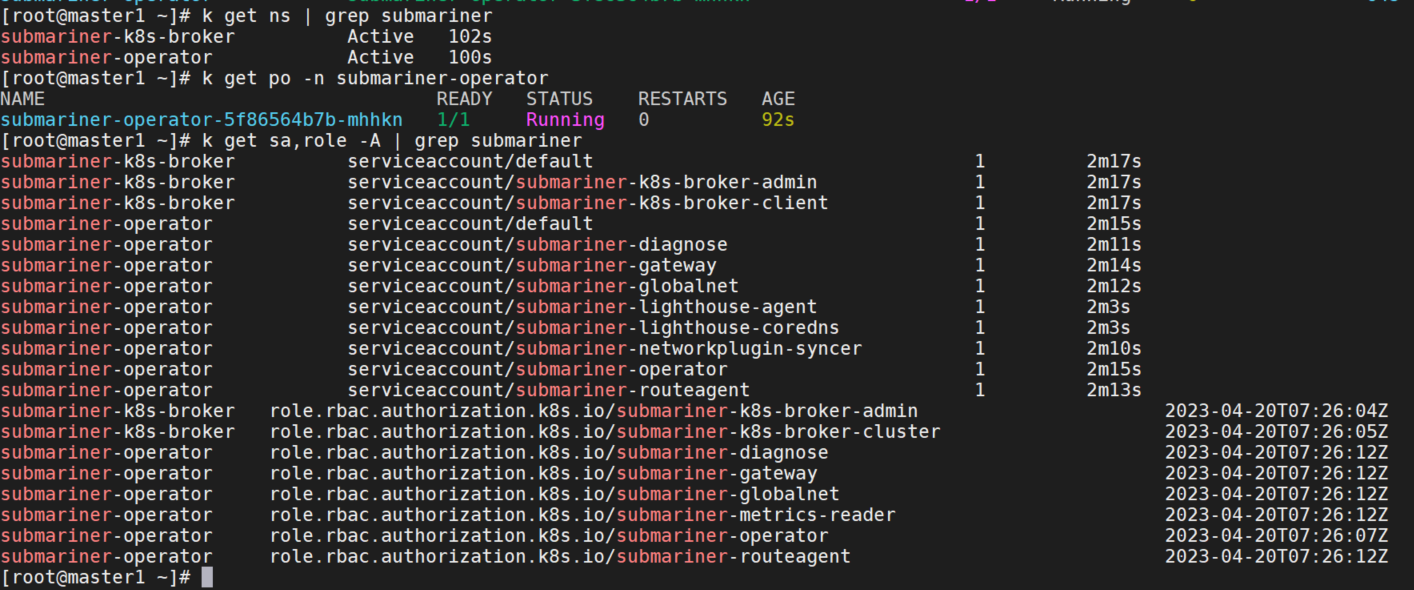

subctl deploy-broker --kubeconfig kubeconfig.member1 --globalnet

Label the gateway nodes

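When Submariner joins a cluster to the Broker with the subctl join command, it selects the node that hosts the gateway based on node labels. By default, Submariner uses worker nodes as gateways. If there are no worker nodes, no gateway is installed unless a node is manually labeled as a gateway. Each cluster here is a single node with no worker nodes, so that node must be labeled as the gateway. By default, the node name is the hostname. (In the commands below, k is a shell alias for kubectl.)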
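If the kubeconfig files collected earlier are all on master1, the three label commands that follow can also be run from there in a single loop. This is a minimal sketch that assumes the kubeconfig.memberN / masterN naming used in this environment. label_gateways is a hypothetical helper, and --overwrite only makes re-runs harmless:

```shell
# Label the single node of each member cluster as the Submariner gateway.
# Assumes kubeconfig.member1..3 in the current directory and node names master1..3.
label_gateways() {
  local i
  for i in 1 2 3; do
    kubectl --kubeconfig "kubeconfig.member$i" label node "master$i" \
      submariner.io/gateway=true --overwrite || return 1
  done
}

# Usage: label_gateways
```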

k label node master1 submariner.io/gateway=true

k label node master2 submariner.io/gateway=true

k label node master3 submariner.io/gateway=true

Join the Broker

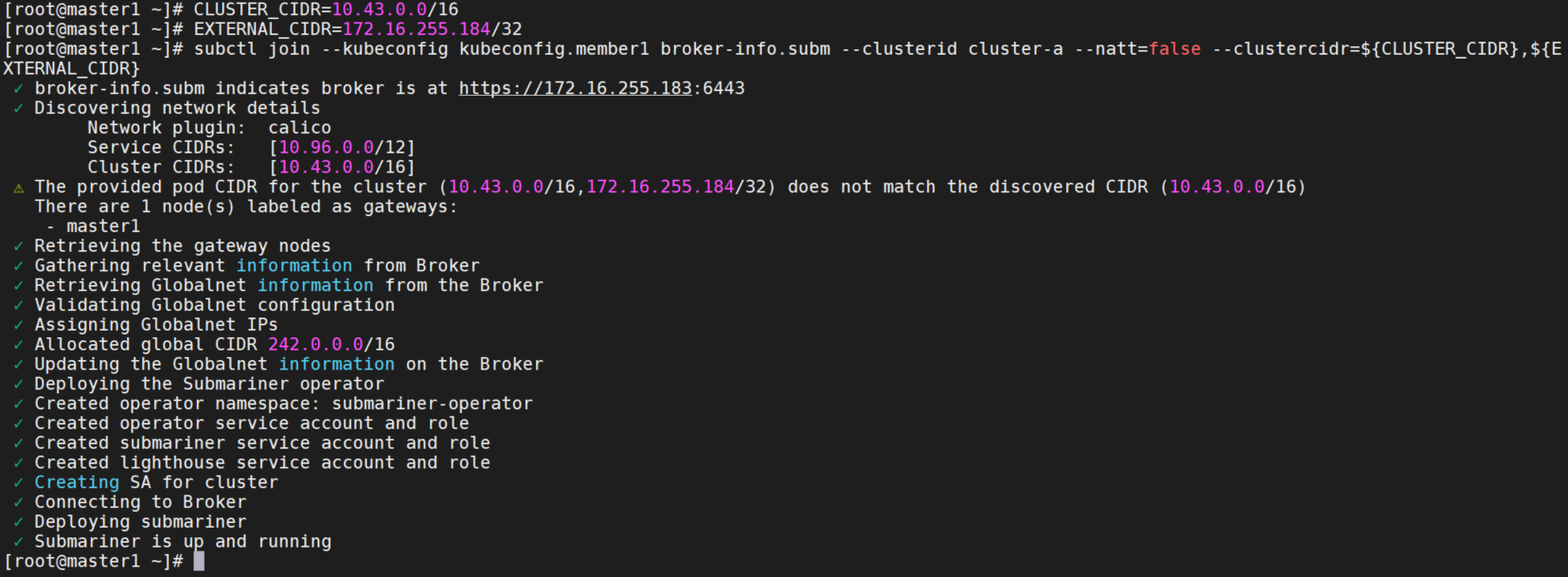

Join master1 to the Broker, adding the external CIDR to the cluster CIDR:

CLUSTER_CIDR=10.43.0.0/16

EXTERNAL_CIDR=172.16.255.184/32

subctl join --kubeconfig kubeconfig.member1 broker-info.subm --clusterid cluster-a --natt=false --clustercidr=${CLUSTER_CIDR},${EXTERNAL_CIDR}

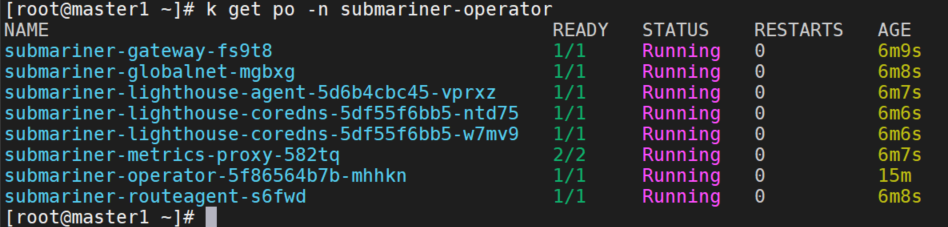

k get po -n submariner-operator

Join master2 to the Broker

subctl join --kubeconfig kubeconfig.member2 broker-info.subm --clusterid cluster-b --natt=false

Join master3 to the Broker

subctl join --kubeconfig kubeconfig.member3 broker-info.subm --clusterid cluster-c --natt=false

echo "source <(subctl completion bash)" >> /root/.bashrc

source /root/.bashrc

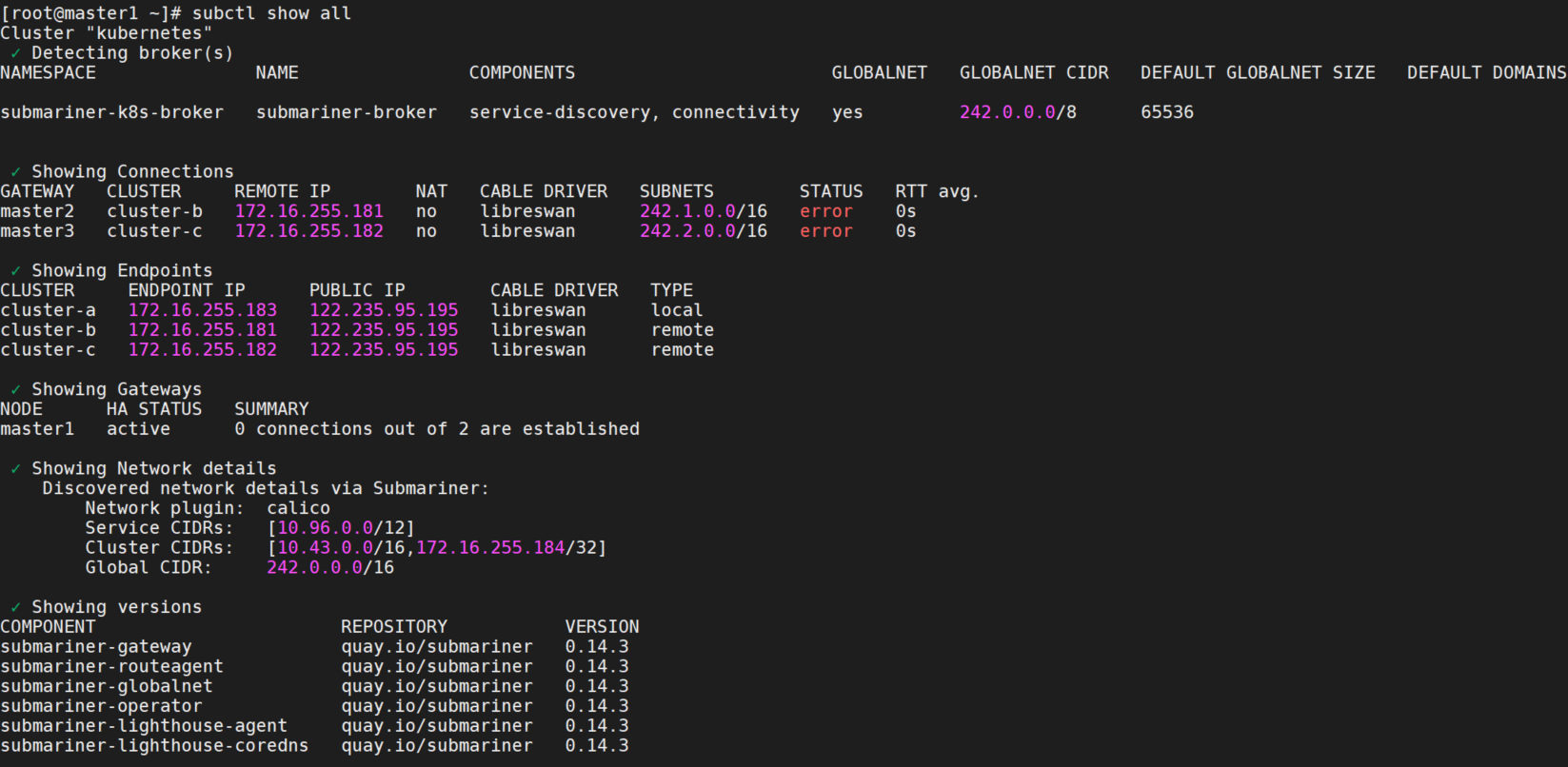

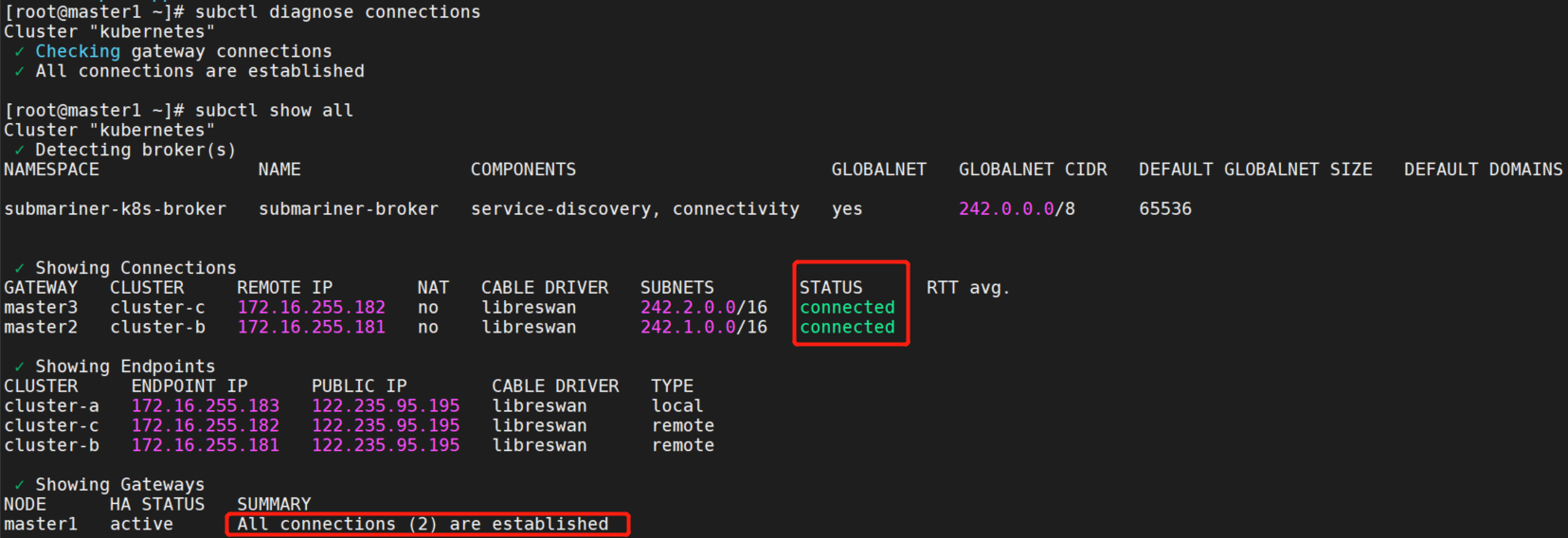

subctl show all

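The output shows:

- The broker name is submariner-broker, GLOBALNET is enabled, and the GLOBALNET CIDR is 242.0.0.0/8.

- The current Connections are to master2 and master3, the CABLE DRIVER is libreswan, and their subnets are 242.1.0.0/16 and 242.2.0.0/16 respectively.

- Endpoints: cluster-a is local, and cluster-b and cluster-c are remote. The PUBLIC IP is 122.235.95.195.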

Deploy the DNS server

Deploy a DNS server on cluster-a for the non-cluster host 172.16.255.184.

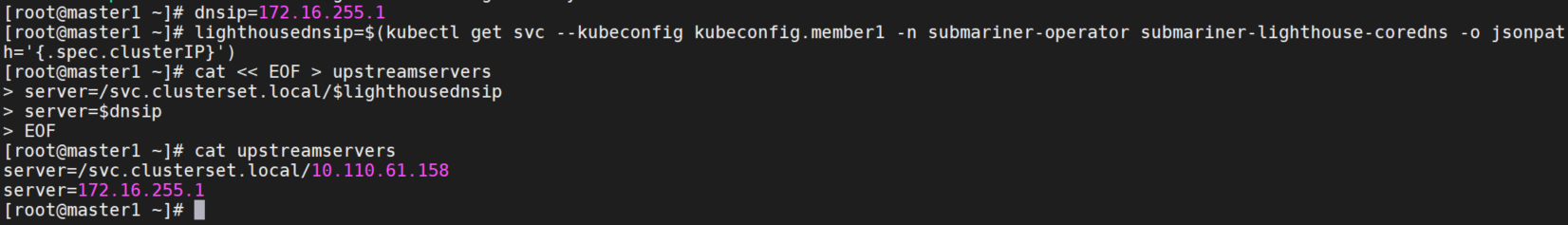

Create a list of upstream DNS servers in a file named upstreamservers. dnsip is the node's DNS server IP, that is, the nameserver in /etc/resolv.conf.

dnsip=172.16.255.1

lighthousednsip=$(kubectl get svc --kubeconfig kubeconfig.member1 -n submariner-operator submariner-lighthouse-coredns -o jsonpath='{.spec.clusterIP}')

cat << EOF > upstreamservers

server=/svc.clusterset.local/$lighthousednsip

server=$dnsip

EOF
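Instead of hard-coding dnsip, you can read it from the first nameserver line of a resolv.conf. This sketch assumes it runs on a host whose /etc/resolv.conf lists the intended upstream server first. make_upstreamservers is a hypothetical helper, and its output has the same two lines as the heredoc above:

```shell
# Print the dnsmasq upstream list: clusterset.local queries go to Lighthouse,
# everything else goes to the first nameserver found in a resolv.conf.
# $1 = Lighthouse CoreDNS ClusterIP, $2 = resolv.conf path (default /etc/resolv.conf)
make_upstreamservers() {
  local lighthouse_ip=$1 resolv=${2:-/etc/resolv.conf} dnsip
  dnsip=$(awk '$1 == "nameserver" { print $2; exit }' "$resolv")
  if [ -z "$dnsip" ]; then
    echo "no nameserver found in $resolv" >&2
    return 1
  fi
  printf 'server=/svc.clusterset.local/%s\nserver=%s\n' "$lighthouse_ip" "$dnsip"
}

# Usage: make_upstreamservers "$lighthousednsip" > upstreamservers
```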

Create the ConfigMap

export KUBECONFIG=kubeconfig.member1

kubectl create configmap external-dnsmasq -n submariner-operator --from-file=upstreamservers

Create the DNS server (dns.yaml):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns-cluster-a
  namespace: submariner-operator
  labels:
    app: external-dns-cluster-a
spec:
  replicas: 1
  selector:
    matchLabels:
      app: external-dns-cluster-a
  template:
    metadata:
      labels:
        app: external-dns-cluster-a
    spec:
      containers:
      - name: dnsmasq
        image: registry.access.redhat.com/ubi8/ubi-minimal:latest
        ports:
        - containerPort: 53
        command: [ "/bin/sh", "-c", "microdnf install -y dnsmasq; ln -s /upstreamservers /etc/dnsmasq.d/upstreamservers; dnsmasq -k" ]
        securityContext:
          capabilities:
            add: ["NET_ADMIN"]
        volumeMounts:
        - name: upstreamservers
          mountPath: /upstreamservers
      volumes:
      - name: upstreamservers
        configMap:
          name: external-dnsmasq
---
apiVersion: v1
kind: Service
metadata:
  namespace: submariner-operator
  name: external-dns-cluster-a
spec:
  ports:
  - name: udp
    port: 53
    protocol: UDP
    targetPort: 53
  selector:
    app: external-dns-cluster-a

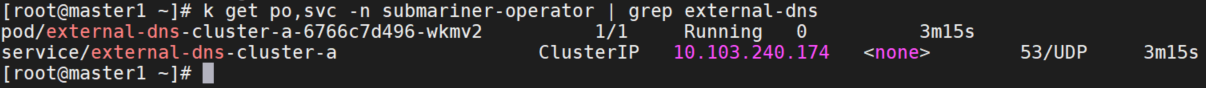

k apply -f dns.yaml

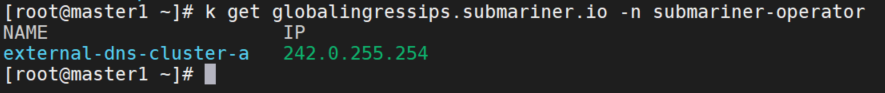

Assign a global ingress IP

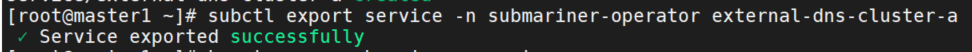

subctl export service -n submariner-operator external-dns-cluster-a

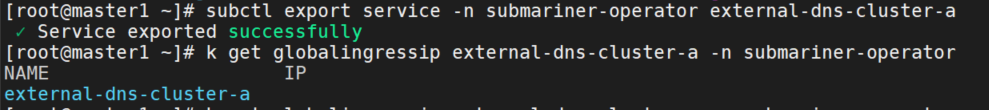

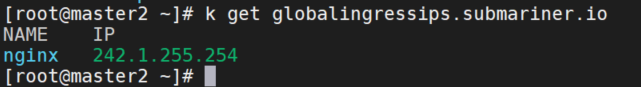

Check the global ingress IP

k --kubeconfig kubeconfig.member1 get globalingressip external-dns-cluster-a -n submariner-operator

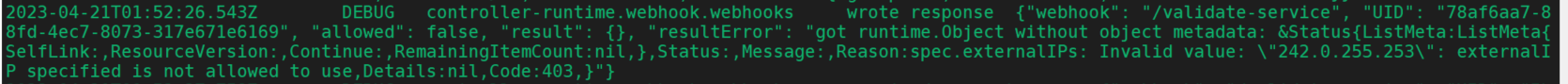

The IP here is empty. Running the diagnostic command

subctl diagnose service-discovery

reports the error: "error creating the internal Service submariner-operator/submariner-ouajjylqjq3zjzzktlytsczndaheiwct: admission webhook \"validate.webhook.svc\" denied the request: spec.externalIPs: Invalid value: \"242.0.255.253\": externalIP specified is not allowed to use"

This is a configuration problem in the externalip-webhook. allowed-usernames must include system:serviceaccount:submariner-operator:submariner-globalnet.

cd externalip-webhook-master

vim config/webhook/webhook.yaml

args:
  - --allowed-external-ip-cidrs=10.0.0.0/8,242.0.0.0/8
  # - --allowed-external-ip-cidrs=10.0.0.0/8,242.1.0.0/16
  - --allowed-usernames=system:admin,system:serviceaccount:kube-system:default,system:serviceaccount:submariner-operator:submariner-globalnet

make deploy IMG=externalip-webhook:v1

Redeploy the DNS server

k delete -f dns.yaml

k delete serviceexports.multicluster.x-k8s.io external-dns-cluster-a -n submariner-operator

k apply -f dns.yaml

subctl export service -n submariner-operator external-dns-cluster-a

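k --kubeconfig kubeconfig.member1 get globalingressip external-dns-cluster-a -n submariner-operator

This reported the error "242.0.255.253" externalIP specified is not allowed to use. The cause was that allowed-external-ip-cidrs used the range 242.1.0.0/16, which does not contain 242.0.255.253. Changing it to 242.0.0.0/16 fixes the error. The args above use the wider range 242.0.0.0/8, which also covers this address.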
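The failure is a CIDR membership problem: the webhook rejects an external IP that falls outside every allowed CIDR. The sketch below reproduces that check with POSIX shell arithmetic, so you can test a range before redeploying the webhook. ip_in_cidrs and ip_to_int are hypothetical helpers. The webhook's own matching code is assumed to behave this way, not copied from it:

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs=$IFS
  IFS=.
  set -- $1          # split a.b.c.d into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if IPv4 address $1 lies in any CIDR of the comma-separated list $2,
# e.g. the value passed to --allowed-external-ip-cidrs.
ip_in_cidrs() {
  local cidr net bits mask ipn netn
  ipn=$(ip_to_int "$1")
  for cidr in $(echo "$2" | tr ',' ' '); do
    net=${cidr%/*}
    bits=${cidr#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    netn=$(ip_to_int "$net")
    if [ $(( ipn & mask )) -eq $(( netn & mask )) ]; then
      return 0
    fi
  done
  return 1
}

# Usage: ip_in_cidrs 242.0.255.253 "10.0.0.0/8,242.1.0.0/16" || echo "would be rejected"
```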

The IP is now allocated successfully.

Set up the non-cluster host

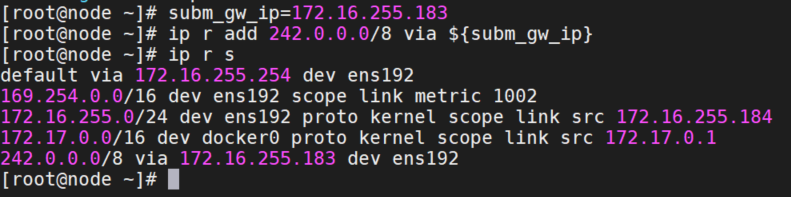

Add a route

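On node, add a route for the global CIDR. Note that subm_gw_ip is the IP of a cluster gateway node on the same subnet as the host. In this guide's example, it is cluster-a's node IP. 242.0.0.0/8 is the default globalCIDR.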

subm_gw_ip=172.16.255.183

ip r add 242.0.0.0/8 via ${subm_gw_ip}

ip r s
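A route added with ip r add does not survive a reboot, which is why the next step writes it to route-ens192. Appending with echo, however, adds a duplicate line every time the step is repeated. Below is a sketch of an idempotent variant. It assumes the RHEL/CentOS network-scripts layout and the ens192 interface of this environment. persist_route and the ROUTE_DIR override are hypothetical:

```shell
# Append "<cidr> via <gw> dev <dev>" to route-<dev> unless that exact line exists.
# $1 = gateway IP, $2 = interface (default ens192), $3 = CIDR (default 242.0.0.0/8)
persist_route() {
  local gw=$1 dev=${2:-ens192} cidr=${3:-242.0.0.0/8}
  local file="${ROUTE_DIR:-/etc/sysconfig/network-scripts}/route-$dev"
  local line="$cidr via $gw dev $dev"
  if ! grep -qxF "$line" "$file" 2>/dev/null; then
    echo "$line" >> "$file"
  fi
}

# Usage: persist_route "$subm_gw_ip"
```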

Make the route persistent

echo "242.0.0.0/8 via ${subm_gw_ip} dev ens192" >> /etc/sysconfig/network-scripts/route-ens192

Configure DNS

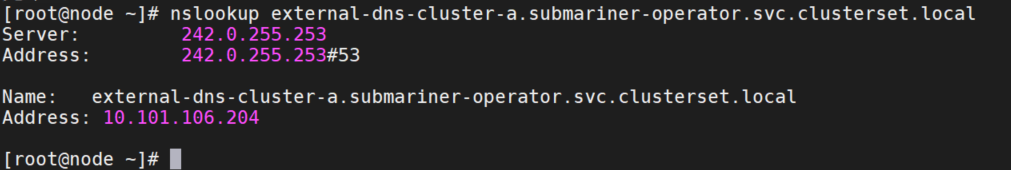

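echo "nameserver 242.0.255.253" >> /etc/resolv.conf

The resolver queries nameservers in the order they are listed. For clusterset.local names to resolve, this entry may therefore need to come before any existing nameserver line.

Verify resolution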

yum -y install bind-utils

nslookup external-dns-cluster-a.submariner-operator.svc.clusterset.local

Service tests

Verify access from the clusters to the external host

On node, run:

# Python 2.x:

python -m SimpleHTTPServer 80

# Python 3.x:

python -m http.server 80

On cluster-a, create a Service, an Endpoints object, and a ServiceExport for accessing node.

export KUBECONFIG=kubeconfig.member1

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: node
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Endpoints
metadata:
  name: node
subsets:
- addresses:
  - ip: 172.16.255.184
  ports:
  - port: 80
EOF

subctl export service -n default node
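The two manifests above follow a fixed pattern: a Service without a selector, plus an Endpoints object with the same name that points at the external host. This sketch prints both for any name, IP, and port, so another external host can be exposed the same way. external_service_yaml is a hypothetical helper, and the port is assumed to be TCP:

```shell
# Print a selector-less Service and its matching Endpoints for an external host.
# $1 = name, $2 = host IP, $3 = port (default 80)
external_service_yaml() {
  local name=$1 ip=$2 port=${3:-80}
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  ports:
  - protocol: TCP
    port: $port
    targetPort: $port
---
apiVersion: v1
kind: Endpoints
metadata:
  name: $name
subsets:
- addresses:
  - ip: $ip
  ports:
  - port: $port
EOF
}

# Usage: external_service_yaml node 172.16.255.184 80 | kubectl apply -f -
#        subctl export service -n default node
```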

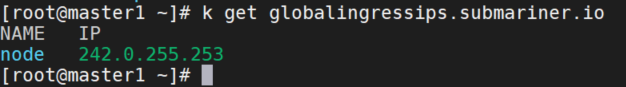

On cluster-a, check node's global ingress IP:

k get globalingressip node

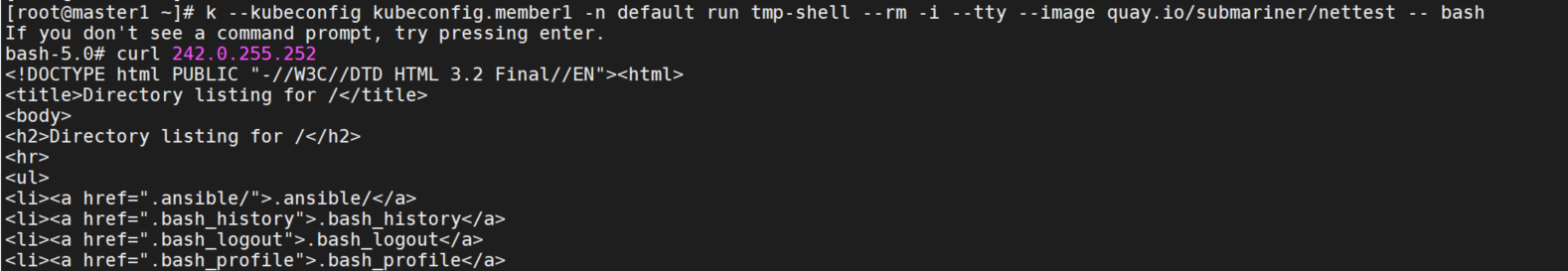

Verify access to node from the clusters

k --kubeconfig kubeconfig.member1 -n default run tmp-shell --rm -i --tty --image quay.io/submariner/nettest -- bash

curl 242.0.255.252

k --kubeconfig kubeconfig.member2 -n default run tmp-shell --rm -i --tty --image quay.io/submariner/nettest -- bash

curl 242.0.255.252

k --kubeconfig kubeconfig.member3 -n default run tmp-shell --rm -i --tty --image quay.io/submariner/nettest -- bash

curl 242.0.255.252

The node host is reachable from both member1 and member2.

The HTTP server log on node shows requests coming from the pods.

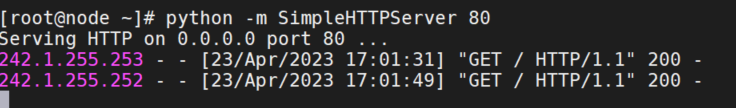

Notes

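- Globalnet currently does not support headless Services without selectors, so a regular (non-headless) Service without a selector has to be used. For details, see: https://github.com/submariner-io/submariner/issues/1537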

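- DNS resolution is currently not supported for Services without selectors, so the external host has to be accessed through its global IP. Alternatively, it can be made resolvable by manually creating EndpointSlices. For details, see: https://github.com/submariner-io/lighthouse/issues/603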

Verify access from the non-cluster host to a Deployment

Create a Deployment in cluster-b

k --kubeconfig kubeconfig.member2 -n default create deployment nginx --image=nginx:latest

k --kubeconfig kubeconfig.member2 -n default expose deployment nginx --port=80

subctl export service --namespace default nginx

Verify access from node. The request fails:

curl nginx.default.svc.clusterset.local

Troubleshooting

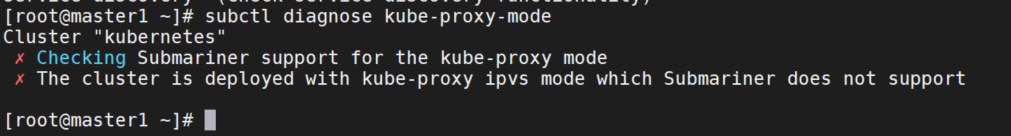

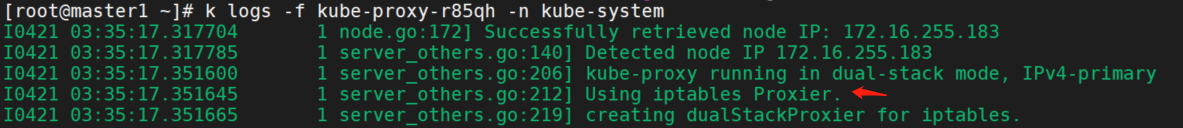

- 1. iptables mode

First, kube-proxy in these clusters runs in ipvs mode, which is not supported. It has to be changed to iptables.

k edit cm -n kube-system kube-proxy

mode: iptables

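# Delete the kube-proxy pod so it is recreated with the new mode (the pod name differs in each cluster)

k delete po kube-proxy-74brv -n kube-system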
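To check which mode kube-proxy is configured with before and after the edit, you can read the mode: field from the configuration text. This sketch assumes a kubeadm-style ConfigMap that stores the configuration under the config.conf key. An empty mode means kube-proxy's Linux default, which is iptables. proxy_mode is a hypothetical helper:

```shell
# Read a KubeProxyConfiguration from stdin and print its proxy mode,
# treating an empty or missing mode as the Linux default (iptables).
proxy_mode() {
  local mode
  mode=$(awk '$1 == "mode:" { gsub(/"/, "", $2); print $2; exit }')
  echo "${mode:-iptables}"
}

# Usage (assumed kubeadm layout):
# kubectl -n kube-system get cm kube-proxy -o jsonpath='{.data.config\.conf}' | proxy_mode
```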

- 2. Calico mode

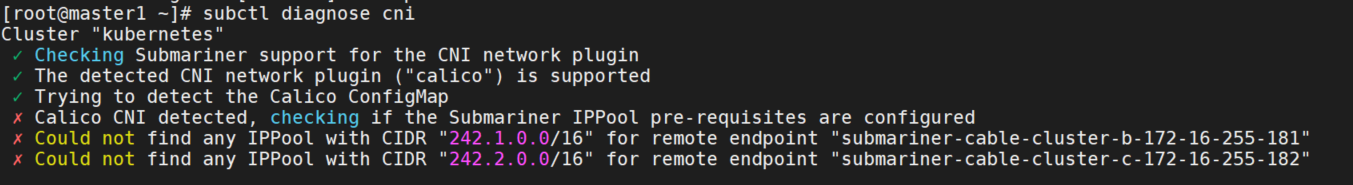

The CNI is Calico, currently running in its IPIP/BGP mode, and the gateway cannot connect to the other two gateways.

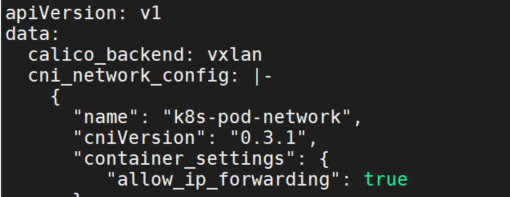

First, edit the ConfigMap and change the backend to vxlan:

k edit cm -n kube-system calico-config

calico_backend: vxlan

Then edit the DaemonSet to switch it to VXLAN mode:

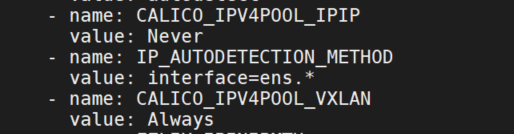

k edit ds -n kube-system calico-node

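# env of the calico-node container:
- name: CALICO_IPV4POOL_VXLAN
  value: "Always"
- name: CALICO_IPV4POOL_IPIP
  value: "Never"

# Remove the BIRD checks (-bird-live and -bird-ready below) from livenessProbe and readinessProbe. BGP is no longer used, so the bird process will not start, and leaving these checks in place makes the readiness/liveness checks fail on every node.
livenessProbe:
  exec:
    command:
    - /bin/calico-node
    - -felix-live
    - -bird-live
readinessProbe:
  exec:
    command:
    - /bin/calico-node
    - -bird-ready
    - -felix-ready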

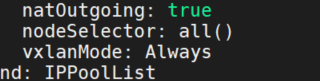

Finally, edit the IPPool:

k edit ippool default-ipv4-ippool

vxlanMode: Always

Confirm that the change took effect:

calicoctl get ippool --allow-version-mismatch -o yaml

Next, create the IPPools manually in each cluster.

Official documentation: https://submariner.io/operations/deployment/calico/

Create the following IPPools in cluster-a, cluster-b, and cluster-c respectively:

cluster-a:

# cat cluster-b.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-b
spec:
  cidr: 242.1.0.0/16
  natOutgoing: false
  disabled: true

# cat cluster-c.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-c
spec:
  cidr: 242.2.0.0/16
  natOutgoing: false
  disabled: true

# cat cluster-a-svc.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: clusterasvc
spec:
  cidr: 10.96.0.0/12
  natOutgoing: false
  disabled: true

cluster-b:

# cat cluster-a.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-a
spec:
  cidr: 242.0.0.0/16
  natOutgoing: false
  disabled: true

# cat cluster-c.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-c
spec:
  cidr: 242.2.0.0/16
  natOutgoing: false
  disabled: true

# cat cluster-b-svc.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: clusterbsvc
spec:
  cidr: 10.96.0.0/12
  natOutgoing: false
  disabled: true

cluster-c:

# cat cluster-a.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-a
spec:
  cidr: 242.0.0.0/16
  natOutgoing: false
  disabled: true

# cat cluster-b.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-b
spec:
  cidr: 242.1.0.0/16
  natOutgoing: false
  disabled: true

# cat cluster-c-svc.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: clustercsvc
spec:
  cidr: 10.96.0.0/12
  natOutgoing: false
  disabled: true

Run subctl diagnose cni again. The CNI output no longer shows any errors.

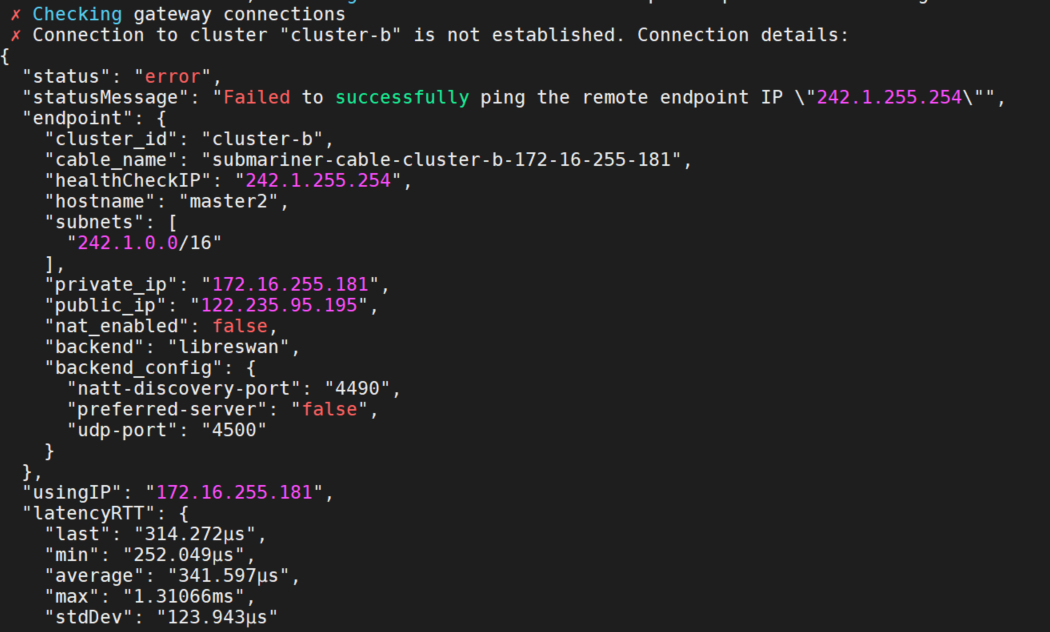

- 3. subctl diagnose connections reports an error

Failed to successfully ping the remote endpoint IP \"242.1.255.254\"

See this issue: https://github.com/submariner-io/submariner/issues/1583

Add the --health-check=false parameter and join each cluster again:

subctl join --kubeconfig kubeconfig.member2 broker-info.subm --clusterid cluster-b --natt=false --health-check=false

subctl join --kubeconfig kubeconfig.member3 broker-info.subm --clusterid cluster-c --natt=false --health-check=false

CLUSTER_CIDR=10.43.0.0/16

EXTERNAL_CIDR=172.16.255.184/32

subctl join --kubeconfig kubeconfig.member1 broker-info.subm --clusterid cluster-a --natt=false --clustercidr=${CLUSTER_CIDR},${EXTERNAL_CIDR} --health-check=false

Run subctl diagnose connections again. It no longer reports any errors.

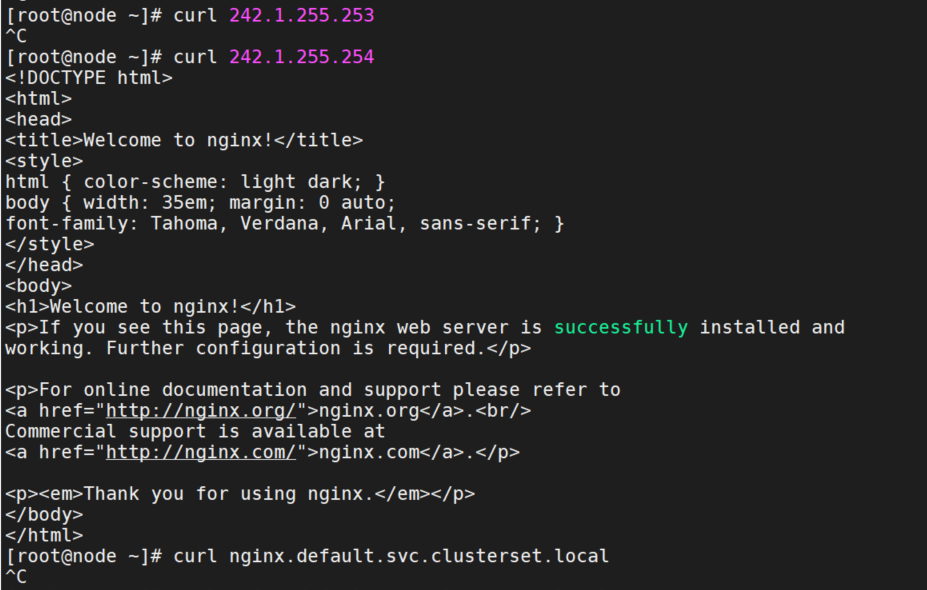

- 4. curl to the nginx Service IP works, but curl to its domain name fails

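Checking nginx's globalingressip shows that it has changed to 242.1.255.254, instead of the earlier 242.1.255.253.

curl to the IP works, but curl to the domain name fails.

Checking the globalingressip of the DNS Service on cluster-a shows that it is now 242.0.255.254.

On node, update resolv.conf to match, that is, nameserver 242.0.255.254.

curl the domain name again, and it now works. The cause was the DNS configuration: resolv.conf no longer pointed at the DNS server deployed on cluster-a.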
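The DNS Service's global ingress IP can change when it is exported again, so the nameserver entry on node has to follow it. This sketch swaps one nameserver IP for another in place. replace_nameserver is a hypothetical helper. The jsonpath field in the usage lines (.status.allocatedIP) is an assumption about the GlobalIngressIP status:

```shell
# Replace "nameserver <old>" with "nameserver <new>" in a resolv.conf, keeping order.
# $1 = old IP, $2 = new IP, $3 = file (default /etc/resolv.conf)
replace_nameserver() {
  local old=$1 new=$2 file=${3:-/etc/resolv.conf} tmp
  tmp=$(mktemp) || return 1
  awk -v old="$old" -v new="$new" \
    '$1 == "nameserver" && $2 == old { $2 = new } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file"   # write back in place, keeping the original file
  rm -f "$tmp"
}

# Usage: look up the new IP on master1, then run the replacement on node:
# kubectl --kubeconfig kubeconfig.member1 -n submariner-operator \
#   get globalingressip external-dns-cluster-a -o jsonpath='{.status.allocatedIP}'
# replace_nameserver 242.0.255.253 242.0.255.254
```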

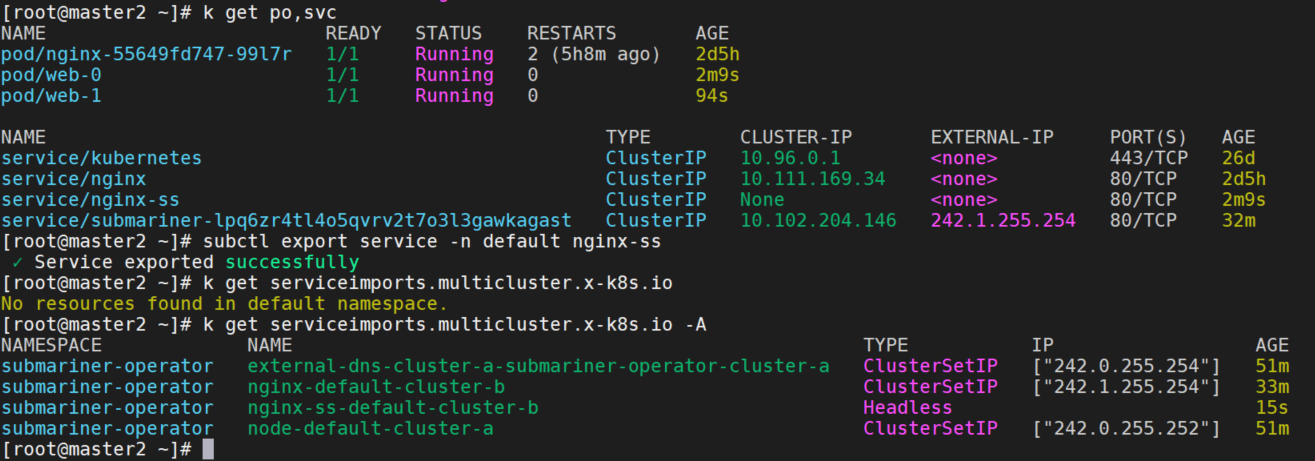

Verify access from the non-cluster host to a StatefulSet

Create a web StatefulSet that uses nginx-ss as its headless Service. Create web.yaml as follows:

apiVersion: v1
kind: Service
metadata:
  name: nginx-ss
  labels:
    app.kubernetes.io/instance: nginx-ss
    app.kubernetes.io/name: nginx-ss
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app.kubernetes.io/instance: nginx-ss
    app.kubernetes.io/name: nginx-ss
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx-ss"
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/instance: nginx-ss
      app.kubernetes.io/name: nginx-ss
  template:
    metadata:
      labels:
        app.kubernetes.io/instance: nginx-ss
        app.kubernetes.io/name: nginx-ss
    spec:
      containers:
      - name: nginx-ss
        image: nginx:stable-alpine
        ports:
        - containerPort: 80
          name: web

k --kubeconfig kubeconfig.member2 -n default apply -f web.yaml

subctl export service -n default nginx-ss

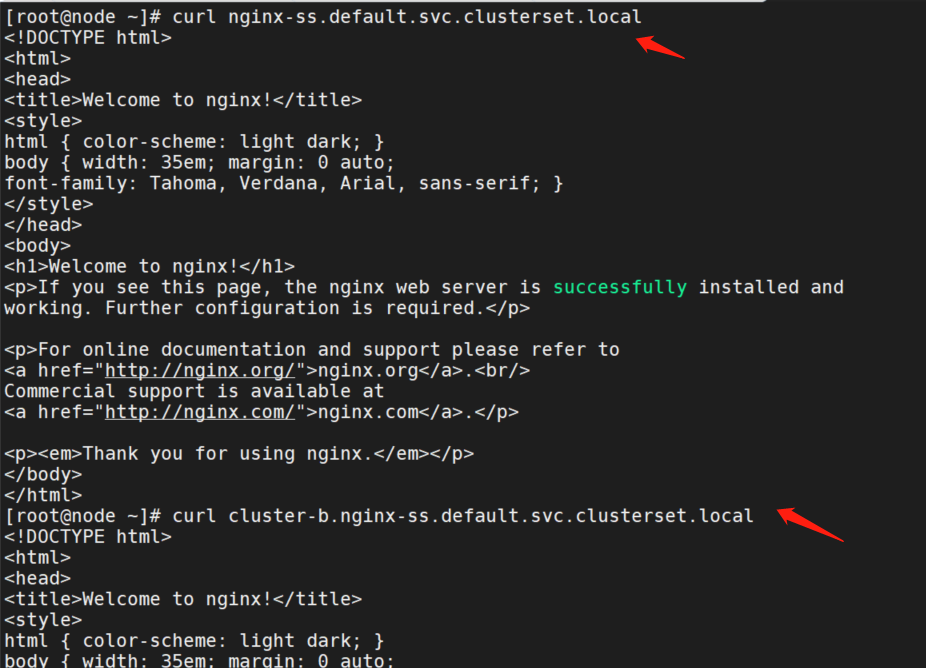

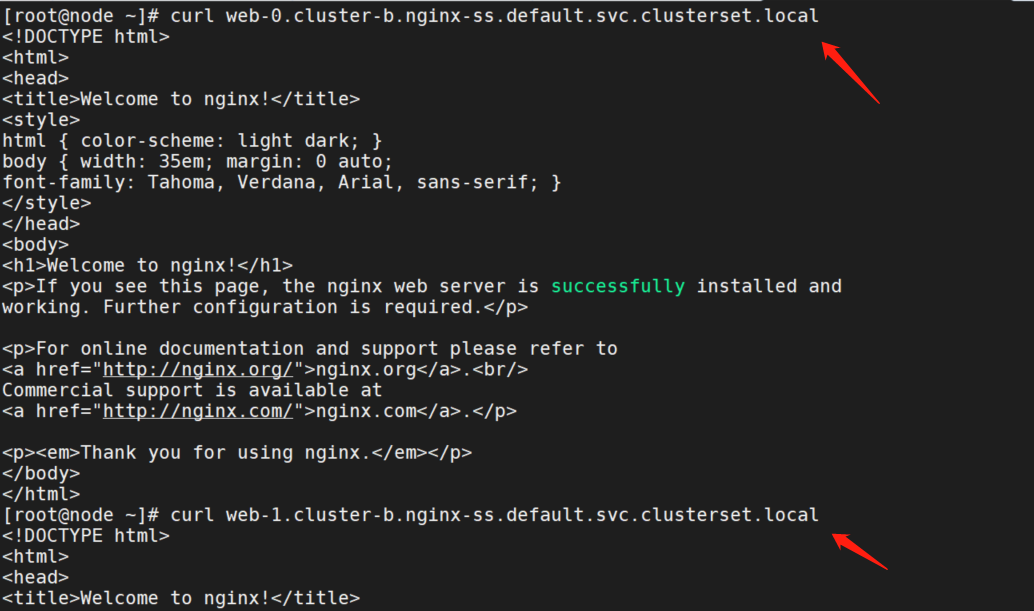

Access nginx-ss from node:

curl nginx-ss.default.svc.clusterset.local

curl cluster-b.nginx-ss.default.svc.clusterset.local

curl web-0.cluster-b.nginx-ss.default.svc.clusterset.local

curl web-1.cluster-b.nginx-ss.default.svc.clusterset.local

All of these requests succeed.

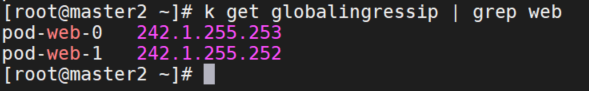

Verify the source IP of StatefulSet traffic

Confirm the global egress IP of each pod managed by the StatefulSet:

From the cluster:

k --kubeconfig kubeconfig.member2 get globalingressip | grep web

From node:

nslookup web-0.cluster-b.nginx-ss.default.svc.clusterset.local

nslookup web-1.cluster-b.nginx-ss.default.svc.clusterset.local

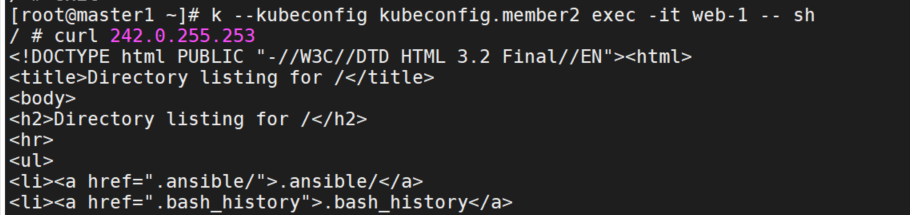

Verify that the source IP of each request from each pod to node matches that pod's global egress IP:

# Start an HTTP server on node

python -m SimpleHTTPServer 80   # Python 2.x; on Python 3.x use: python -m http.server 80

# Run exec from cluster-a, or directly on cluster-b

k --kubeconfig kubeconfig.member2 exec -it web-0 -- sh

curl 242.0.255.253

k --kubeconfig kubeconfig.member2 exec -it web-1 -- sh

curl 242.0.255.253
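You can script this comparison on node. Python's HTTP server writes one log line per request to stderr, and each line starts with the client address. This sketch collects the client IPs from such a log and checks that each expected global IP appears. client_ips and all_ips_seen are hypothetical helpers, and the log is assumed to be captured with 2> access.log:

```shell
# Print the distinct client IPs from an http.server / SimpleHTTPServer log
# (file $1, or stdin when no file is given).
client_ips() {
  awk '{ print $1 }' "${1:--}" | sort -u
}

# Succeed only if every IP given after the log file appears as a client in it.
all_ips_seen() {
  local log=$1 ip
  shift
  for ip in "$@"; do
    awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$log" || return 1
  done
}

# Usage on node:
# python -m http.server 80 2> access.log
# all_ips_seen access.log <global IP of web-0> <global IP of web-1> && echo "source IPs match"
```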

This completes cross-cluster access between clusters that use identical pod and service CIDRs.