Preface

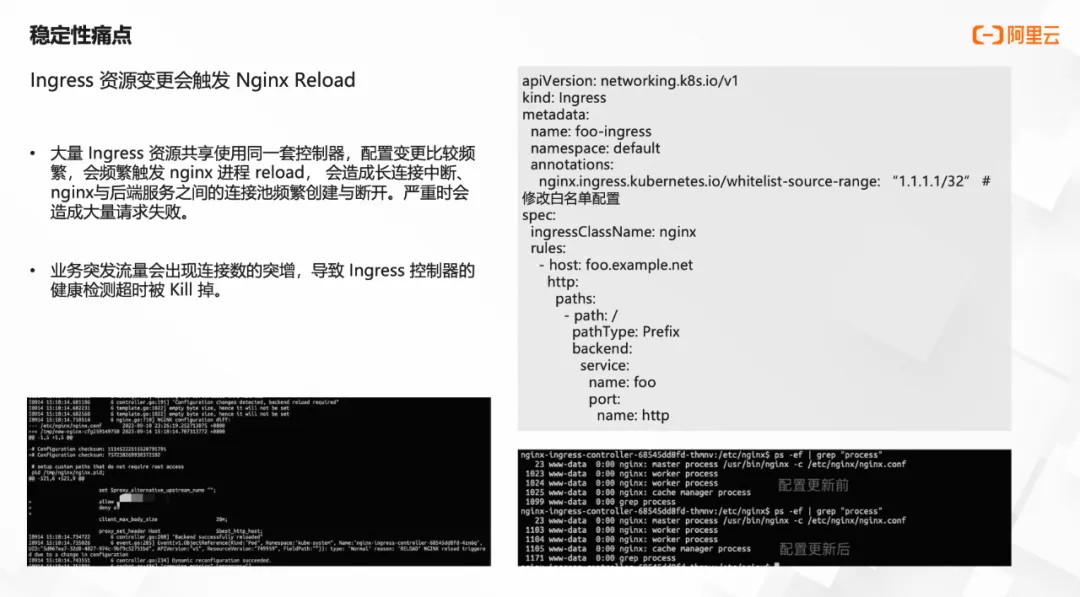

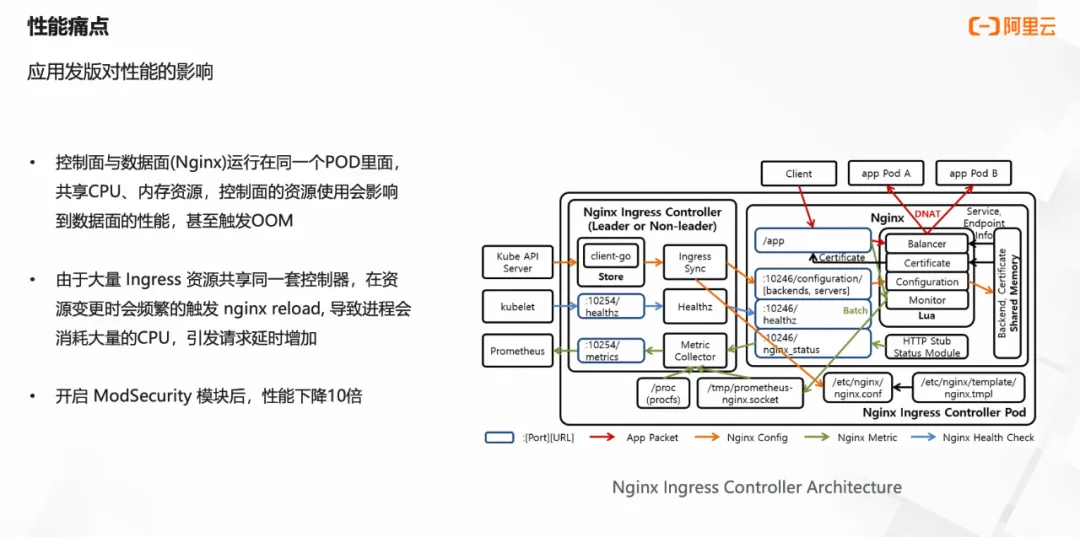

The original environment used ingress-nginx, which had a number of pain points, so the new environment adopted Higress from the start.

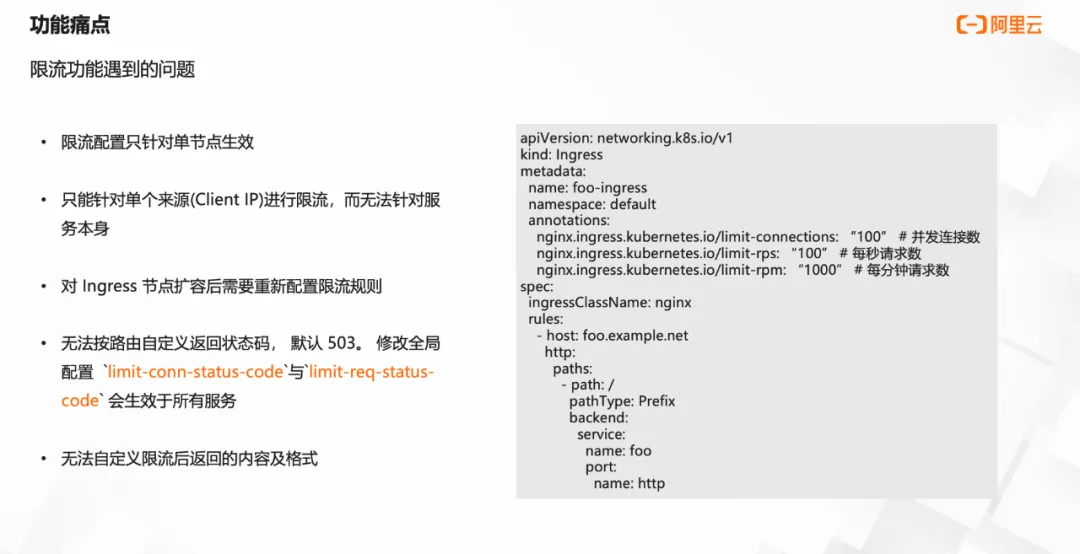

ingress-nginx also lacks service-level rate limiting. It can only rate-limit per client source IP, which does little to protect a backend service.

Steps

Migrating from ingress-nginx to Higress is straightforward, but there are a few small pitfalls.

Deployment

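First, deploy Higress. I deployed the open-source edition with Helm in a local environment.

```shell
helm repo add higress.cn https://higress.cn/helm-charts
helm upgrade --install higress -n higress-system higress.cn/higress --create-namespace --render-subchart-notes --set global.local=true --set global.o11y.enabled=false
```

With this deployment, the higress-gateway Service is created as type LoadBalancer. You can also change it manually to NodePort or ClusterIP, depending on how you want to reach it. The official documentation offers three options: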

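- Use a managed Kubernetes service from a cloud vendor, such as Alibaba Cloud ACK.

- Following the operations parameter reference, enable higress-core.gateway.hostNetwork so that Higress listens on the host's ports, then forward traffic to fixed machine IPs through another software or hardware load balancer.

- (Not recommended for production) Use the open-source load-balancing solution MetalLB.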

I went with the second option.
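As a possible alternative to editing the chart by hand, the switch named in the second option could be passed on the command line. The sketch below only assembles and prints such a command. It assumes the value path higress-core.gateway.hostNetwork quoted above is accepted by your chart version, and the function name higress_install_cmd is made up for illustration.

```shell
# Sketch: print an install command that enables hostNetwork via --set
# instead of editing values.yaml. Nothing is executed against the cluster.
# Assumption: higress-core.gateway.hostNetwork is the right value path
# for your chart version; check the chart before relying on it.
higress_install_cmd() {
  echo "helm upgrade --install higress higress.cn/higress" \
    "-n higress-system --create-namespace --render-subchart-notes" \
    "--set global.local=true --set global.o11y.enabled=false" \
    "--set higress-core.gateway.hostNetwork=true"
}

# Review the printed command before running it yourself.
higress_install_cmd
```

The steps that follow take the other route and edit the chart's values.yaml directly.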

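```shell
helm pull higress.cn/higress
tar xf higress-2.0.0.tgz
cd higress
vim charts/higress-core/values.yaml
```

```yaml
global:
  local: true
gateway:
  hostNetwork: true
```

Both the chart and the official documentation state that ingressClass defaults to higress. If it is set to nginx, the controller watches Ingress resources that have the nginx class or no class at all. If it is set to empty, the controller watches every Ingress in the cluster.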

```yaml
# IngressClass filters which ingress resources the higress controller watches.
# The default ingress class is higress.
# There are some special cases for special ingress class.
# 1. When the ingress class is set as nginx, the higress controller will watch ingress
# resources with the nginx ingress class or without any ingress class.
# 2. When the ingress class is set empty, the higress controller will watch all ingress
# resources in the k8s cluster.
ingressClass: "higress"
```

However, if you set this value to empty at install time, the install fails with an error saying that name must not be empty. The reason is that the chart also creates an IngressClass whose name is taken from this value.

```shell
cat higress/charts/higress-core/templates/ingressclass.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: {{ .Values.global.ingressClass }}
spec:
  controller: higress.io/higress-controller
```

So the value has to stay higress during installation. Once the install finishes, edit the arguments in the higress-controller YAML:

```yaml
spec:
  containers:
  - args:
    - serve
    - --gatewaySelectorKey=higress
    - --gatewaySelectorValue=higress-system-higress-gateway
    - --gatewayHttpPort=80
    - --gatewayHttpsPort=443
    # Set to empty
    - --ingressClass=
    - --enableAutomaticHttps=true
    - --automaticHttpsEmail=
```

Testing access

Requests to the domain through the higress-gateway Service IP and port succeeded, but the site could not be reached from a browser.

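My Kubernetes environment has a kube-vip. When I first added the hosts entry, I bound the domain to the kube-vip address, and neither a manually created Ingress nor a route created in higress-console was reachable. Then I remembered that higress-gateway runs with hostNetwork=true, and my higress-gateway pod was running on a worker node rather than on a master, so the gateway was not listening on the machine behind the VIP. After changing the hosts entry to that node's IP, access worked.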
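One way to confirm this kind of diagnosis without touching the hosts file is to point curl at a specific node IP. The following is a minimal sketch: the helper name curl_via_node, the domain demo.example.com, and the address 192.0.2.10 are placeholders, and the label app=higress-gateway is taken from the gateway manifest. By default it only prints the command; setting RUN=1 looks up the node of the first gateway pod and runs the probe.

```shell
# Sketch: build a curl command that sends a request for a domain straight
# to a given node IP on port 80, bypassing /etc/hosts and DNS.
curl_via_node() {
  _domain=$1
  _ip=$2
  printf '%s\n' "curl -sS -I --resolve ${_domain}:80:${_ip} http://${_domain}/"
}

# With RUN=1, look up the node that hosts the first gateway pod and probe it;
# otherwise just print an example command (placeholder domain and IP).
if [ "${RUN:-0}" = "1" ]; then
  node_ip=$(kubectl -n higress-system get pods -l app=higress-gateway \
    -o jsonpath='{.items[0].status.hostIP}')
  eval "$(curl_via_node demo.example.com "$node_ip")"
else
  curl_via_node demo.example.com 192.0.2.10
fi
```

If the probe against the node IP answers while the same request against the VIP does not, the gateway is simply not listening on the machine behind the VIP.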

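But this creates a problem: the pod may restart, and after a restart it can land on a different node, so the IP changes. Putting yet another load balancer in front of the nodes would defeat the purpose. If you pin the pod to one node instead, that node can run only a single higress-gateway pod. A second one fails to schedule with a NodePorts error, because ports 80 and 443 can be bound only once per node. Whenever that single pod restarts, no traffic can get in, which is a serious risk.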

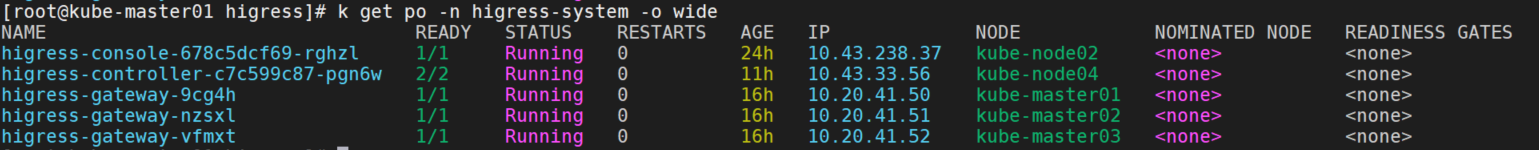

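So I changed higress-gateway from a Deployment to a DaemonSet that runs only on the master nodes. This gives high availability and also lets me use kube-vip, which ends up feeling like a pseudo LoadBalancer. Here is the DaemonSet YAML: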

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  annotations:
    meta.helm.sh/release-name: higress
    meta.helm.sh/release-namespace: higress-system
  labels:
    app: higress-gateway
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: higress-gateway
    app.kubernetes.io/version: 2.0.0
    helm.sh/chart: higress-core-2.0.0
    higress: higress-system-higress-gateway
  name: higress-gateway
  namespace: higress-system
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: higress-gateway
      higress: higress-system-higress-gateway
  template:
    metadata:
      annotations:
        prometheus.io/path: /stats/prometheus
        prometheus.io/port: "15020"
        prometheus.io/scrape: "true"
        sidecar.istio.io/inject: "false"
      creationTimestamp: null
      labels:
        app: higress-gateway
        higress: higress-system-higress-gateway
        sidecar.istio.io/inject: "false"
    spec:
      containers:
      - args:
        - proxy
        - router
        - --domain
        - $(POD_NAMESPACE).svc.cluster.local
        - --proxyLogLevel=warning
        - --proxyComponentLogLevel=misc:error
        - --log_output_level=all:info
        - --serviceCluster=higress-gateway
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: INSTANCE_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        - name: HOST_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.hostIP
        - name: SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.serviceAccountName
        - name: PROXY_XDS_VIA_AGENT
          value: "true"
        - name: ENABLE_INGRESS_GATEWAY_SDS
          value: "false"
        - name: JWT_POLICY
          value: third-party-jwt
        - name: ISTIO_META_HTTP10
          value: "1"
        - name: ISTIO_META_CLUSTER_ID
          value: Kubernetes
        - name: INSTANCE_NAME
          value: higress-gateway
        - name: LITE_METRICS
          value: "on"
        image: higress/gateway:2.0.0
        imagePullPolicy: IfNotPresent
        name: higress-gateway
        ports:
        - containerPort: 15020
          hostPort: 15020
          name: istio-prom
          protocol: TCP
        - containerPort: 15090
          hostPort: 15090
          name: http-envoy-prom
          protocol: TCP
        - containerPort: 80
          hostPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          hostPort: 443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 30
          httpGet:
            path: /healthz/ready
            port: 15021
            scheme: HTTP
          initialDelaySeconds: 1
          periodSeconds: 2
          successThreshold: 1
          timeoutSeconds: 3
        resources: {}
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsGroup: 1337
          runAsNonRoot: false
          runAsUser: 0
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /var/run/secrets/tokens
          name: istio-token
          readOnly: true
        - mountPath: /etc/istio/config
          name: config
        - mountPath: /var/run/secrets/istio
          name: istio-ca-root-cert
        - mountPath: /var/lib/istio/data
          name: istio-data
        - mountPath: /etc/istio/pod
          name: podinfo
        - mountPath: /etc/istio/proxy
          name: proxy-socket
      dnsPolicy: ClusterFirstWithHostNet
      hostNetwork: true
      # Pin the pods to master nodes
      nodeSelector:
        node-role.kubernetes.io/control-plane: ""
        kubernetes.io/os: linux
      # Tolerate the master / control-plane taints
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Equal"
        effect: "NoSchedule"
      - effect: NoSchedule
        key: node-role.kubernetes.io/control-plane
        operator: Equal
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: higress-gateway
      serviceAccountName: higress-gateway
      terminationGracePeriodSeconds: 30
      volumes:
      - name: istio-token
        projected:
          defaultMode: 420
          sources:
          - serviceAccountToken:
              audience: istio-ca
              expirationSeconds: 43200
              path: istio-token
      - configMap:
          defaultMode: 420
          name: higress-ca-root-cert
        name: istio-ca-root-cert
      - configMap:
          defaultMode: 420
          name: higress-config
        name: config
      - emptyDir: {}
        name: istio-data
      - emptyDir: {}
        name: proxy-socket
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
          - path: cpu-request
            resourceFieldRef:
              containerName: higress-gateway
              divisor: 1m
              resource: requests.cpu
          - path: cpu-limit
            resourceFieldRef:
              containerName: higress-gateway
              divisor: 1m
              resource: limits.cpu
        name: podinfo
```

After deployment, testing showed that domains served by Ingresses of class nginx and of class higress are both reachable.
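Since the high-availability argument rests on every master node running a gateway pod, it may be worth checking that explicitly. The sketch below compares two node lists; the helper name missing_gateway_nodes and the sample node names are made up, while the labels node-role.kubernetes.io/control-plane and app=higress-gateway come from the manifest above. The kubectl lookups run only when RUN=1 is set.

```shell
# Print every node from the first list that is absent from the second list.
# Both arguments are space-separated node names.
missing_gateway_nodes() {
  control_plane_nodes=$1
  gateway_pod_nodes=$2
  for node in $control_plane_nodes; do
    case " $gateway_pod_nodes " in
      *" $node "*) ;;        # a gateway pod runs on this node
      *) echo "$node" ;;     # no gateway pod on this node
    esac
  done
}

if [ "${RUN:-0}" = "1" ]; then
  nodes=$(kubectl get nodes -l node-role.kubernetes.io/control-plane \
    -o jsonpath='{.items[*].metadata.name}')
  pods=$(kubectl -n higress-system get pods -l app=higress-gateway \
    -o jsonpath='{.items[*].spec.nodeName}')
  missing_gateway_nodes "$nodes" "$pods"
else
  # Made-up node names, to show the output format only.
  missing_gateway_nodes "master1 master2 master3" "master1 master3"
fi
```

An empty result means every control-plane node hosts a gateway pod. With the sample input, the only line printed is master2.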

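Appendix

If you did not change the higress-controller ingressClass argument as described above, you can instead bulk-replace the ingressClass of the existing Ingresses from nginx to higress.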

```shell
cat moding.sh
```

```shell
#!/bin/bash

# Collect every Ingress that uses the nginx class, as namespace/name
ingresses=$(kubectl get ingress -A -o json | jq -r '.items[] | select(.spec.ingressClassName == "nginx") | "\(.metadata.namespace)/\(.metadata.name)"')

# Iterate over the collected Ingress resources
for ingress in $ingresses; do
  namespace=$(echo "$ingress" | cut -d "/" -f 1)
  name=$(echo "$ingress" | cut -d "/" -f 2)

  # Print the Ingress currently being patched
  echo "Patching Ingress $name in namespace $namespace"

  # Update ingressClassName with kubectl patch
  kubectl patch ingress "$name" -n "$namespace" --type='json' -p='[{"op": "replace", "path": "/spec/ingressClassName", "value": "higress"}]'
done
```
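Before patching every Ingress in the cluster, it can help to preview what would be changed. The following sketch reads namespace/name pairs on standard input and prints the corresponding kubectl patch command with --dry-run=client added, without running anything. The function name preview_patches and the two sample pairs are illustrative only.

```shell
# Sketch: read namespace/name pairs on stdin and print the kubectl patch
# command for each one, with --dry-run=client added. Nothing is executed.
preview_patches() {
  patch='[{"op": "replace", "path": "/spec/ingressClassName", "value": "higress"}]'
  while IFS=/ read -r namespace name; do
    [ -n "$name" ] || continue   # skip blank or malformed lines
    printf "kubectl patch ingress %s -n %s --type=json --dry-run=client -p='%s'\n" \
      "$name" "$namespace" "$patch"
  done
}

# Placeholder input; in practice, pipe in the namespace/name list
# produced by the jq filter in the script.
printf 'default/web\nshop/api\n' | preview_patches
```

Reviewing the printed commands first makes it easier to spot an Ingress that should stay on the nginx class.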