Introduction

Website: https://victoriametrics.com/

GitHub: https://github.com/VictoriaMetrics/VictoriaMetrics

Documentation: https://docs.victoriametrics.com/

VictoriaMetrics is a fast, cost-effective and scalable monitoring solution and time series database.

Features

Its main features are:

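- It can be used as long-term storage for Prometheus.

- It supports the Prometheus querying API, so Grafana can use it directly as a Prometheus data source.

- It implements MetricsQL, a PromQL-like query language that extends PromQL with improved functionality.

- It provides a global query view. Multiple Prometheus instances or any other data sources can ingest data into VictoriaMetrics, and all of that data can later be queried with a single query.

- It offers high performance and good vertical and horizontal scalability for both ingestion and querying. It outperforms InfluxDB and TimescaleDB by up to 20x.

- When dealing with millions of unique time series (high cardinality), it uses up to 10x less RAM than InfluxDB and up to 7x less than Prometheus, Thanos or Cortex.

- It offers a high compression ratio. Compared with TimescaleDB, up to 70x more data points fit into limited storage. Compared with Prometheus, Thanos or Cortex, up to 7x less storage space is needed.

- It is optimized for storage with high-latency IO and low IOPS, such as HDDs and network storage in AWS, Google Cloud, Microsoft Azure, etc.

- A single-node VictoriaMetrics can replace moderately sized clusters built with competing solutions such as Thanos, M3DB, Cortex, InfluxDB or TimescaleDB.

- Thanks to its storage architecture, it protects stored data from corruption on unclean shutdown (OOM, hardware reset or kill -9).

- Simple operation:
  - VictoriaMetrics consists of a single small executable without external dependencies.
  - All configuration is done via explicit command-line flags with reasonable defaults.
  - All data is stored in a single directory specified by the -storageDataPath command-line flag.
  - Backups can be made easily and quickly from instant snapshots with the vmbackup / vmrestore tools.

- It supports metrics scraping, ingestion and backfilling over various protocols: Prometheus exporters, the Prometheus remote write API, the Prometheus exposition format, the InfluxDB line protocol over HTTP, TCP and UDP, the Graphite plaintext protocol with tags, OpenTSDB put messages, HTTP OpenTSDB /api/put requests, JSON line format, arbitrary CSV data, native binary format, the DataDog agent or DogStatsD, the NewRelic infrastructure agent, and the OpenTelemetry metrics format.

- It supports metrics relabeling.

- It can handle high cardinality and high churn rate issues via the series limiter.

- It is well suited for large volumes of time series data from APM, Kubernetes, IoT sensors, connected cars, industrial telemetry, financial data and various enterprise workloads.

- It can store data on NFS-based storage such as Amazon EFS and Google Filestore.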
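The following minimal Python sketch illustrates the Prometheus exposition-format ingestion listed above. It renders samples as text lines and builds a POST request to the /api/v1/import/prometheus endpoint of a single-node instance, but does not send it. The address http://localhost:8428 and the metric demo_temperature are illustrative assumptions. If -httpAuth.* is enabled, the request also needs an Authorization header.

```python
from urllib.request import Request


def escape_label_value(value: str) -> str:
    """Escape backslash, double quote and newline as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_sample(name: str, labels: dict, value: float) -> str:
    """Render one sample as a Prometheus text exposition line (no timestamp)."""
    if not labels:
        return f"{name} {value}"
    pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
    return f"{name}{{{pairs}}} {value}"


def build_import_request(base_url: str, lines: list) -> Request:
    """Build (but do not send) a POST request for the Prometheus-format import endpoint."""
    body = ("\n".join(lines) + "\n").encode()
    return Request(f"{base_url}/api/v1/import/prometheus", data=body, method="POST")


if __name__ == "__main__":
    req = build_import_request(
        "http://localhost:8428",  # assumed single-node address
        [render_sample("demo_temperature", {"room": "lab"}, 21.5)],
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode(), end="")
    # Sending it would be: urllib.request.urlopen(req)
```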

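Architecture

For ingestion rates below one million data points per second, the single-node version is recommended over the cluster version. The single-node version scales well with the number of CPU cores, the amount of RAM and the available storage space. It is also easier to configure and operate than the cluster version, so think twice before choosing the cluster version. If you need multi-tenancy, use the cluster version.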
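Here, ingestion rate means samples per second. For pull-based scraping, a common back-of-the-envelope estimate is the number of active series divided by the scrape interval. This is an assumption that ignores pushed data and recording rules. The sketch below applies the estimate to hypothetical numbers and checks the result against the 1M/s guideline.

```python
def ingestion_rate(active_series: int, scrape_interval_s: float) -> float:
    """Rough samples-per-second estimate for pull-based scraping."""
    return active_series / scrape_interval_s


def single_node_recommended(rate: float, threshold: float = 1_000_000) -> bool:
    """Apply the '< 1M samples/s -> single node' guideline from the text."""
    return rate < threshold


if __name__ == "__main__":
    # Hypothetical environment: 2 million active series scraped every 30 s.
    rate = ingestion_rate(2_000_000, 30)
    print(f"{rate:.0f} samples/s, single node OK: {single_node_recommended(rate)}")
```

With the 30 s scrape interval used later in this guide, even 2 million active series come to only about 66,667 samples/s, far below the threshold.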

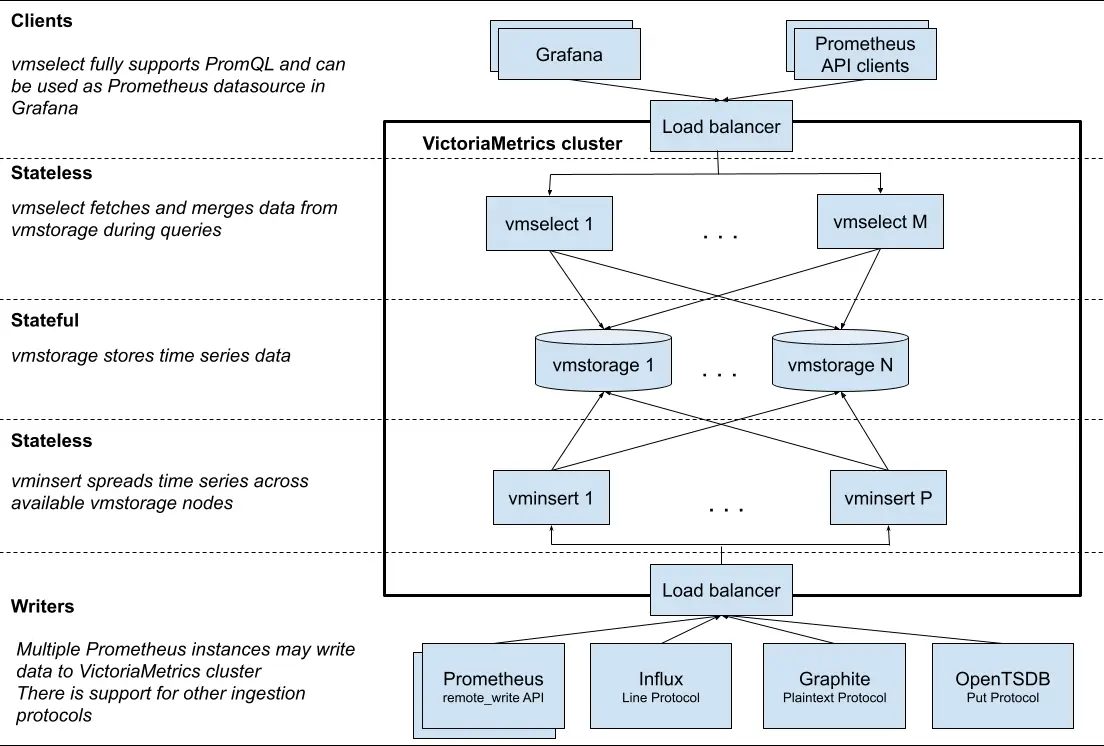

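A VictoriaMetrics cluster consists of the following services:

- vmstorage: stores the raw data and returns the queried data for a given time range and label filters. Default port 8482.

- vminsert: accepts ingested data and spreads it among the vmstorage nodes using consistent hashing over the metric name and all of its labels. Default port 8480.

- vmselect: executes incoming queries by fetching the required data from all configured vmstorage nodes. Although vmselect is stateless, it still needs some disk space (a few GB) for temporary caches. The cache location is set with the -cacheDataPath flag. Default port 8481.

The diagram above also shows that vminsert and vmselect are stateless, so they are easy to scale. Only vmstorage is stateful.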
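The small sketch below illustrates the consistent hashing vminsert relies on. It uses jump consistent hash, chosen here only for brevity. This is not claimed to be vminsert's exact algorithm, and the vmstorage node names are hypothetical. The sketch shows the key property: when a storage node is added, each series either stays on its node or moves to the new node, so only a fraction of series are reassigned.

```python
import hashlib


def series_key(metric: str, labels: dict) -> int:
    """Derive a 64-bit key from the metric name plus all labels (sorted for stability)."""
    canonical = metric + "{" + ",".join(f"{k}={v}" for k, v in sorted(labels.items())) + "}"
    digest = hashlib.blake2b(canonical.encode(), digest_size=8).digest()
    return int.from_bytes(digest, "little")


def jump_hash(key: int, num_buckets: int) -> int:
    """Jump consistent hash: map a 64-bit key to a bucket in [0, num_buckets)."""
    if num_buckets < 1:
        raise ValueError("num_buckets must be >= 1")
    b, j = -1, 0
    while j < num_buckets:
        b = j
        key = (key * 2862933555777941757 + 1) & 0xFFFFFFFFFFFFFFFF  # 64-bit LCG step
        j = int((b + 1) * (float(1 << 31) / float((key >> 33) + 1)))
    return b


if __name__ == "__main__":
    nodes = ["vmstorage-0", "vmstorage-1", "vmstorage-2"]  # hypothetical nodes
    key = series_key("node_cpu_seconds_total", {"instance": "10.0.0.1:9100", "mode": "idle"})
    print("series goes to", nodes[jump_hash(key, len(nodes))])
```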

VictoriaMetrics also has other components:

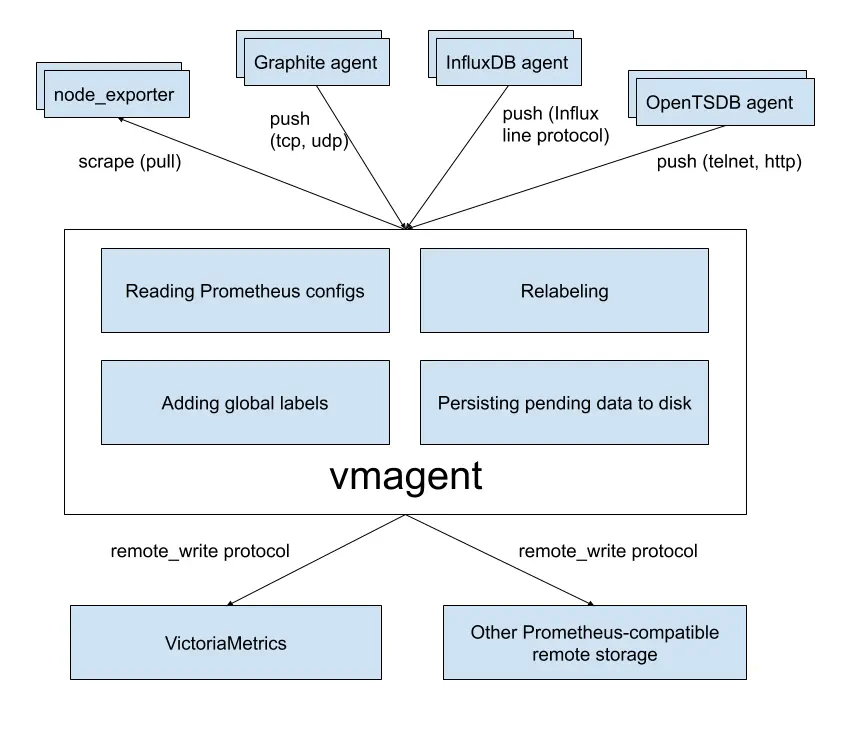

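- vmagent: a lightweight agent that collects metrics from various sources, relabels and filters them, and then stores them in VictoriaMetrics or in any other Prometheus-compatible storage that supports the remote_write protocol. Default port 8429. The diagrams below show the vmagent architecture: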

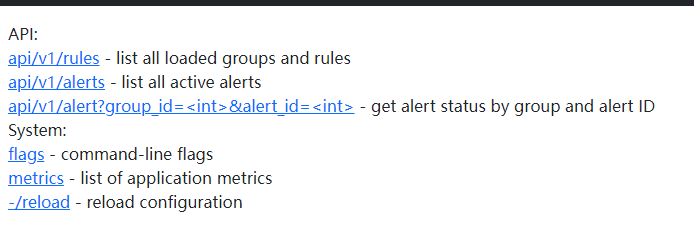

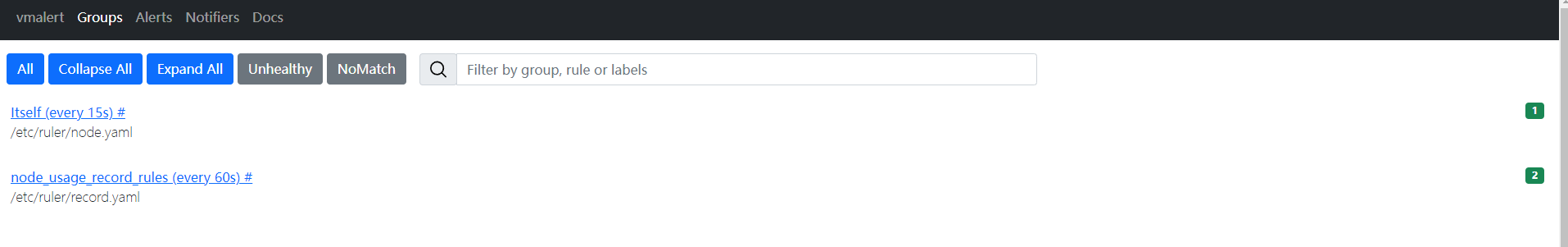

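- vmalert: a service that processes Prometheus-compatible alerting and recording rules. Default port 8880.

- vmalert-tool: a tool for validating alerting and recording rules.

- vmauth: an authorization proxy and load balancer optimized for VictoriaMetrics products.

- vmgateway: an authorization proxy with per-tenant rate limiting.

- vmctl: a tool for migrating and copying data between different storage systems.

- vmbackup, vmrestore and vmbackupmanager: tools for creating backups of VictoriaMetrics data and restoring from them.

- VictoriaLogs: a user-friendly, cost-efficient log database.

To replace Prometheus, you need vmagent and vmalert in addition to the vmstorage, vminsert and vmselect services described above.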

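Deployment

This guide replaces Prometheus with single-node VictoriaMetrics plus vmagent and vmalert. Single-node VictoriaMetrics can be started in several ways, for example as a binary, with Docker, Helm, the operator or Ansible. Here it is deployed from plain YAML manifests, because the Helm approach requires installing several charts, one per component.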

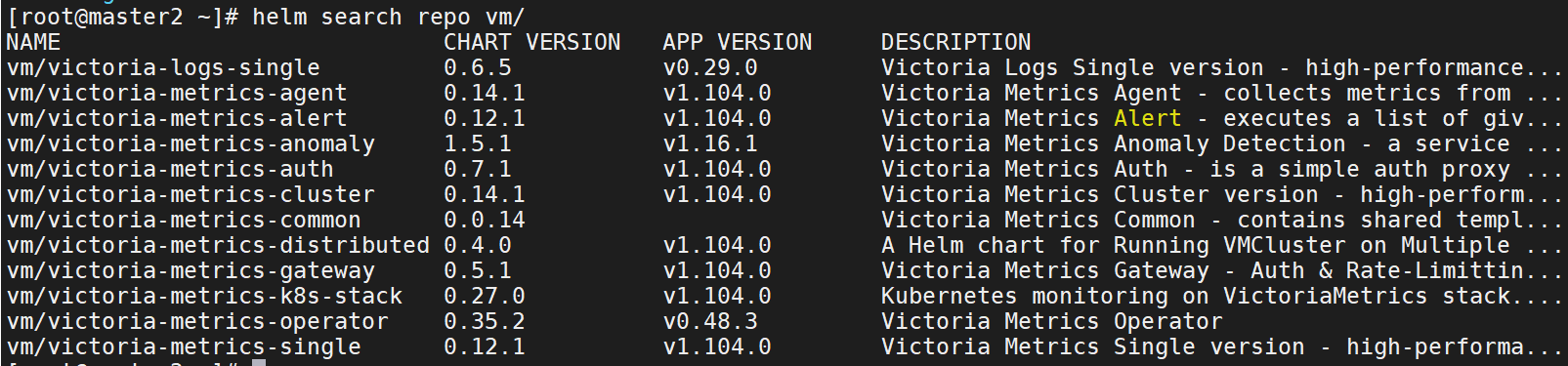

```shell
helm repo add vm https://victoriametrics.github.io/helm-charts/
helm repo update
helm search repo vm/
```

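First create the directories. /data/vm-single and /data/grafana are the local host storage paths used by the local PersistentVolumes below. Create them in advance on the node the volumes are pinned to (master2). The vm directory holds the YAML files.

```shell
mkdir -p /data/{vm-single,grafana}
mkdir vm
cd vm
```

vm-single

The single-node VictoriaMetrics server.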

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: vm
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: victoria-metrics
  namespace: vm
spec:
  selector:
    matchLabels:
      app: victoria-metrics
  template:
    metadata:
      labels:
        app: victoria-metrics
    spec:
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: victoria-metrics-data
      containers:
        - name: vm
          image: victoriametrics/victoria-metrics:latest
          imagePullPolicy: IfNotPresent
          args:
            - -storageDataPath=/var/lib/victoria-metrics-data
            - -retentionPeriod=30d
            - -httpAuth.username=admin
            - -httpAuth.password=Vmsingle
            - -search.maxQueryLen=655350
          ports:
            - containerPort: 8428
              name: http
          volumeMounts:
            - mountPath: /var/lib/victoria-metrics-data
              name: storage
          env:
            - name: TZ
              value: Asia/Shanghai
          resources:
            limits:
              cpu: '4'
              memory: 8Gi
            requests:
              cpu: 150m
              memory: 512Mi
---
apiVersion: v1
kind: Service
metadata:
  name: victoria-metrics
  namespace: vm
spec:
  type: NodePort
  ports:
    - port: 8428
  selector:
    app: victoria-metrics
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: victoria-metrics-data
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 100Gi
  storageClassName: fast
  local:
    path: /data/vm-single
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - master2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: victoria-metrics-data
  namespace: vm
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: fast
```

Parameter notes:

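- -retentionPeriod=30d: how long data is kept (30 days).

- -httpAuth.username=admin: HTTP basic auth username for the database. Change it as needed.

- -httpAuth.password=Vmsingle: HTTP basic auth password for the database. Change it as needed, and keep it in sync with the credentials used by vmagent, vmalert and Grafana.

- storageClassName: fast: in this setup, fast refers to local-path, i.e. local node storage.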
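Because -httpAuth.username / -httpAuth.password put HTTP basic auth in front of the instance, every client (vmagent, vmalert, Grafana, scripts) must send these credentials. The minimal Python sketch below builds an authenticated instant query against the Prometheus-compatible /api/v1/query API without sending it. It assumes the in-cluster Service address victoria-metrics.vm:8428 from the manifest above and the example credentials.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def basic_auth_header(user: str, password: str) -> str:
    """Return the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def build_query_request(base_url: str, user: str, password: str, query: str) -> Request:
    """Build (but do not send) an instant query request for /api/v1/query."""
    url = f"{base_url}/api/v1/query?{urlencode({'query': query})}"
    return Request(url, headers={"Authorization": basic_auth_header(user, password)})


if __name__ == "__main__":
    req = build_query_request("http://victoria-metrics.vm:8428", "admin", "Vmsingle", "up")
    print(req.full_url)
    # urllib.request.urlopen(req) would return the JSON query result.
```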

node-exporter

Collects host (system) metrics.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: vm
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
        - name: node-exporter
          image: prom/node-exporter
          ports:
            - containerPort: 9100
          resources:
            requests:
              cpu: 50m
              memory: 20Mi
            limits:
              cpu: 500m
              memory: 400Mi
          securityContext:
            privileged: true
          args:
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            - --collector.filesystem.mount-points-exclude=^/(dev|proc|sys|var/lib/docker/.+|var/lib/kubelet/.+)($|/)
            - --collector.filesystem.fs-types-exclude=^(autofs|binfmt_misc|bpf|cgroup2?|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|iso9660|mqueue|nsfs|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|selinuxfs|squashfs|sysfs|tracefs)$
          volumeMounts:
            - mountPath: /host/proc
              name: proc
              readOnly: false
            - mountPath: /host/sys
              name: sys
              readOnly: false
            - mountPath: /host/root
              mountPropagation: HostToContainer
              name: root
              readOnly: true
      securityContext:
        runAsUser: 0
      tolerations:
        - operator: Exists
      volumes:
        - hostPath:
            path: /proc
          name: proc
        - hostPath:
            path: /sys
          name: sys
        - hostPath:
            path: /
          name: root
```

kube-state-metrics

Collects Kubernetes object metrics.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v2.12.0
  name: kube-state-metrics
  namespace: vm
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v2.12.0
  name: kube-state-metrics
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
    verbs:
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - daemonsets
      - deployments
      - replicasets
      - ingresses
    verbs:
      - list
      - watch
  - apiGroups:
      - apps
    resources:
      - statefulsets
      - daemonsets
      - deployments
      - replicasets
    verbs:
      - list
      - watch
  - apiGroups:
      - batch
    resources:
      - cronjobs
      - jobs
    verbs:
      - list
      - watch
  - apiGroups:
      - autoscaling
    resources:
      - horizontalpodautoscalers
    verbs:
      - list
      - watch
  - apiGroups:
      - authentication.k8s.io
    resources:
      - tokenreviews
    verbs:
      - create
  - apiGroups:
      - authorization.k8s.io
    resources:
      - subjectaccessreviews
    verbs:
      - create
  - apiGroups:
      - policy
    resources:
      - poddisruptionbudgets
    verbs:
      - list
      - watch
  - apiGroups:
      - certificates.k8s.io
    resources:
      - certificatesigningrequests
    verbs:
      - list
      - watch
  - apiGroups:
      - storage.k8s.io
    resources:
      - storageclasses
      - volumeattachments
    verbs:
      - list
      - watch
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - mutatingwebhookconfigurations
      - validatingwebhookconfigurations
    verbs:
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - networkpolicies
      - ingresses
    verbs:
      - list
      - watch
  - apiGroups:
      - coordination.k8s.io
    resources:
      - leases
    verbs:
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v2.12.0
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
  - kind: ServiceAccount
    name: kube-state-metrics
    namespace: vm
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v2.12.0
  name: kube-state-metrics
  namespace: vm
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics
  template:
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v2.12.0
    spec:
      containers:
        - image: bitnami/kube-state-metrics:2.12.0
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 5
            timeoutSeconds: 5
          name: kube-state-metrics
          ports:
            - containerPort: 8080
              name: http-metrics
            - containerPort: 8081
              name: telemetry
          readinessProbe:
            httpGet:
              path: /
              port: 8081
            initialDelaySeconds: 5
            timeoutSeconds: 5
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: kube-state-metrics
---
apiVersion: v1
kind: Service
metadata:
  # annotations:
  #   prometheus.io/scrape: 'true'
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v2.12.0
  name: kube-state-metrics
  namespace: vm
spec:
  clusterIP: None
  ports:
    - name: http-metrics
      port: 8080
      targetPort: http-metrics
    - name: telemetry
      port: 8081
      targetPort: telemetry
  selector:
    app.kubernetes.io/name: kube-state-metrics
```

vmagent

Scrapes (pulls) metrics from statically configured or auto-discovered jobs, and also accepts metrics pushed to it.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: vmagent
  namespace: vm
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: vmagent
rules:
  - apiGroups: ["", "discovery.k8s.io", "networking.k8s.io", "extensions"]
    resources:
      - nodes
      - nodes/metrics
      - nodes/proxy
      - services
      - endpoints
      - endpointslices
      - pods
      - ingresses
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources:
      - namespaces
      - configmaps
    verbs: ["get"]
  - nonResourceURLs: ["/metrics", "/metrics/resources"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: vmagent
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: vmagent
subjects:
  - kind: ServiceAccount
    name: vmagent
    namespace: vm
---
# vmagent-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: vmagent-config
  namespace: vm
data:
  scrape.yml: |
    global:
      scrape_interval: 30s
      scrape_timeout: 30s
      external_labels:
        origin_prometheus: prom-master
    scrape_configs:
      - job_name: 'vmagent'
        static_configs:
          - targets: ['localhost:8429']
      - job_name: 'vmalert'
        static_configs:
          - targets: ['vmalert.vm:8080']
      - job_name: 'alertmanager'
        static_configs:
          - targets: ['alertmanager.vm:9093']
      - job_name: 'victoria-metrics'
        static_configs:
          - targets: ['victoria-metrics.vm:8428']
        basic_auth:
          username: admin
          password: Vmsingle
      - job_name: 'grafana'
        static_configs:
          - targets: ['grafana.vm:3000']
      - job_name: 'k8s-node-exporter'
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:9100'
            target_label: __address__
            action: replace
          - action: replace
            regex: (.*)
            replacement: $1
            source_labels: [__meta_kubernetes_node_name]
            target_label: kubernetes_node
      - job_name: 'k8s-kubelet'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics
      - job_name: 'k8s-cadvisor'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        metric_relabel_configs:
          - source_labels: [instance]
            separator: ;
            regex: (.+)
            target_label: node
            replacement: $1
            action: replace
      - job_name: 'kube-state-metrics'
        kubernetes_sd_configs:
          - role: endpoints
            namespaces:
              names:
                - vm
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
            regex: kube-state-metrics
            replacement: $1
            action: keep
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vmagent
  namespace: vm
  labels:
    app: vmagent
spec:
  selector:
    matchLabels:
      app: vmagent
  template:
    metadata:
      labels:
        app: vmagent
    spec:
      serviceAccountName: vmagent
      containers:
        - name: vmagent
          image: "victoriametrics/vmagent:latest"
          imagePullPolicy: IfNotPresent
          args:
            - -promscrape.config=/config/scrape.yml
            - -remoteWrite.url=http://admin:Vmsingle@victoria-metrics.vm:8428/api/v1/write
            - -promscrape.maxScrapeSize=500MB
            - -remoteWrite.disableOnDiskQueue
            - -loggerTimezone=Asia/Shanghai
            #- -remoteWrite.dropSamplesOnOverload
          env:
            - name: TZ
              value: Asia/Shanghai
          ports:
            - name: http
              containerPort: 8429
          volumeMounts:
            - name: config
              mountPath: /config
          resources:
            limits:
              cpu: '2'
              memory: 2Gi
            requests:
              cpu: 100m
              memory: 256Mi
      volumes:
        - name: config
          configMap:
            name: vmagent-config
---
apiVersion: v1
kind: Service
metadata:
  name: vmagent
  namespace: vm
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "8429"
spec:
  selector:
    app: vmagent
  ports:
    - name: http
      port: 8429
      targetPort: http
  type: NodePort
```

The Prometheus-style scrape jobs are configured in the ConfigMap. external_labels are Prometheus external labels. When several Prometheus or vmagent instances write to the same VictoriaMetrics, these labels tell the sources apart. Every vmagent must set one, with the key origin_prometheus and the vmagent's name as the value.
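The k8s-node-exporter job relies on a replace relabeling step. role: node discovers each node at its kubelet address (port 10250), and the regex rewrites that address to port 9100, where the hostNetwork node-exporter DaemonSet listens. The Python sketch below mimics that single step. It is a simplification: it handles one source label only, and Python's re module stands in for the Go regex engine vmagent uses, so unusual patterns may behave differently.

```python
import re
from typing import Optional


def relabel_replace(value: str, regex: str, replacement: str) -> Optional[str]:
    """Apply a Prometheus-style 'replace' action to one source label value.

    The regex is fully anchored, as in relabel_configs. Returns the new value
    for target_label, or None when the regex does not match (label unchanged).
    """
    match = re.fullmatch(regex, value)
    if match is None:
        return None
    # Translate ${1} / $1 group references into Python's \g<1> syntax.
    python_repl = re.sub(
        r"\$\{(\d+)\}|\$(\d+)",
        lambda g: "\\g<" + (g.group(1) or g.group(2)) + ">",
        replacement,
    )
    return match.expand(python_repl)


if __name__ == "__main__":
    print(relabel_replace("192.168.1.10:10250", r"(.*):10250", "${1}:9100"))
```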

vmalert

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: vmalert-config
  namespace: vm
data:
  node.yaml: |-
    groups:
      - name: Itself
        rules:
          - alert: Exporter状态
            expr: up == 0
            for: 3m
            labels:
              alertype: itself
              severity: critical
            annotations:
              description: "{{ $labels.job }}: abnormal\n> {{ $labels.origin_prometheus }}: {{ $labels.instance }}"
  record.yaml: |
    groups:
      - name: node_usage_record_rules
        interval: 1m
        rules:
          - record: cpu:usage:rate1m
            expr: (1 - avg(irate(node_cpu_seconds_total{mode="idle"}[3m])) by (origin_prometheus,instance,job)) * 100
          - record: mem:usage:rate1m
            expr: (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100
---
apiVersion: v1
kind: Service
metadata:
  name: vmalert
  namespace: vm
  labels:
    app: vmalert
spec:
  ports:
    - name: vmalert
      port: 8080
      targetPort: 8080
  type: NodePort
  selector:
    app: vmalert
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vmalert
  namespace: vm
  labels:
    app: vmalert
spec:
  selector:
    matchLabels:
      app: vmalert
  template:
    metadata:
      labels:
        app: vmalert
    spec:
      containers:
        - name: vmalert
          image: victoriametrics/vmalert:latest
          imagePullPolicy: IfNotPresent
          args:
            - -rule=/etc/ruler/*.yaml
            - -datasource.url=http://admin:Vmsingle@victoria-metrics.vm:8428
            - -notifier.url=http://alertmanager.vm:9093
            - -remoteWrite.url=http://admin:Vmsingle@victoria-metrics.vm:8428
            - -evaluationInterval=15s
            - -httpListenAddr=0.0.0.0:8080
          env:
            - name: TZ
              value: Asia/Shanghai
          resources:
            limits:
              cpu: '2'
              memory: 2Gi
            requests:
              cpu: 100m
              memory: 256Mi
          ports:
            - containerPort: 8080
              name: http
          volumeMounts:
            - mountPath: /etc/ruler/
              name: ruler
              readOnly: true
      volumes:
        - configMap:
            name: vmalert-config
          name: ruler
```

alertmanager
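The inhibit_rules in the Alertmanager config below mute a target alert while a matching source alert is firing, as long as both alerts carry the same values for the labels listed in equal (here pod). The Python sketch below illustrates that check for the rule where an R4 alert inhibits R1/R2/R3 alerts. It simplifies Alertmanager's behavior: exact matchers are treated as anchored regexes, an alert never inhibits itself, and the pod names are hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class InhibitRule:
    source_match_re: dict
    target_match_re: dict
    equal: list = field(default_factory=list)


def matches(labels: dict, matchers: dict) -> bool:
    """True if every matcher regex fully matches the label value ('' if absent)."""
    return all(re.fullmatch(rx, labels.get(name, "")) for name, rx in matchers.items())


def is_inhibited(target: dict, firing: list, rule: InhibitRule) -> bool:
    """Return True if some other firing alert inhibits target under this rule."""
    if not matches(target, rule.target_match_re):
        return False
    for source in firing:
        if source is target:
            continue  # simplification: an alert never inhibits itself
        if matches(source, rule.source_match_re) and all(
            source.get(name, "") == target.get(name, "") for name in rule.equal
        ):
            return True
    return False


if __name__ == "__main__":
    rule = InhibitRule(
        source_match_re={"inhibit": "K8S_Pod_status", "severity": "R4"},
        target_match_re={"inhibit": "K8S_Pod_status", "severity": "R1|R2|R3"},
        equal=["pod"],
    )
    src = {"inhibit": "K8S_Pod_status", "severity": "R4", "pod": "web-1"}
    tgt = {"inhibit": "K8S_Pod_status", "severity": "R2", "pod": "web-1"}
    print(is_inhibited(tgt, [src, tgt], rule))  # True: same pod, R4 source firing
```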

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-config
  namespace: vm
data:
  config.yml: |-
    global:
      resolve_timeout: 10m
    route:
      group_by: ['origin_prometheus','alertname']
      group_wait: 15s
      group_interval: 1m
      repeat_interval: 20m
      receiver: 'webhook-dd'
      routes:
        - receiver: 'webhook-dd'
          continue: false
          match_re:
            alertname: .*
    receivers:
      - name: 'webhook-dd'
        webhook_configs:
          - url: 'http://alert-webhook.monit/node/ddkey=f290f47a-ce05-4955-8d21-dbc28ca'
            send_resolved: true
    inhibit_rules:
      - source_match_re:
          inhibit: K8S_Pod_status
        target_match_re:
          alertname: K8S_Pod_CPU总使用率|(K8S_Pod_内存使用).*
        equal: ['pod']
      - source_match_re:
          alertname: (K8S_Pod_内存使用).*
          severity: 'R2'
        target_match_re:
          alertname: (K8S_Pod_内存使用).*
          severity: 'R1'
        equal: ['pod']
      - source_match:
          inhibit: K8S_Pod_status
          severity: 'R4'
        target_match_re:
          inhibit: K8S_Pod_status
          severity: R1|R2|R3
        equal: ['pod']
      - source_match:
          inhibit: K8S_Pod_status
          severity: 'R2'
        target_match_re:
          inhibit: K8S_Pod_status
          severity: R1|R3
        equal: ['pod']
      - source_match:
          inhibit: K8S_Pod_status
          severity: 'R3'
        target_match:
          inhibit: K8S_Pod_status
          severity: 'R1'
        equal: ['pod']
---
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: vm
  labels:
    app: alertmanager
spec:
  selector:
    app: alertmanager
  type: NodePort
  ports:
    - name: web
      port: 9093
      targetPort: http
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: vm
  labels:
    app: alertmanager
spec:
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      labels:
        app: alertmanager
    spec:
      volumes:
        - name: alertcfg
          configMap:
            name: alertmanager-config
      containers:
        - name: alertmanager
          image: prom/alertmanager:latest
          imagePullPolicy: IfNotPresent
          env:
            - name: TZ
              value: Asia/Shanghai
          args:
            - "--config.file=/etc/alertmanager/config.yml"
          ports:
            - containerPort: 9093
              name: http
          volumeMounts:
            - mountPath: "/etc/alertmanager"
              name: alertcfg
          resources:
            limits:
              cpu: '2'
              memory: 2Gi
            requests:
              cpu: 100m
              memory: 256Mi
```

grafana

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-data
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  storageClassName: fast
  local:
    path: /data/grafana
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - master2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data
  namespace: vm
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: fast
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: vm
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: grafana-data
      containers:
        - name: grafana
          image: grafana/grafana:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3000
              name: grafana
          securityContext:
            runAsUser: 0
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: GF_SECURITY_ADMIN_USER
              value: admin
            - name: GF_SECURITY_ADMIN_PASSWORD
              value: ADMIN
          readinessProbe:
            failureThreshold: 10
            httpGet:
              path: /api/health
              port: 3000
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 30
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /api/health
              port: 3000
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          resources:
            limits:
              cpu: '2'
              memory: 2Gi
            requests:
              cpu: 150m
              memory: 512Mi
          volumeMounts:
            - mountPath: /var/lib/grafana
              name: storage
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: vm
spec:
  type: NodePort
  ports:
    - port: 3000
  selector:
    app: grafana
```

Apply all manifests:

```shell
kubectl apply -f single.yaml
kubectl apply -f node-exporter.yaml
kubectl apply -f kube-state-metrics.yaml
kubectl apply -f vmagent.yaml
kubectl apply -f vmalert.yaml
kubectl apply -f alertmanager.yaml
kubectl apply -f grafana.yaml
```

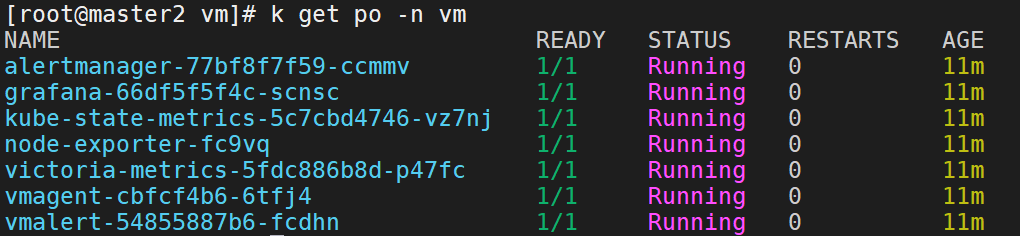

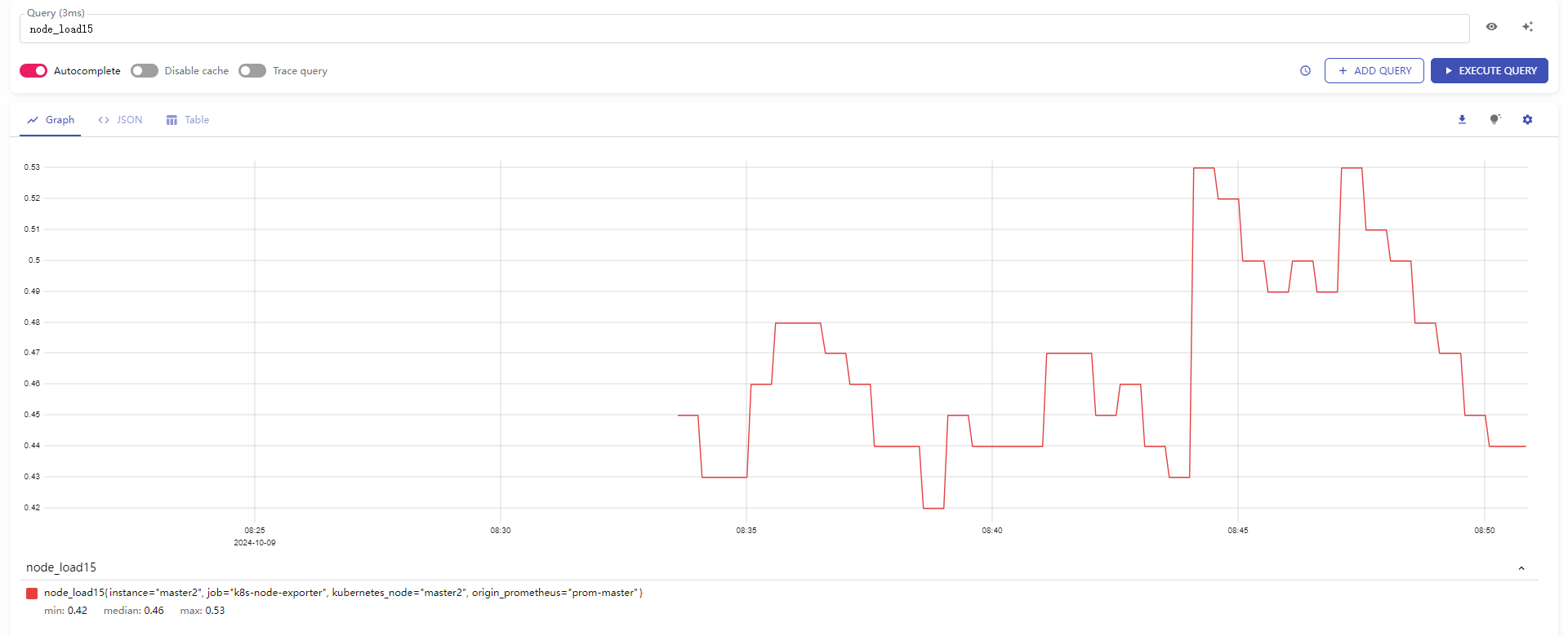

测试

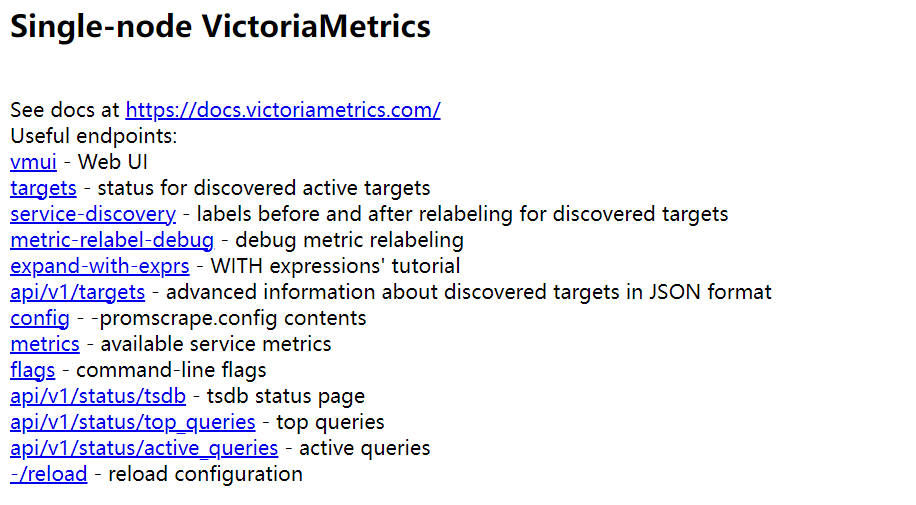

访问vm,输入用户名密码。

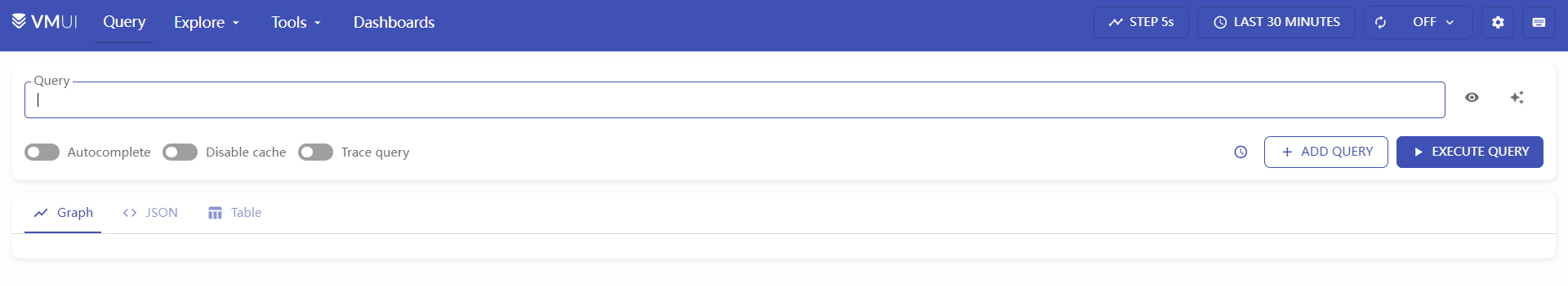

可以点击vmui来访问web ui。

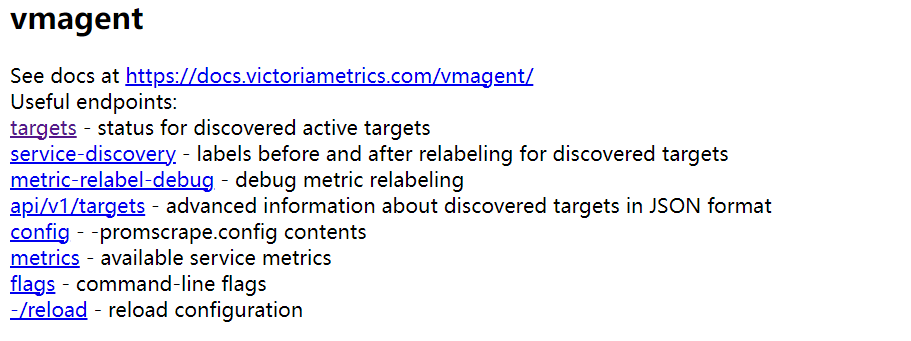

访问vmagent。

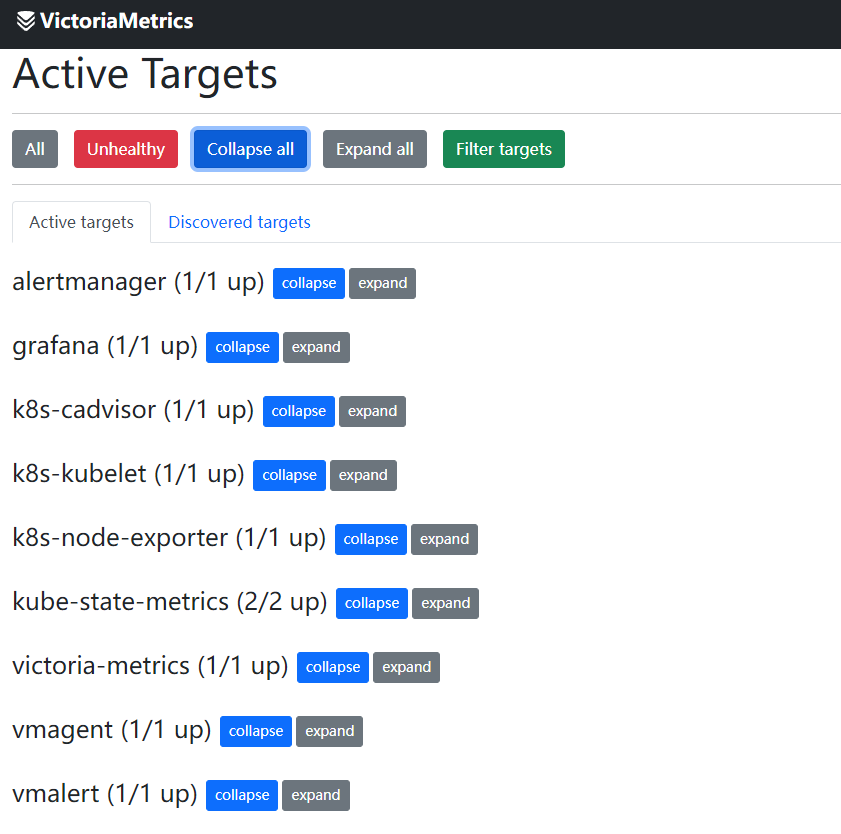

查看target。

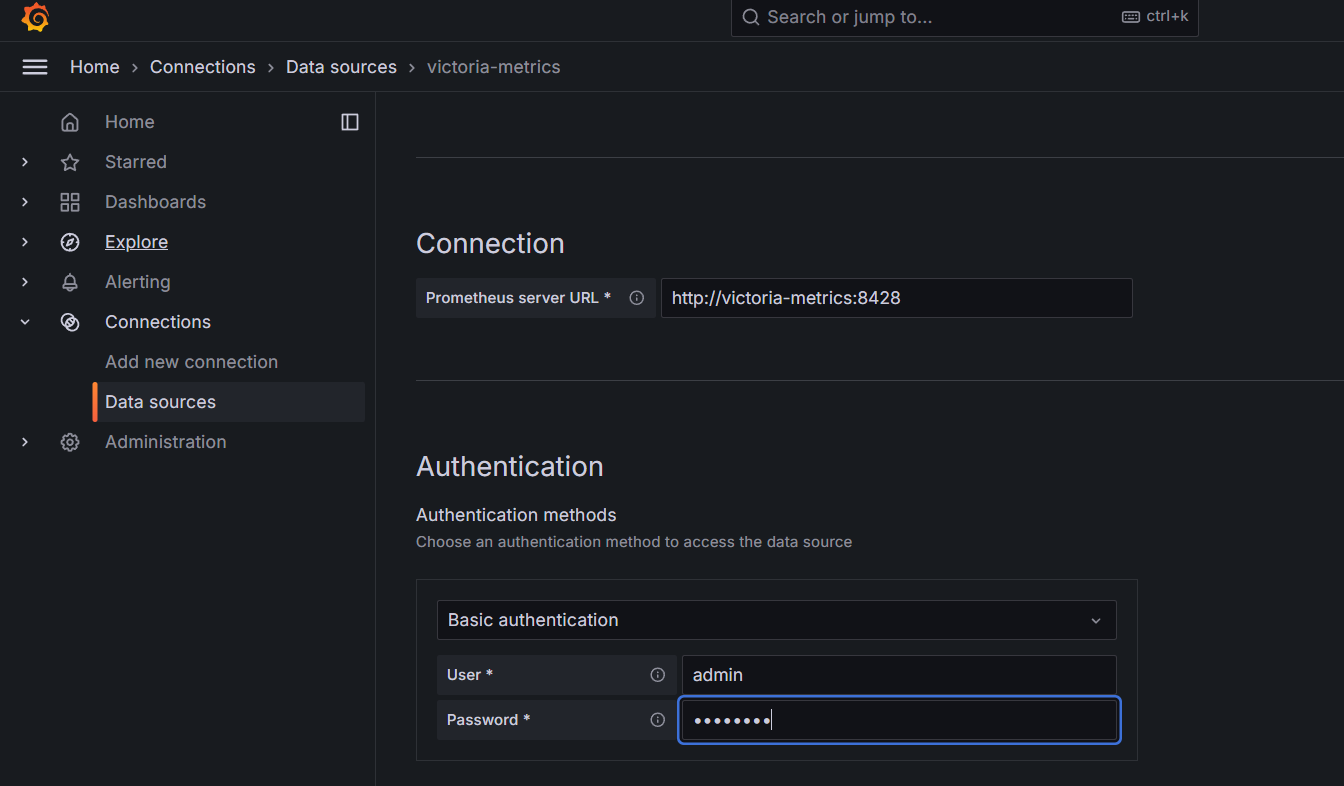

访问grafana,添加数据源,指定victoria-metrics svc的地址并添加用户名密码。
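Instead of using the UI, the data source can also be created with Grafana's HTTP API (POST /api/datasources). The minimal Python sketch below only builds the request. It assumes the in-cluster addresses grafana.vm:3000 and victoria-metrics.vm:8428, the admin credentials from the manifests, and a data source name, VictoriaMetrics, chosen for illustration. VictoriaMetrics is registered under Grafana's built-in prometheus data source type.

```python
import base64
import json
from urllib.request import Request


def build_datasource_request(grafana_url: str, grafana_auth: tuple,
                             vm_url: str, vm_auth: tuple) -> Request:
    """Build a Grafana API request that registers VictoriaMetrics as a Prometheus data source."""
    payload = {
        "name": "VictoriaMetrics",  # illustrative name
        "type": "prometheus",       # VictoriaMetrics serves the Prometheus query API
        "access": "proxy",          # Grafana's backend talks to VictoriaMetrics
        "url": vm_url,
        "basicAuth": True,
        "basicAuthUser": vm_auth[0],
        "secureJsonData": {"basicAuthPassword": vm_auth[1]},
    }
    token = base64.b64encode(f"{grafana_auth[0]}:{grafana_auth[1]}".encode()).decode()
    return Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_datasource_request(
        "http://grafana.vm:3000", ("admin", "ADMIN"),
        "http://victoria-metrics.vm:8428", ("admin", "Vmsingle"),
    )
    print(req.get_method(), req.full_url)
```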

Import dashboards 16098 and 13105.

The dashboards now show data.

Open vmalert.
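The vmalert UI also lists the recording rules. Here is a worked example of what cpu:usage:rate1m computes. irate() takes the last two samples of each CPU's idle-seconds counter within the [3m] window and turns them into an idle fraction. avg ... by (origin_prometheus, instance, job) then averages those fractions across the CPUs of a node. Finally, the result is subtracted from 1 and scaled to a percentage. The Python sketch uses hypothetical counter values for a 2-CPU node with samples 30 s apart.

```python
def irate(prev: tuple, last: tuple) -> float:
    """Per-second rate from the last two (timestamp_s, counter) samples, like irate()."""
    (t0, v0), (t1, v1) = prev, last
    delta = v1 - v0 if v1 >= v0 else v1  # on a counter reset, count from zero
    return delta / (t1 - t0)


def cpu_usage_percent(idle_rates: list) -> float:
    """(1 - average idle fraction across CPUs) * 100, as in cpu:usage:rate1m."""
    return (1 - sum(idle_rates) / len(idle_rates)) * 100


if __name__ == "__main__":
    cpu0 = irate((0, 1000.0), (30, 1024.0))  # idle 24 of 30 s -> 0.8
    cpu1 = irate((0, 1000.0), (30, 1015.0))  # idle 15 of 30 s -> 0.5
    print(f"{cpu_usage_percent([cpu0, cpu1]):.1f}%")  # 35.0%
```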